How to Map & Transform 5M+ Row CSV Files When Excel Crashes

Quick Answer: How to Handle 5M+ Row CSVs

If you cannot open a file because it exceeds Excel's 1,048,576 row limit, you have four architectural options to process the data without exhausting your machine's memory:

- The Developer Way: Write a Python script using 'pandas' with 'chunksize' to stream the file in batches.

- The Database Way: Spin up a local SQL database and use a client like DBeaver to import the raw data, then clean it via SQL queries.

- The Unix Way: Use CLI tools like 'split', 'awk', or 'sed' to slice the file into smaller pieces (limited visibility).

- The SaaS Way: Use a streaming transformation platform like DataFlowMapper that visualizes headers and maps data without loading the full file.

The Problem: It's Not Just a Row Limit, It's a Memory Limit

Every data professional eventually hits the wall. You attempt to open a client's transaction history or a legacy system export, and Excel hangs, crashes, or displays the dreaded message: "File not loaded completely."

This happens because standard tools, including Excel and many browser-based "modern" CSV importers, attempt to load the entire dataset into RAM.

- Excel: Hard limit of 1,048,576 rows.

- Browser Importers: Often crash around 50MB-100MB because they rely on your browser's allocated memory.

When you are dealing with 5 million rows (often 1GB+), you cannot use memory-based tools. You need Streaming Architecture.
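To make the distinction concrete, here is a minimal sketch of the streaming idea in plain Python. The file is read one row at a time, so memory use stays roughly flat no matter how large the file is. The function name `count_rows` and the sample data are illustrative assumptions, not part of any tool discussed here.

```python
import csv
import io


def count_rows(lines):
    """Count data rows by streaming: only one row is held in memory at a time."""
    reader = csv.reader(lines)
    next(reader, None)  # skip the header row, if there is one
    return sum(1 for _ in reader)


# Demo on a small in-memory sample. With a real file you would pass
# open("large_file_5m_rows.csv", newline="") instead.
sample = io.StringIO("id,raw_date\n1,2024-01-05\n2,2024-02-10\n3,not-a-date\n")
print(count_rows(sample))
```

A memory-based tool does the opposite: it materializes every row before you can do anything with the first one.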

Here is a technical comparison of the main ways to handle large-scale transformations.

Method 1: The Developer Way (Python & Pandas)

For teams with engineering resources, Python is the standard alternative. However, a plain 'pd.read_csv' call still loads the whole file into RAM and can crash your machine unless you explicitly architect for streaming.

You must use the 'chunksize' parameter to process the file in batches.

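    import pandas as pd

    # Process in chunks of 50,000 rows to avoid memory crashes
    chunk_size = 50000
    source_file = 'large_file_5m_rows.csv'
    output_file = 'cleaned_output.csv'

    for i, chunk in enumerate(pd.read_csv(source_file, chunksize=chunk_size)):
        # Apply transformation logic here
        chunk['clean_date'] = pd.to_datetime(chunk['raw_date'], errors='coerce')

        # Write the header with the first chunk only, then append the rest.
        # index=False keeps the pandas row index out of the output file.
        chunk.to_csv(
            output_file,
            mode='w' if i == 0 else 'a',
            header=(i == 0),
            index=False,
        )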

Verdict:

- Pros: Free, infinite flexibility.

- Cons: The Maintenance Tax. For Implementation Teams, this is a trap. If you have 20 clients with 20 different file formats, you are now maintaining 20 custom scripts. If a client renames or removes a column your script relies on, the script breaks. Non-technical stakeholders cannot run or debug this.
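One way to soften that maintenance burden is to fail fast when a client's file layout changes, before any rows are processed. The sketch below reads only the header row with `nrows=0` and reports which expected columns are absent. The column names in `REQUIRED_COLUMNS` and the helper `missing_columns` are hypothetical, chosen only for illustration.

```python
import io

import pandas as pd

# Hypothetical list of columns the transformation depends on
REQUIRED_COLUMNS = {"raw_date", "amount"}


def missing_columns(source, required=REQUIRED_COLUMNS):
    """Read only the header row (nrows=0) and report required columns that are absent."""
    header = pd.read_csv(source, nrows=0)
    return sorted(set(required) - set(header.columns))


# Demo with an in-memory file in which the client renamed 'amount'
sample = io.StringIO("raw_date,total_amount\n2024-01-05,10.50\n")
print(missing_columns(sample))
```

A check like this does not remove the need to maintain per-client scripts, but it turns a confusing mid-run failure into a clear message at the start.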

Method 2: The Database Way (SQL & DBeaver)

If you are comfortable with SQL, you can turn this into a database problem. Tools like DBeaver allow you to import large CSVs into a local Postgres or MySQL instance relatively easily.

The Workflow:

- Install Postgres/MySQL locally.

- Create a table with a schema that matches your CSV (hoping you guess the data types right).

- Use DBeaver's "Import Data" wizard.

- Write SQL 'UPDATE' statements to clean the data.

- Export the query result.
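The workflow above can be sketched in a few lines of Python. To keep the example self-contained it uses the standard library's SQLite module rather than Postgres or MySQL, which is a substitution made here for illustration only. It also stages every column as TEXT and writes cleaned values to a separate table instead of running 'UPDATE' in place. The table names, column names, and the `load_and_clean` helper are assumptions.

```python
import csv
import io
import sqlite3


def load_and_clean(lines, conn, batch_size=50_000):
    """Stream CSV rows into a TEXT staging table, then build a cleaned copy."""
    reader = csv.reader(lines)
    next(reader)  # skip header; this sketch assumes columns: id, raw_amount
    conn.execute("CREATE TABLE staging (id TEXT, raw_amount TEXT)")
    batch = []
    for row in reader:
        batch.append(row)
        if len(batch) >= batch_size:
            conn.executemany("INSERT INTO staging VALUES (?, ?)", batch)
            batch.clear()
    if batch:
        conn.executemany("INSERT INTO staging VALUES (?, ?)", batch)
    # Clean into a new table so the staging rows are never modified.
    # The GLOB test is a crude filter: it keeps values that start with a digit.
    conn.execute(
        """
        CREATE TABLE cleaned AS
        SELECT id, CAST(TRIM(raw_amount) AS REAL) AS amount
        FROM staging
        WHERE TRIM(raw_amount) GLOB '[0-9]*'
        """
    )
    conn.commit()


conn = sqlite3.connect(":memory:")
sample = io.StringIO("id,raw_amount\n1, 10.5\n2,n/a\n3,7\n")
load_and_clean(sample, conn)
print(conn.execute("SELECT COUNT(*) FROM staging").fetchone()[0])
print(conn.execute("SELECT id, amount FROM cleaned ORDER BY id").fetchall())
```

Staging as TEXT means a stray non-numeric value cannot abort the load, and keeping the staging table untouched gives you something to compare against if a cleaning query turns out to be wrong.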

Verdict:

- Pros: Powerful querying capabilities. DBeaver handles large imports well.

- Cons: Heavy Infrastructure. You are standing up a database server just to clean a spreadsheet, which is overkill for one-off imports. Furthermore, "Mapping" is rigid. If you map a CSV column to an 'INT' database column and one row contains text, the entire import can fail. Lack of Audit Trail. SQL 'UPDATE' statements are destructive. If you run a cleaning query wrong, you have overwritten the imported values and must re-import the file. DataFlowMapper maintains a non-destructive mapping layer, so your source file stays pristine.

Method 3: The Unix Way (CLI Tools)

If you are a Linux/Mac power user, you can use command-line tools like 'split', 'sed', or 'awk'. These stream data by default and are incredibly fast.

The Workflow:

- Open Terminal.

- Use 'split -l 1000000 large_file.csv' to break the file into Excel-friendly chunks of at most 1,000,000 lines each. Note that only the first chunk will contain the header row.

- Open each chunk in Excel, make edits, save.

- Use 'cat' to merge them back together.
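Because 'split' cuts purely by line count, only the first piece keeps the header row, which makes the later pieces harder to read in Excel. A minimal Python sketch of the same idea that repeats the header in every piece might look like the following. The function name `split_csv` and the `part_001.csv` naming scheme are assumptions made for this example.

```python
import csv
import os
import tempfile


def split_csv(source_path, out_dir, rows_per_file=1_000_000):
    """Split a CSV into numbered parts, repeating the header in each part."""
    paths = []
    with open(source_path, newline="") as src:
        reader = csv.reader(src)
        header = next(reader)
        writer, out = None, None
        for i, row in enumerate(reader):
            if i % rows_per_file == 0:
                if out:
                    out.close()
                path = os.path.join(out_dir, f"part_{len(paths) + 1:03d}.csv")
                out = open(path, "w", newline="")
                writer = csv.writer(out)
                writer.writerow(header)
                paths.append(path)
            writer.writerow(row)
        if out:
            out.close()
    return paths


# Demo: a 5-row file split into parts of 2 data rows each
with tempfile.TemporaryDirectory() as tmp:
    source = os.path.join(tmp, "large_file.csv")
    with open(source, "w", newline="") as f:
        f.write("id,name\n" + "".join(f"{n},row{n}\n" for n in range(5)))
    parts = split_csv(source, tmp, rows_per_file=2)
    print([os.path.basename(p) for p in parts])
```

If you merge the edited parts back together afterwards, remember to drop the repeated header from every part except the first.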

Verdict:

- Pros: Fastest method. Zero cost. Built into the OS.

- Cons: Blind Editing. You cannot see what you are doing. If you make a regex replace error in 'sed', you might destroy data in row 4,000,000 without knowing until the client complains. It solves the size problem but creates a visibility problem.

Method 4: The Data Transformation Platform (DataFlowMapper)

This is the modern approach for implementation teams who need the power of streaming without the overhead of maintaining scripts or databases.

DataFlowMapper handles 5M+ row files using a proprietary Server-Side Streaming Architecture. Unlike browser-based parsers, we process the stream on our backend, keeping your machine light.

Solving the "Blind Mapping" Problem

The hardest part of a 5M row file isn't just processing it; it's mapping it.

- The Issue: If you can't open the file in Excel, you can't see the headers. If you can't see the headers, how do you know that Column C is "Last Name"?

- The Solution (Header Extraction): DataFlowMapper reads only the first few bytes of the file. This allows us to extract headers and generate a visual mapping interface instantly, even if the file is 10GB. You can map columns, build logic, and validate data structures without ever loading the full file.
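The general idea of header extraction is easy to illustrate, although the sketch below is not DataFlowMapper's implementation, which is proprietary. It simply reads the first CSV record and stops, so the rest of the file is never parsed. The helper name `read_headers` and the sample columns are assumptions.

```python
import csv
import io


def read_headers(lines):
    """Return the column names from the first CSV record without reading further."""
    return next(csv.reader(lines), [])


# Demo on an in-memory sample. With a real file you would pass
# open("large_file_5m_rows.csv", newline="") instead.
sample = io.StringIO("First Name,Last Name,Signup Date\nAda,Lovelace,1843-01-01\n")
for position, name in enumerate(read_headers(sample), start=1):
    print(position, name)
```

Listing the position next to each name is what lets you confirm, for example, which column actually holds "Last Name" before building any mapping.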

Finding the Needle in the Haystack (The 1% Rule)

If a 5 million row file has a 1% error rate, that is 50,000 errors.

- Python/CLI: You get a log file with 50,000 lines of text. Good luck finding patterns.

- DBeaver: You have to write complex 'WHERE' clauses to find issues.

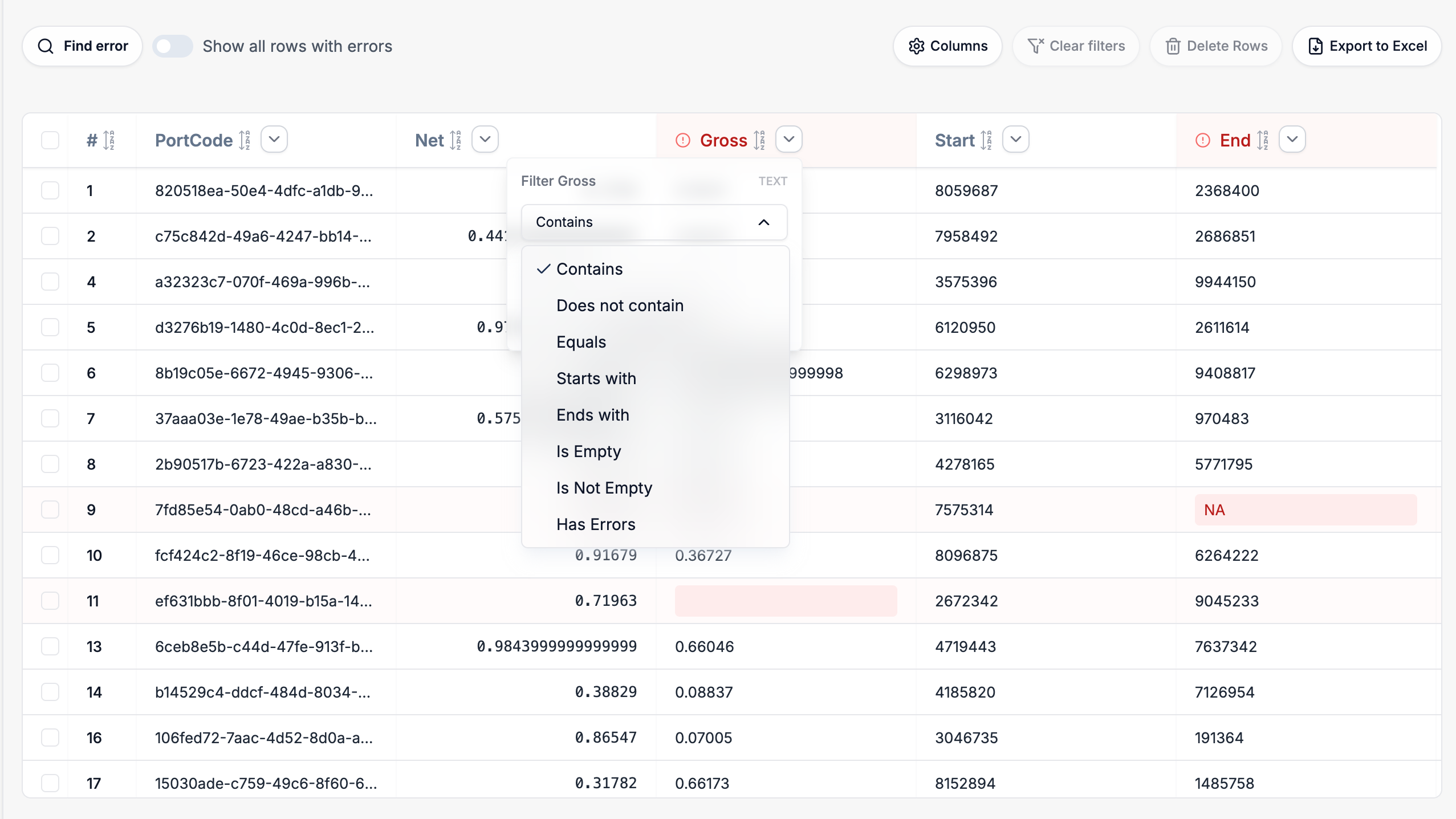

- DataFlowMapper: We provide a Paginated Data Viewer that gives you a granular breakdown of exactly which rows failed and why. You can filter by 'Status = Error' to see a dedicated table of just the problematic rows with the specific validation error highlighted (e.g., "Date format must be YYYY-MM-DD"). This lets you spot patterns like "Oh, every row from the 'New York' office has a bad date format," fix the logic globally, and re-run.
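If you stay with a script, you can approximate this kind of pattern-spotting by tallying failures per group instead of logging every bad row. The sketch below streams the file in chunks and counts rows whose date is not in YYYY-MM-DD format, grouped by office. The column names 'office' and 'raw_date' and the helper `date_errors_by_office` are hypothetical.

```python
import io
from collections import Counter

import pandas as pd


def date_errors_by_office(source, chunksize=50_000):
    """Count rows whose raw_date is not YYYY-MM-DD, grouped by office."""
    errors = Counter()
    for chunk in pd.read_csv(source, chunksize=chunksize, dtype=str):
        parsed = pd.to_datetime(chunk["raw_date"], format="%Y-%m-%d", errors="coerce")
        bad_offices = chunk.loc[parsed.isna(), "office"]
        for office, count in bad_offices.value_counts().items():
            errors[office] += int(count)
    return errors


sample = io.StringIO(
    "office,raw_date\n"
    "Boston,2024-01-05\n"
    "New York,01/05/2024\n"
    "New York,02/10/2024\n"
    "Boston,2024-03-01\n"
)
print(date_errors_by_office(sample).most_common())
```

A summary like this turns 50,000 individual log lines into a short ranked list, which is usually enough to see whether one source is responsible for most of the failures.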

Comparison: Selecting the Right Tool

| Feature | Excel | Python Scripts | DBeaver / SQL | DataFlowMapper |
|---|---|---|---|---|
| Max Rows | ~1 Million | Unlimited | Unlimited | Unlimited |
| Prerequisites | MS Office | Python/Pandas installed | Local DB + SQL knowledge | None (Browser) |
| Mapping Experience | Visual | Code-based | Schema Definition | Visual & Automapped |
| Error Visibility | High | Low (Logs) | Medium (Queries) | High (UI Filters) |
| Team Scalability | Low | Low (Siloed code) | Medium | High (Shared Templates) |

Conclusion

Don't let tools dictate your data limits. When Excel crashes, it is a signal to move from spreadsheet software to data transformation software.

While tools like Python and DBeaver are powerful for individuals, DataFlowMapper offers the only solution that combines streaming power with visual mapping and team collaboration.

Start your free trial today and map your first 5M+ row file in minutes.

Frequently Asked Questions

Why does Excel crash with large CSV files?

Excel has a hard limit of 1,048,576 rows per sheet. Attempting to load files larger than this causes data truncation or memory crashes because Excel attempts to load the entire dataset into RAM.

How can I map columns in a large CSV without opening it?

Tools with 'Header Extraction' capabilities, like DataFlowMapper, read only the first few bytes of a file to visualize the schema. This allows you to map columns and build logic without loading the full 5GB+ file into memory.

Is DBeaver good for large CSV files?

DBeaver is excellent for loading CSVs into a database, but it requires setting up a SQL database first. It is not a transformation tool; it is a database client. For cleaning and mapping, you would need to write complex SQL scripts.
