Data Migration Cost Analysis & Calculator (2025 Analysis)

The True Costs of Data Migration: A Quantitative Analysis for Implementation Teams & Consultants

Executive Summary: Data migration is a critical component of digital transformation, legacy system conversion, and new software rollouts, and it carries significant complexity and financial risk. Industry data paints a sobering picture: a large majority of data migration projects (often cited as over 80% [1, 2, 3]) run over budget and past deadlines, and a substantial share fail to meet their core objectives. Implementation teams, data onboarding specialists, and consultants often handle intricate CSV, Excel, and JSON datasets in customer data validation and onboarding scenarios; for them, a quantitative understanding of the true costs across the entire data migration process is essential. This analysis dissects those costs: time allocation across phases, the financial penalties of errors and rework (especially with complex transformation logic), the often-overlooked opportunity costs of delays, the impact of downtime, and the ROI implications of the chosen data migration methodology and ETL tools. We provide data-backed insights tailored to professionals handling these data conversion challenges.

Key Takeaways & Statistics:

- High Failure & Overrun Rates: Between 30% and 83% of data migration projects fail or significantly miss targets [1, 2, 3]. Budget overruns average 14-30% [2, 3, 20], while schedule delays average a staggering 30-41% [2]. These figures often mask even higher costs for projects involving complex file transformations.

- Planning Dominates Effort: The pre-migration phase (detailed planning, data assessment, strategy, tool selection) consumes 50-70% of total project effort [8, 61]. Skimping here, especially on understanding source data quality and transformation complexity, directly leads to downstream cost explosions.

- Data Quality is Foundational: Poor source data quality (incompleteness, inconsistency, duplicates in files) is a primary culprit behind errors, costly rework cycles, and project delays [1]. Early investment in data cleaning software and robust validation processes is paramount, particularly before complex logic is applied.

- Complexity Amplifies Costs: Sheer data volume matters, but complexity—especially in transforming unstructured/semi-structured data like CSV/Excel, handling nested JSON, implementing intricate business logic, or integrating with legacy systems—is often a more significant cost driver than volume alone [3, 8, 19, 47]. Manual mapping and logic building for complex rules significantly inflate effort.

- Downtime Costs are Crippling: Unplanned downtime during migration can cost businesses anywhere from $137-$427 per minute (small businesses) to over $9,000 per minute (large enterprises) [40], excluding indirect costs like reputational damage [22, 38]. Minimizing this is critical.

- Strategy & Tooling Dictate ROI: "Big Bang" migrations offer potential speed but carry immense risk. Phased approaches are safer but traditionally slower and costlier. Modern data migration tools, including comprehensive data onboarding platforms with features like AI data mapping and integrated data validation automation, can significantly optimize phased strategies, balancing risk and accelerating ROI [12, 13].

- Modern Tooling is Non-Negotiable for Complexity: Relying on manual processes, basic scripts, or simple importers for complex migrations involving transformation rules is inefficient and error-prone. Leveraging platforms offering flexible data transformation software (visual logic builders, code flexibility like Python), AI data mapping tools, robust validation, and seamless API/DB connectivity is crucial for efficiency, accuracy, and cost control in demanding data onboarding scenarios.

Introduction: Understanding Data Migration Costs: Strategic Imperative & Financial Reality

Data migration, the systematic transfer of data between storage systems, formats, or applications [14], is far more than a technical exercise. It underpins critical business transformations, enables cloud adoption, facilitates mergers and acquisitions, allows for legacy conversion, and powers the implementation of new software solutions [9, 15]. Whether onboarding new clients using a sophisticated CSV importer with ETL capabilities, migrating historical financial data via Excel files, or consolidating disparate systems using JSON APIs, the success of these strategic initiatives hinges on the ability to move data accurately, efficiently, and securely.

Yet, the path is notoriously treacherous, especially for implementation teams and consultants dealing with the messy reality of client-provided data in formats like CSV, Excel, and JSON. Gartner's research suggests a stark 83% of data migration projects either fail outright or significantly exceed their allocated budgets and timelines [2]. Findings from Oracle and The Bloor Group corroborate this, indicating failure or overrun rates surpassing 80%, with average cost overruns hovering around 30% and schedule slippage averaging 41% [3, 2]. McKinsey further quantifies this, noting an average cost increase of 14% compared to initial projections [20].

Why such dismal statistics, particularly when the goal seems straightforward – move data from A to B? The complexity, and therefore the cost, arises from a confluence of factors acutely felt by implementation professionals:

- Data Heterogeneity & Format Quirks: Data residing in disparate sources and formats (databases, flat files like CSV/Excel with inconsistent delimiters or headers, complex nested JSON, APIs) requires flexible handling [15]. Simple tools often break on real-world file variations.

- Poor Data Quality: Pre-existing issues like duplicate records, inconsistent formatting (dates, addresses), missing values, and embedded errors within files often surface only during migration, and then demand data cleaning software or significant manual effort [1].

- Complex Transformations & Business Logic: The need to not just move, but reshape, cleanse, enrich, and validate data according to intricate business rules (e.g., mapping transaction types, applying conditional logic based on client tiers, calculating derived fields) requires powerful data transformation software, not just basic mapping [35]. This is often the most underestimated cost driver in file-based onboarding.

- Operational Disruptions: The risk of significant downtime impacting business continuity and customer experience during cutover [8, 20].

- Hidden Dependencies & Integration Needs: Undocumented relationships between data sets or the need to integrate with legacy applications or external APIs via lookups during the migration process cause unforeseen issues and require flexible connectivity [1, 24, 41].

- Scale and Velocity: The sheer volume and increasing velocity of enterprise data make manual or simplistic approaches untenable, demanding efficient, repeatable processes [16].

These challenges underscore the urgent need for a quantitative, structured approach to understanding and managing data migration costs, particularly for the implementation teams and consultants on the front lines of the data onboarding process who need efficient, reliable data onboarding tools.

Deconstructing Data Migration Costs: A Phased Process Breakdown

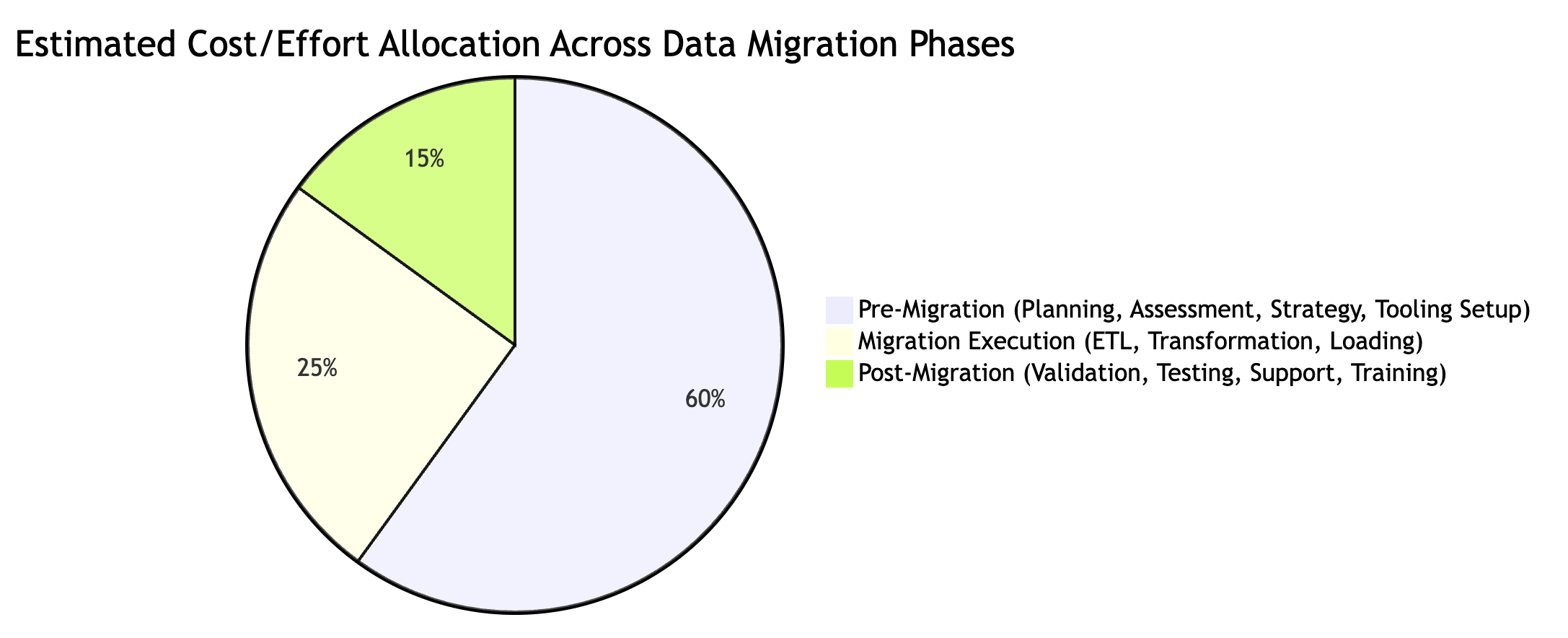

To effectively budget and manage resources, it's crucial to understand where costs accumulate throughout the typical data migration process. While project specifics vary, costs generally fall into three main phases [8]. Understanding this allocation is vital for justifying investment in planning and tooling.

Figure 1: Illustrative Cost/Effort Allocation in Data Migration Projects (Based on [8, 61]). Note: Pre-migration often consumes the largest share of effort and planning time, setting the stage for downstream execution costs. Underinvestment here directly inflates execution and post-migration costs.


Phase 1: Pre-Migration Planning & Assessment Costs: The Foundation (Est. 50-70% of Effort/Planning Time)

This foundational phase is often underestimated in terms of required rigor but consumes the most significant portion of planning effort and time. Errors or shortcuts here invariably lead to exponential cost increases later, especially when dealing with complex file formats and logic.

- Strategic Planning & Impact Assessment: Defining unambiguous goals, scope boundaries, realistic timelines, and the overall data migration methodology (e.g., Big Bang vs. Phased). Critically, this includes a pre-migration impact assessment to validate assumptions and base cost estimates on concrete details [8, 27]. Lack of clarity here is a primary driver of the 30%+ average cost overruns seen in projects [15]. For file-based migrations, this means defining acceptable formats, required fields, and validation rules upfront.

- In-Depth Data Assessment & Profiling: This is arguably the most critical pre-migration activity [27]. It involves "landscape analysis"—deeply understanding the source data's structure, meaning, context, relationships, and, crucially, its quality [8]. This is especially vital for less-structured formats like CSV/Excel, where inconsistencies hide easily (e.g., varying date formats, inconsistent use of quotes), or complex nested JSON requiring specialized parsing. Utilizing automated data profiling tools and robust data cleaning software at this stage identifies duplicates, inconsistencies, and errors before they derail the migration, drastically reducing rework [1]. Quantifying data quality issues early helps justify the need for cleansing tools or transformation logic.

- Tool & Technology Stack Selection: Choosing appropriate data migration tools is paramount. This isn't just about moving data; it's about efficient transformation, validation, and management, especially when transformation logic is involved. Considerations include:

  - Support for specific source/target formats (e.g., a robust CSV importer with ETL capabilities, accurate XLS-to-CSV or JSON-to-XLSX conversion, handling of various delimiters and encodings).

  - Powerful and flexible transformation capabilities (visual builders for accessibility, scripting like Python for complex logic, handling lookups). This is where simple importers fail and dedicated data transformation software shines.

  - Integrated data validation automation features to catch errors before loading.

  - AI data mapping capabilities to accelerate setup and reduce manual mapping effort.

  - Scalability to handle data volumes without performance degradation.

  - API/Database connectivity options for lookups or pushing data post-transformation.

  Modern data transformation platforms or data onboarding platforms often provide significant advantages over outdated methods, custom scripts, or basic data import tools, especially for complex, repeatable requirements [8, 34]. Software license and subscription costs must be factored into the budget [8].

- Team Assembly & Role Definition: Building the right team with the necessary skills (source/target system knowledge, transformation logic expertise, data conversion experience) or engaging qualified external consultants ($100-$300+/hour [46]) is vital [8, 29]. Ensuring the team is proficient with the chosen migration software is key [29].

Quantitative Insight: Studies on storage migrations reveal that planning activities alone can account for 70-80% of the internal resource hours allocated per host [61]. While file-based migrations differ, this underscores the intensity required in the pre-migration phase to prevent costly downstream failures when dealing with complex data structures and transformations.
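The data assessment and profiling step described in this phase can be sketched in a few lines of Python. This is a minimal illustration using only the standard library, not a substitute for a dedicated profiling tool: the `guess_delimiter` heuristic, the `DATE_PATTERNS` table, and the sample file contents are all assumptions made for the example.

```python
import csv
import io
import re
from collections import Counter

# Hypothetical date patterns; extend for the formats seen in real client files.
DATE_PATTERNS = {
    "iso (YYYY-MM-DD)": re.compile(r"^\d{4}-\d{2}-\d{2}$"),
    "slash (NN/NN/YYYY)": re.compile(r"^\d{2}/\d{2}/\d{4}$"),
}


def guess_delimiter(header_line: str, candidates: str = ",;\t|") -> str:
    """Naive heuristic: pick the candidate that occurs most often in the header."""
    return max(candidates, key=header_line.count)


def classify_date(value: str) -> str:
    """Return the label of the first matching pattern, or 'unrecognized'."""
    for label, pattern in DATE_PATTERNS.items():
        if pattern.match(value):
            return label
    return "unrecognized"


def profile_csv(text: str, date_column: str) -> dict:
    """Return simple quality metrics for a CSV sample held in memory."""
    delimiter = guess_delimiter(text.splitlines()[0])
    rows = list(csv.reader(io.StringIO(text), delimiter=delimiter))
    header, records = rows[0], rows[1:]
    date_idx = header.index(date_column)

    missing = Counter()       # empty cells per column
    date_formats = Counter()  # which date pattern each non-empty value matches
    for rec in records:
        for name, cell in zip(header, rec):
            if not cell.strip():
                missing[name] += 1
        value = rec[date_idx].strip() if date_idx < len(rec) else ""
        if value:
            date_formats[classify_date(value)] += 1

    seen = Counter(tuple(rec) for rec in records)
    return {
        "delimiter": delimiter,
        "records": len(records),
        "missing_by_column": dict(missing),
        "duplicate_rows": sum(n - 1 for n in seen.values()),
        "ragged_rows": sum(1 for rec in records if len(rec) != len(header)),
        "date_formats": dict(date_formats),
    }


# Made-up sample: semicolon-delimited, one missing name, one duplicate row,
# and two different date formats in the same column.
sample = "id;name;signup_date\n1;Ada;2024-01-05\n2;;05/01/2024\n1;Ada;2024-01-05\n"
report = profile_csv(sample, date_column="signup_date")
print(report)
```

Counts like these give an early, quantified view of cleaning effort (missing values, duplicates, mixed date formats) before any transformation logic is written.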

Phase 2: Migration Execution Costs: ETL, Transformation & Validation (Est. 20-30% of Effort/Cost)

This is where the actual data movement and transformation occur, and where inadequate planning or tooling manifests as tangible costs and delays.

- ETL Processes (Extract, Transform, Load): This is the heart of the migration: extracting data from sources (files, APIs, DBs), applying transformations (cleansing, enrichment, applying business rules, mapping fields using data mapping tools), and loading it into the target system [8, 35]. The complexity here is a major cost driver. Handling intricate transformation logic within CSV/Excel files (e.g., conditional mapping based on multiple columns), accurately mapping nested JSON structures, or performing complex calculations significantly increases effort and requires sophisticated data transformation software. Tools offering reusable transformation templates, visual logic builders (such as no-code data transformation interfaces), and efficient processing engines are critical for controlling costs and ensuring consistency in repeatable data onboarding tasks.

- Software Licenses & Infrastructure Costs: Ongoing costs for migration platforms, ETL tools for data migration, specialized validation tools, security software, and cloud platform usage (compute, storage, potentially high data egress/transfer charges [36]) [8, 25]. Migrating to new systems, especially cloud platforms, may also necessitate core infrastructure upgrades (servers, network bandwidth) [8].

- Integrated Data Validation & Security: Implementing checks and balances during the ETL process using data validation automation ensures data integrity before loading. This is far more cost-effective than post-load cleanup, especially when complex business rules define validity. Simultaneously, implementing robust security measures (encryption in transit and at rest, access controls) to protect sensitive data and comply with regulations (GDPR, HIPAA, etc.) is a non-negotiable cost factor [8, 37].

- Downtime Costs & Mitigation Efforts: Planning and executing the migration cutover to minimize business disruption. This often involves scheduling during off-peak hours (requiring overtime or specialized scheduling) or implementing strategies that allow for near-zero downtime [8, 26]. The direct and indirect costs of unplanned downtime can be astronomical (detailed below), making efficient, reliable execution paramount.
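The idea of validating during ETL, before anything is loaded, can be sketched as a set of small rule functions applied to each row. The field names (`customer_id`, `amount`) and the two rules are hypothetical; a real project would define its own rules and would usually rely on the validation features of its chosen tool.

```python
from typing import Callable, Optional

Row = dict[str, str]
# A rule returns an error message, or None when the row passes.
Rule = Callable[[Row], Optional[str]]


def require(field: str) -> Rule:
    """Rule factory: the field must be present and non-blank."""
    def check(row: Row) -> Optional[str]:
        return None if row.get(field, "").strip() else f"{field} is required"
    return check


def positive_amount(field: str) -> Rule:
    """Rule factory: the field must parse as a number greater than zero."""
    def check(row: Row) -> Optional[str]:
        try:
            ok = float(row.get(field, "")) > 0
        except ValueError:
            ok = False
        return None if ok else f"{field} must be a positive number"
    return check


def validate(rows: list[Row], rules: list[Rule]):
    """Split rows into loadable rows and rejected rows with their error lists."""
    valid, rejected = [], []
    for row in rows:
        errors = [msg for rule in rules if (msg := rule(row)) is not None]
        if errors:
            rejected.append((row, errors))
        else:
            valid.append(row)
    return valid, rejected


# Made-up input rows for illustration.
rows = [
    {"customer_id": "C1", "amount": "120.50"},
    {"customer_id": "", "amount": "-5"},
    {"customer_id": "C3", "amount": "abc"},
]
valid, rejected = validate(rows, [require("customer_id"), positive_amount("amount")])
for row, errors in rejected:
    print(row, errors)
```

Only the rows in `valid` would go on to the load step; the rejected rows and their messages form an audit trail that can be returned to the data owner for correction.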

Phase 3: Post-Migration Costs: Validation, Testing & Long-Tail Expenses (Est. 10-20% of Effort/Cost)

The project doesn't end once the data is loaded. Ensuring the migration delivered value and the new system is stable involves ongoing costs.

- Rigorous Validation & Testing: Comprehensive testing beyond basic checks is essential. This includes unit testing transformations, integration testing with other systems, User Acceptance Testing (UAT), performance testing, and data reconciliation to confirm accuracy and completeness [8]. Insufficient testing, especially of complex business logic applied during transformation, is a common cause of post-migration problems and hidden costs. Tools with strong, auditable validation reporting are invaluable here.

- Ongoing System Maintenance & Support: Budgeting for the continuous costs of maintaining the new platform, including software updates, patches, bug fixes, performance tuning, and technical support [8, 25]. If the migration involved custom scripts, maintaining them becomes an ongoing burden.

- User Training & Change Management: Effective training is crucial for user adoption and realizing the anticipated benefits of the new system [8, 44]. Underestimating change management leads to low ROI.

- Addressing Hidden Costs & Integration Challenges: Contingency planning is vital. Unexpected issues frequently arise, such as integrating the new system with other legacy applications or third-party services via APIs, requiring additional development or configuration [8, 46, 41]. Ensuring the chosen data migration tools offer flexible API and database connectivity can mitigate some of these integration surprises. A contingency budget of 10-20% is typically recommended [8].
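As a rough planning aid, the phase shares quoted in the three phase headings and the 10-20% contingency recommendation can be turned into a small calculation. The 1,000-hour effort and $200,000 base budget below are hypothetical inputs, not figures from the cited sources.

```python
# Effort shares per phase, in percent, taken from the phase headings above.
PHASE_SHARE_PCT = {
    "pre-migration": (50, 70),
    "execution": (20, 30),
    "post-migration": (10, 20),
}
CONTINGENCY_PCT = (10, 20)  # recommended contingency range


def phase_hours(total_hours: float) -> dict:
    """Low/high hours per phase for a given total effort estimate."""
    return {
        phase: (total_hours * lo / 100, total_hours * hi / 100)
        for phase, (lo, hi) in PHASE_SHARE_PCT.items()
    }


def budget_with_contingency(base_budget: float) -> tuple:
    """Low/high total budget after adding the contingency reserve."""
    lo, hi = CONTINGENCY_PCT
    return base_budget * (100 + lo) / 100, base_budget * (100 + hi) / 100


hours = phase_hours(1_000)                  # hypothetical 1,000-hour project
reserve = budget_with_contingency(200_000)  # hypothetical $200,000 base budget
print(hours)    # pre-migration: (500.0, 700.0), execution: (200.0, 300.0), ...
print(reserve)  # (220000.0, 240000.0)
```

Because the phase ranges overlap (their upper ends add up to 120%), the high figures cannot all apply to the same project at once; treat each range as a sanity check on a bottom-up estimate rather than a formula.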

Unpacking Data Migration Cost Drivers: Volume, Complexity, and Transformation Rules

Understanding the variables that inflate costs allows implementation teams and consultants to better anticipate challenges, select appropriate tools, and budget accordingly:

- Data Volume vs. Data Complexity: While large volumes increase transfer times and infrastructure needs [3, 47], complexity is often the more significant cost driver, especially in file-based migrations handled by implementation teams. Migrating terabytes of simple, clean, flat files can be less expensive than migrating megabytes of poorly structured, inconsistent CSV/Excel data or deeply nested JSON requiring intricate parsing and transformation logic [47, 36]. Each unique transformation rule, validation check, mapping exception, or required data conversion (e.g., XLS to CSV, JSON to XLSX) adds significant development, testing, and potential debugging effort, especially if done manually or with inadequate tools.

- Source System Constraints & Target Platform Demands: Migrating from archaic, poorly documented legacy systems often requires specialized (and expensive) expertise and involves significant reverse-engineering effort [8, 48]. Even with files, understanding implicit business rules embedded in legacy export formats is challenging. Compatibility issues between source data formats and target system requirements (e.g., strict schemas, API protocols) dictate transformation needs and complexity [19].

- Transformation Logic Intensity: The sheer number and sophistication of business rules that must be embedded within the migration process heavily influence cost. Simple one-to-one data mapping is relatively straightforward. However, conditional logic (IF/THEN/ELSE based on multiple fields), data enrichment via lookups (potentially requiring real-time API or database calls using features like 'remotelookup'), complex calculations, data standardization (e.g., addresses, phone numbers), and format conversions demand powerful, flexible data transformation software. Tools supporting both visual configuration and scripting (like Python) offer the best balance, significantly reducing development and testing time compared to pure coding or overly simplistic interfaces.

- Integration Web: The need to connect the migration process or the target system with other enterprise applications (CRMs, ERPs, data warehouses, external APIs) introduces significant complexity and cost [19, 47, 41]. Each integration point requires design, development, testing, and ongoing maintenance. Tools with pre-built connectors or robust, configurable API/DB interaction capabilities (for both pulling lookup data and pushing results) can substantially reduce this burden.

- Data Quality Baseline & Validation Rigor: Starting with poor quality source data necessitates substantial investment in data cleaning software and manual remediation efforts, often discovered late in the process [1]. Furthermore, complex customer data validation requirements (e.g., verifying addresses against external services, checking for business rule consistency across records, ensuring referential integrity against target system data via lookups) demand sophisticated data validation automation capabilities within the migration workflow, adding to development and execution time. The ability to build custom validation rules easily is critical.

- Migration Strategy (Big Bang vs. Phased): As detailed later, the fundamental approach chosen has profound implications for cost, risk profile, required downtime, and the speed at which ROI is realized [12].

- Resource Model (Internal Expertise vs. External Consultants): Leveraging internal teams may have lower direct hourly costs but can lead to significant delays and errors if specialized skills (e.g., complex legacy conversion, AI data mapping, specific migration software expertise) are lacking. Engaging external consultants provides targeted expertise but adds direct costs, often ranging from $100-$300+ per hour depending on the required skill level [8, 46, 47]. Efficient tooling can empower internal teams and reduce reliance on expensive external resources.
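To make "transformation logic intensity" concrete, here is a minimal sketch of one row-level rule that combines a code mapping, a lookup-based enrichment, conditional logic, and a derived field. All reference data, field names, and the discount rule are invented for illustration; the in-memory dictionaries stand in for whatever API or database lookup a real migration would use.

```python
from decimal import Decimal

# Hypothetical reference data standing in for an API or database lookup.
TIER_BY_CUSTOMER = {"C1": "gold", "C2": "standard"}
# Hypothetical mapping from legacy transaction codes to target types.
TXN_TYPE_MAP = {"PUR": "purchase", "REF": "refund"}
# Hypothetical business rule: discount percentage per client tier.
DISCOUNT_PCT_BY_TIER = {"gold": 10, "standard": 0}


def transform(row: dict) -> dict:
    """Apply mapping, enrichment, and conditional logic to one source row."""
    # Enrichment via lookup; unknown customers default to the standard tier.
    tier = TIER_BY_CUSTOMER.get(row["customer_id"], "standard")
    # Code mapping with light standardization of the legacy value.
    txn_type = TXN_TYPE_MAP.get(row["type"].strip().upper(), "unknown")
    amount = Decimal(row["amount"])

    # Conditional rule: refunds are stored as negative amounts,
    # and only purchases receive the tier discount.
    if txn_type == "refund":
        net = -amount
    elif txn_type == "purchase":
        net = amount * (100 - DISCOUNT_PCT_BY_TIER[tier]) / 100
    else:
        net = amount

    return {
        "customer_id": row["customer_id"],
        "tier": tier,
        "type": txn_type,
        "net_amount": str(net.quantize(Decimal("0.01"))),
    }


purchase = transform({"customer_id": "C1", "type": "pur ", "amount": "200.00"})
refund = transform({"customer_id": "C9", "type": "REF", "amount": "50"})
print(purchase)  # net_amount is "180.00" for a gold-tier purchase of 200.00
print(refund)    # net_amount is "-50.00"; unknown customer falls back to "standard"
```

Even this small rule has three branches and two lookups to test, which is why each additional rule of this kind adds noticeable development and validation effort.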

Quantifying the Financial Fallout: A Statistical Reality Check for Implementation Projects

The high rates of failure and overruns aren't just statistics; they represent significant, often crippling, financial consequences that directly impact implementation project budgets and timelines.

The High Cost of Data Migration Overruns and Failures

| Metric | Average Percentage Range | Key Source(s) | Core Implication for Implementation Teams Handling Complex Files |
|---|---|---|---|
| Budget Overrun | 14% - 30%+ | [2, 3, 20] | Expect costs to exceed initial estimates; robust contingency is essential, especially when transformation logic is complex. |
| Schedule Overrun | 30% - 41%+ | [2] | Timelines are frequently underestimated; buffer adequately for data cleaning, logic development, and validation cycles. |
| Project Failure/Shortfall | 30% - 83% | [1, 2, 3] | High risk of not meeting objectives; meticulous planning, robust tooling & thorough validation needed. |

Table 1: Common Data Migration Project Overruns and Failures – Financial & Temporal Impact

Why this happens in file-based onboarding: Errors discovered late—often stemming from inadequate initial data assessment (not catching format variations in CSV/Excel), underestimated transformation complexity (complex business rules requiring custom code), or insufficient validation [1]—are exponentially more expensive to fix than those caught early. This rework directly inflates budgets and pushes out timelines. Investing heavily in upfront analysis, utilizing data cleaning software or transformation tools with cleaning capabilities, and implementing continuous data validation automation throughout the process are critical cost containment strategies. Manual processes amplify these risks.
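One simple way to use the ranges in Table 1 is to stress-test a baseline estimate against them. The sketch below applies the average budget and schedule overrun ranges to a hypothetical $100,000, 20-week plan; the baseline figures are assumptions for illustration only.

```python
# Average overrun ranges from Table 1, in percent.
BUDGET_OVERRUN_PCT = (14, 30)
SCHEDULE_OVERRUN_PCT = (30, 41)


def stress(baseline: float, pct_range: tuple) -> tuple:
    """Return the baseline inflated by the low and high end of an overrun range."""
    lo, hi = pct_range
    return baseline * (100 + lo) / 100, baseline * (100 + hi) / 100


budget = stress(100_000, BUDGET_OVERRUN_PCT)  # hypothetical $100,000 budget
schedule = stress(20, SCHEDULE_OVERRUN_PCT)   # hypothetical 20-week timeline
print(budget)    # (114000.0, 130000.0)
print(schedule)  # (26.0, 28.2)
```

If the stressed figures would be unacceptable to the business, that is a signal to invest more in upfront assessment and validation rather than simply padding the plan.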

Calculating the Opportunity Costs of Delayed Data Migration & Onboarding

Project delays don't just increase direct migration costs; they create a significant opportunity cost by postponing the benefits of the new system (e.g., enhanced analytics, improved operational efficiency, faster client onboarding) and prolonging the inefficiencies and maintenance costs of the legacy system or manual processes [9, 10]. This cost of delay can sometimes exceed the direct migration budget itself, especially when client onboarding is stalled.

| Scenario Example | Estimated Monthly Benefit/Saving | Delay Duration | Estimated Opportunity Cost | Potential Source(s) |
|---|---|---|---|---|
| Delayed Go-Live for New CRM/Asset Mgmt System | $50,000 Revenue/Efficiency Gain | 3 Months | $150,000 | [cf. 64, 10] |
| Continued Manual Data Entry/Correction Effort | $10,000 Labor Cost/Month | 6 Months | $60,000 | [cf. 41, 9] |
| Missed Client Onboarding due to Slow Process | $20,000 Revenue/Client/Month | 4 Months Delay | $80,000 (per client) | [cf. 63, 10] |

Table 2: Illustrative Examples of Quantifying Opportunity Costs from Migration Delays

Implication: Choosing an efficient data migration methodology and using data migration tools that accelerate the entire data onboarding process (through features like reusable logic templates, AI data mapping, parallel processing, efficient validation cycles, and streamlined error handling) directly minimizes these opportunity costs and speeds up the realization of business value. Faster, more reliable onboarding translates directly to faster revenue.
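The figures in Table 2 follow from a single multiplication: the monthly benefit or saving forgone, times the number of months of delay. A minimal sketch that reproduces the three illustrative rows (the scenario labels are shortened versions of the table entries):

```python
def opportunity_cost(monthly_value: float, delay_months: float) -> float:
    """Value forgone while a monthly benefit or saving is postponed."""
    return monthly_value * delay_months


# (monthly benefit or saving in USD, delay in months), as in Table 2.
scenarios = {
    "Delayed CRM go-live": (50_000, 3),
    "Continued manual data entry": (10_000, 6),
    "Missed client onboarding (per client)": (20_000, 4),
}
costs = {name: opportunity_cost(v, m) for name, (v, m) in scenarios.items()}
for name, cost in costs.items():
    print(f"{name}: ${cost:,}")
```

This linear model ignores ramp-up effects and discounting, so it is best read as a first-order estimate for comparing delay scenarios.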

Estimating Data Migration Service Costs (Consultants & Implementation)

Engaging external expertise often involves substantial costs, heavily influenced by data volume, transformation complexity, integration needs, and the required level of expertise. While project-specific, these illustrative ranges provide context, particularly relevant when considering consultant-led migrations:

| Project Size | Est. Data Volume | Est. Implementation Hours | Est. Cost Range (Services Only) | Key Influencing Factors & Sources |
|---|---|---|---|---|
| Small | < 10k records | 10-50 hours | $2,000 - $15,000 | Simple mapping, basic validation, minimal logic [cf. 47, 71, 43] |
| Medium | 10k - 100k records | 50-200 hours | $15,000 - $60,000 | Moderate complexity (CSV/Excel), some business logic, standard integrations [cf. 47, 71, 46] |
| Large | > 100k records | 200+ hours | $60,000+ | High complexity (nested JSON, intricate logic), extensive validation, custom integrations [cf. 47, 71, 46] |

(Note: Based on typical consultant rates ($100-$300+/hour [46, 43]). Excludes data migration software license costs, which can also be significant.)

Table 3: Illustrative Implementation Service Cost Ranges Based on Complexity and Scale

Implication: Precise scope definition is critical. Utilizing efficient data onboarding platforms or ETL tools for data migration that empower internal teams with features like visual builders and AI data mapping tools, or that streamline consultant tasks by providing reusable components and robust validation, can significantly optimize these service costs. The goal is to reduce the hours required, regardless of who performs the work.
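For a quick cross-check of a services quote, the widest plausible envelope is the lowest hours at the lowest rate up to the highest hours at the highest rate. The sketch below assumes the $100-$300 hourly range from the note above and the hour ranges from Table 3; the function name is illustrative.

```python
RATE_RANGE = (100, 300)  # USD per hour, from the consultant rates quoted above


def service_cost_envelope(hours_range: tuple, rate_range: tuple = RATE_RANGE) -> tuple:
    """Lowest hours at the lowest rate up to highest hours at the highest rate."""
    (h_lo, h_hi), (r_lo, r_hi) = hours_range, rate_range
    return h_lo * r_lo, h_hi * r_hi


small = service_cost_envelope((10, 50))    # (1000, 15000)
medium = service_cost_envelope((50, 200))  # (5000, 60000)
print(small, medium)
```

The upper ends match Table 3, while the table's lower bounds sit above this simple product, which suggests that the cheapest rate rarely coincides with the fewest hours. Both should be treated as rough guides rather than quotes.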

The Crippling Financial Impact of Data Migration Downtime

Perhaps the most dramatic cost is associated with unplanned downtime during the migration cutover. This disrupts operations, halts revenue generation, kills productivity, and can severely damage customer trust and brand reputation [8]. While full system downtime might be less common in phased file onboarding, errors leading to data unavailability or process halts have similar impacts.

| Company Size | Average Cost Per MINUTE of Downtime | Est. Downtime/Disruption (Hours) | Potential Direct Financial Cost Range | Key Source(s) | Indirect Costs (Not Included) |
|---|---|---|---|---|---|
| Small | $137 - $427 | 1-4 | $8,220 - $102,480 | [40] | Reputational damage, customer churn, recovery effort |
| Medium/Large | $5,600 - $9,000+ | 1-4 | $336,000 - $2,160,000+ | [40, 22] | Compliance penalties, stock price impact, competitive disadvantage |

(Note: Costs per minute can be significantly higher in sectors like finance, e-commerce, and healthcare [22, 38])

Table 4: The Astronomical Financial Impact of Data Downtime/Disruption During Migration

Implication: Minimizing disruption is paramount. This requires meticulous planning, selecting the appropriate migration strategy (often phased or iterative for onboarding), and employing tools and techniques (like robust validation before loading, delta processing, or near real-time replication where applicable) designed for minimal disruption and fast error resolution.
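The direct cost ranges in Table 4 come from multiplying the per-minute cost by the minutes of disruption. A minimal sketch that reproduces both rows, pairing the low per-minute cost with the shortest outage and the high cost with the longest:

```python
def downtime_cost(cost_per_minute_range: tuple, hours_range: tuple) -> tuple:
    """Direct cost range: per-minute cost x 60 minutes x hours of disruption."""
    (c_lo, c_hi), (h_lo, h_hi) = cost_per_minute_range, hours_range
    return c_lo * 60 * h_lo, c_hi * 60 * h_hi


small = downtime_cost((137, 427), (1, 4))      # (8220, 102480)
large = downtime_cost((5_600, 9_000), (1, 4))  # (336000, 2160000)
print(small, large)
```

Indirect costs such as churn or compliance penalties are not modeled here, so real exposure is likely higher than these direct figures.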

Choosing Your Data Migration Methodology: Cost, Risk & ROI Comparison

The fundamental strategy chosen for the migration cutover profoundly impacts cost, risk exposure, downtime requirements, and the timeline for achieving return on investment [12]. While often discussed for large system migrations, the principles apply to data onboarding strategies as well.

Figure 2: Comparison of Data Migration/Onboarding Strategies (Adapted from [12, 13, 28, 53])


- All-at-Once ("Big Bang"):

  - Concept: Migrate/onboard everything within a single, short cutover window.

  - Pros: Potentially the fastest timeline and lowest direct cost if everything executes flawlessly; immediate availability of the full new system/data set [12, 13].

  - Cons: Extremely high risk: a single major issue (e.g., a flaw in transformation logic affecting many records) can cause catastrophic failure and extended, costly downtime or data corruption; requires intensive resource mobilization; rollback is often complex and difficult; insufficient time for thorough parallel testing [12, 13]. Generally unsuitable for complex migrations or mission-critical systems where data integrity is paramount.

- Phased Migration (Module-by-Module, Subset-by-Subset):

  - Concept: Migrate/onboard the system/data in logical, manageable chunks over time. Old and new systems/processes often run in parallel.

  - Pros: Significantly lower risk due to incremental changes; allows for thorough testing and validation of each phase; enables minimal or even zero downtime; facilitates gradual user adaptation and training [12, 13].

  - Cons: Longer overall project duration; potentially higher total cost due to extended parallel system maintenance, the need for temporary data synchronization mechanisms, and prolonged project management overhead [13]. Full ROI is realized more slowly.

- Hybrid / Iterative Approaches (Tool-Optimized):

  - Concept: Combines elements of both, heavily leveraging modern tooling. Examples include phased rollout by client type or data source, or iterative batch processing of files using reusable transformation logic and validation rules [28, 53].

  - Pros: Aims to balance the speed/cost benefits of Big Bang with the risk mitigation of Phased; highly adaptable to specific project needs. Modern ETL tools for data migration and data onboarding platforms that support reusable transformation artifacts (mappings, logic, validation rules), efficient delta/incremental processing, and robust automation can make phased and iterative approaches significantly more cost-effective and faster than traditional methods.

  - Cons: Success heavily relies on the capabilities and effective use of the chosen data migration tools. Requires discipline in defining reusable components.

Implication for Implementation Teams: The optimal strategy is context-dependent. It requires a careful assessment of the organization's risk tolerance, the complexity of the data and transformations, the business impact of errors or delays, and, critically, the capabilities of the available migration software. For complex data onboarding involving diverse formats (CSV/Excel/JSON) and critical business logic, tool-assisted phased or iterative strategies often provide the most pragmatic balance of controlled risk, efficient delivery, and faster time-to-value.

A Practical Framework for Estimating Your Data Migration Costs (Focusing on File-Based Onboarding)

While no universal formula exists due to project uniqueness, implementation teams and consultants can use this structured framework to develop more realistic and defensible cost estimates, particularly for file-based data onboarding and migration:

- Define Scope & Objectives Rigorously:

  - Goals: What defines success? (e.g., successfully onboard X clients per month, migrate Y legacy files, achieve Z% data accuracy).

  - Sources: Identify all expected source file formats (CSV variants, specific Excel structures, JSON schemas, APIs) and target systems/formats.

  - Boundaries: What's in scope vs. out of scope? (e.g., historical data depth, specific data domains, level of data cleansing expected).

- Conduct Deep Data Assessment (Per Source Type):

  - Volume Metrics: Avg/Max number of records/files per source, total data size (GB/TB).

  - Complexity Rating (Low/Medium/High): Assess structure (flat vs. nested/relational), number of distinct file layouts, known relationships. Pay special attention to CSV/Excel inconsistencies (header variations, delimiters, encoding) and JSON depth/variability.

  - Quality Baseline (Good/Fair/Poor): Use profiling tools (or manual inspection for samples) to quantify completeness, accuracy, consistency, duplicates, format variations. Estimate required cleaning/remediation effort.

- Analyze Transformation & Validation Needs:

  - Mapping Complexity: Estimate % of fields needing simple 1-to-1 vs. complex logic (conditional, lookups, calculations, splits/merges). Use data mapping automation tools if available.

  - Transformation Logic Intensity (Low/Medium/High): Categorize the sophistication of required business rules. Does it require basic formulas, moderate conditional flows, or complex custom scripting (e.g., Python) and external API/DB calls (e.g., for enrichment or reference data)? How many unique, complex rules are there?

  - Validation Rigor (Low/Medium/High): Define necessary checks (data type/format, range, uniqueness, referential integrity, cross-record consistency, external lookups). How many custom validation rules are needed? Estimate complexity of building these rules.

- Evaluate Tooling & Infrastructure Requirements:

  - Identify necessary software categories: ETL tools for data migration, data transformation software, data validation automation tools, data mapping tools, data cleaning software, or an integrated data onboarding platform. Does the tool handle the required complexity and formats?

  - Estimate license/subscription costs based on usage/volume/features/users. Compare build vs. buy for specific functionalities.

  - Assess infrastructure needs (compute power, storage, network bandwidth, cloud service costs) and associated setup/running costs.

- Plan Resources & Estimate Effort (Iterative):

  - Break down the project into phases/key tasks (Planning, Design, Development per source/target, Testing, Deployment, Post-Go-Live Support).

  - Estimate person-hours/days required for each task based on complexity assessments (e.g., hours per complex transformation rule, hours per validation rule). Factor in reuse potential with good tooling.

  - Determine team mix (internal SMEs, developers, testers, external consultants) and apply appropriate loaded hourly rates. Include project management overhead (typically 10-15%).

- Factor in Risk & Allocate Contingency:

- Identify key risks (e.g., unexpected data quality issues in new files, underestimated transformation complexity, integration failures, scope creep, key personnel availability, target system changes).

- Assign impact/likelihood scores.

- Allocate a contingency budget (typically 10-25% of the base estimate, depending on risk assessment and complexity) to cover unforeseen issues.
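The effort, overhead, and contingency steps above reduce to simple arithmetic once the per-task inputs are agreed. The following is a minimal sketch of that roll-up, not a calibrated model: the `Task` structure, the `estimate_cost` function, and every number in the example (unit counts, hours per unit, rates, tooling cost) are hypothetical placeholders, and only the 10-15% project management and 10-25% contingency ranges come from the framework itself.

```python
from dataclasses import dataclass


@dataclass
class Task:
    """One line item of the bottom-up estimate (all values are assumptions)."""
    name: str
    units: int             # e.g. number of transformation or validation rules
    hours_per_unit: float  # assumed effort per unit, from the complexity rating
    hourly_rate: float     # loaded hourly rate for whoever does the work


def estimate_cost(tasks, tooling_cost=0.0, pm_overhead=0.15, contingency=0.20):
    """Roll task effort up into a total, adding PM overhead and contingency.

    pm_overhead is applied to labor only; contingency is applied to the
    base estimate (labor + PM overhead + tooling), as in the framework.
    """
    labor = sum(t.units * t.hours_per_unit * t.hourly_rate for t in tasks)
    pm = labor * pm_overhead
    base = labor + pm + tooling_cost
    reserve = base * contingency
    return {
        "labor": round(labor, 2),
        "pm_overhead": round(pm, 2),
        "tooling": round(tooling_cost, 2),
        "base": round(base, 2),
        "contingency": round(reserve, 2),
        "total": round(base + reserve, 2),
    }


# Hypothetical inputs for a single file-based onboarding project.
example_tasks = [
    Task("complex transformation rules", units=20, hours_per_unit=6.0, hourly_rate=100.0),
    Task("validation rules", units=30, hours_per_unit=2.0, hourly_rate=100.0),
    Task("testing and UAT", units=1, hours_per_unit=80.0, hourly_rate=90.0),
]
example = estimate_cost(example_tasks, tooling_cost=5000.0,
                        pm_overhead=0.10, contingency=0.20)
for label, amount in example.items():
    print(f"{label:>12}: {amount:,.2f}")
```

Keeping each task as its own line item makes it easy to rerun the estimate when a complexity rating changes, or to show stakeholders how much of the total is contingency rather than planned work.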

Benefit: This detailed, bottom-up approach forces consideration of all major cost drivers specific to complex file migrations, leading to far more accurate and justifiable estimates than high-level guesses based purely on data volume or generic project types.
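An estimate of this kind is only as good as the data assessment behind it. As a rough illustration of the quality baseline, the sketch below profiles a small CSV sample for per-column completeness and exact duplicate rows using only the Python standard library. The `profile_csv` function and the sample columns are hypothetical; a dedicated profiling tool would cover far more (accuracy, consistency, format variations).

```python
import csv
import io
from collections import Counter


def profile_csv(text, delimiter=","):
    """Return row count, per-column completeness, and duplicate-row count."""
    reader = csv.DictReader(io.StringIO(text), delimiter=delimiter)
    rows = list(reader)
    columns = reader.fieldnames or []
    total = len(rows)

    # Completeness: share of rows where the column holds a non-blank value.
    completeness = {}
    for col in columns:
        filled = sum(1 for r in rows if (r.get(col) or "").strip())
        completeness[col] = filled / total if total else 0.0

    # Duplicates: rows whose trimmed values all match an earlier row.
    seen = Counter(
        tuple((r.get(c) or "").strip() for c in columns) for r in rows
    )
    duplicate_rows = sum(n - 1 for n in seen.values() if n > 1)

    return {
        "rows": total,
        "completeness": completeness,
        "duplicate_rows": duplicate_rows,
    }


# Hypothetical sample with one blank email, one blank date, one repeated row.
SAMPLE = """id,email,signup_date
1,a@example.com,2024-01-05
2,,2024-02-10
3,c@example.com,
1,a@example.com,2024-01-05
"""

report = profile_csv(SAMPLE)
print(report)
```

Running this on a sample from each source type gives a first, defensible input for the Good/Fair/Poor rating before any cleaning effort is estimated.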


Proactive Strategies and Tools for Mitigating Costs in Complex Data Migration & Onboarding

While data migration, especially involving complex transformations, inherently involves risks, implementation teams can significantly reduce costs and improve success rates through proactive strategies and leveraging modern technology:

- Invest Disproportionately in Upfront Planning & Assessment: This cannot be overstated. Thoroughly understanding the source data (using profiling tools), meticulously defining scope, transformation rules, and validation requirements, and resisting scope creep are the most effective ways to prevent downstream disasters [1, 8]. Run proof-of-concepts for complex transformations or tool evaluations early. Define data onboarding best practices for your projects.

- Make Data Quality a Prerequisite, Not an Afterthought: Implement data governance practices and perform data cleansing before or during the transformation process. Utilize data cleaning software or migration tools with strong built-in cleansing and standardization capabilities [1]. Fixing data issues systematically within the tool is typically far cheaper than manual post-migration cleanup or dealing with load failures.

- Embrace Modern, Flexible Migration Tooling: Move beyond manual scripting, complex Excel macros, or basic import wizards for anything beyond trivial migrations. Employ capable ETL tools for data migration, dedicated data transformation software, or comprehensive data onboarding platforms. Prioritize tools that offer:

- Automation: Features like AI data mapping suggestions or AI-assisted logic generation can reduce manual effort and mapping errors.

- Flexibility: Support for diverse data formats (CSV variants, Excel sheets, complex/nested JSON, APIs, databases) and for more than one transformation approach: visual no-code transformation builders for routine mappings, plus scripting (such as Python) for complex edge cases [8]. The ability to handle real-world data imperfections is key.

- Integrated Validation: Robust data validation automation capabilities that can be configured and executed within the transformation workflow, providing immediate feedback and clear error reporting.

- Reusability: The ability to create and reuse mapping templates, transformation logic components (functions, snippets), and validation rule sets accelerates development for subsequent projects or recurring onboarding tasks and ensures consistency.

- Connectivity: Seamless integration with various source/target systems via built-in connectors or configurable API/DB interfaces for lookups or data pushing.

- Implement Continuous, Integrated Testing & Validation: Don't wait until the end. Test transformations incrementally (unit testing logic components), validate data outputs at each stage against defined rules, perform integration testing early and often (e.g., test API lookups), conduct thorough UAT with business users on sample outputs, and execute performance/load testing where applicable [8]. Tools with embedded validation and clear, actionable error reporting streamline this critical activity.

- Select the Optimal Migration Strategy: Consciously choose the approach (Big Bang, Phased, Hybrid/Iterative) that best aligns with the project's risk profile, complexity, and business constraints. Critically evaluate how the chosen migration software can optimize the selected strategy (e.g., enabling efficient reusable components and validation for a phased/iterative approach) [12].

- Maintain Realistic Contingency Buffers: Acknowledge that unforeseen issues will arise, especially with client data or complex logic. Secure stakeholder buy-in for a contingency budget (10-25%) to handle unexpected complexities, integration hurdles, or data quality surprises without derailing the project [8, 46].
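The integrated validation and incremental testing points above can be illustrated with a small rule-based validator that reports each failure by row and field. This is a sketch under stated assumptions, not the behavior of any particular migration tool: the field names, the rule set, the deliberately loose email pattern, and the `validate_records` function are all hypothetical.

```python
import re
from datetime import datetime

# Loose shape check only; real email validation is stricter (assumption).
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def is_calendar_date(value):
    """True if value parses as a real date in year-month-day order."""
    try:
        datetime.strptime(value, "%Y-%m-%d")
        return True
    except ValueError:
        return False


def is_positive_int(value):
    """True for ASCII digit strings that represent an integer above zero."""
    return value.isascii() and value.isdigit() and int(value) > 0


# Each rule: (field, message shown on failure, check function).
RULES = [
    ("id", "must be a positive integer", is_positive_int),
    ("email", "must look like an email address", lambda v: bool(EMAIL_RE.match(v))),
    ("signup_date", "must be a valid date (YYYY-MM-DD)", is_calendar_date),
]


def validate_records(records, rules=RULES, unique_field="id"):
    """Apply field rules and a uniqueness check; return (row, field, message)."""
    errors = []
    seen = set()
    for row_number, record in enumerate(records, start=1):
        for field, message, check in rules:
            value = (record.get(field) or "").strip()
            if not check(value):
                errors.append((row_number, field, message))
        key = (record.get(unique_field) or "").strip()
        if key:  # blank keys are already reported by the field rule
            if key in seen:
                errors.append((row_number, unique_field, "duplicate value"))
            seen.add(key)
    return errors


# Hypothetical records: row 2 has two bad fields, row 3 repeats an id.
sample_records = [
    {"id": "1", "email": "a@example.com", "signup_date": "2024-01-05"},
    {"id": "2", "email": "not-an-email", "signup_date": "2024-02-30"},
    {"id": "1", "email": "b@example.com", "signup_date": "2024-03-01"},
]
for row, field, message in validate_records(sample_records):
    print(f"row {row}: {field} {message}")
```

Because each rule is a plain function, it can be unit tested on its own before being wired into a transformation workflow, which is the incremental testing pattern the list recommends.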

Conclusion: Transforming Data Migration from High-Risk Gamble to Predictable Value Driver

Data migration, particularly the complex data onboarding and legacy conversion projects faced by implementation teams and consultants, remains a challenging and often underestimated undertaking. The quantitative evidence points to four recurring causes: inadequate planning, unaddressed data quality issues in source files (CSV, Excel, JSON), the inherent complexity of translating business logic into transformations, and the severe financial impact of downtime or errors. Together they contribute heavily to the 30-83% of projects cited as failing or significantly missing their budget, schedule, or scope targets [1, 2, 3]. These costs are not abstract; they represent real financial pain in terms of wasted effort, delayed revenue, and damaged client relationships.

However, achieving cost-effective, scalable, and predictable data migration is not an insurmountable challenge. It requires a fundamental shift away from reactive firefighting towards proactive, data-informed planning and execution, critically enabled by modern technology. For implementation teams, data onboarding specialists, and consultants dealing with the intricacies of file-based data and complex business rules, success hinges on:

- Quantifying Ruthlessly: Moving beyond assumptions to accurately assess data volume, complexity, quality, and the true scope of transformation and validation requirements before committing to timelines and budgets. Understanding the cost drivers detailed in this analysis is the first step.

- Strategic Tooling Investment: Selecting and mastering modern data transformation platforms, ETL tools, or data migration software that provide efficiency through automation (AI data mapping, reusable logic), flexibility for diverse data and complex logic (visual builders plus code), robust integrated data validation automation, and seamless connectivity. The right data onboarding solution or data import tool becomes a force multiplier, directly mitigating the quantified costs of manual effort, errors, and delays.

- Methodical & Iterative Execution: Adopting a suitable data migration methodology (often tool-assisted iterative for onboarding), emphasizing continuous testing and validation throughout the data migration process, and leveraging tools that support efficient, low-risk deployment strategies.

- Minimizing Business Disruption: Prioritizing approaches and technologies that drastically reduce or eliminate costly operational downtime and accelerate the delivery of business value through faster, more reliable data availability.

By embracing a quantitative understanding of the true costs outlined here, rigorously applying best practices in planning and execution, and strategically leveraging appropriate, modern migration software, organizations and their implementation partners can navigate the inherent complexities. They can transform data migration from a high-stakes gamble plagued by hidden costs into a predictable, efficient, and value-driving engine for strategic change and successful client onboarding.

Works Cited (Referenced in this Analysis)

- Why data migration projects fail: Common causes and effective ..., accessed April 22, 2025, https://www.confiz.com/blog/why-data-migration-projects-fail-common-causes-and-effective-solutions/

- 4 Types of Data Migration, Risks and Process - SyncMatters, accessed April 22, 2025, https://syncmatters.com/blog/4-types-of-data-migration (Note: Also cites Gartner & Bloor Group findings)

- Top 10 Data Migration Challenges in 2025 (Solutions Added) - Forbytes, accessed April 22, 2025, https://forbytes.com/blog/common-data-migration-challenges/ (Note: Also cites Oracle findings)

- Data migration cost in 2025: An overview to key parameters - Kellton, accessed April 22, 2025, https://www.kellton.com/kellton-tech-blog/data-migration-cost

- The Hidden Costs of Legacy Systems: Migrate Now, Save Later ..., accessed April 22, 2025, https://www.alation.com/blog/hidden-costs-legacy-systems-migrate-now/

- Delayed Opportunity Costs and Pricing Software: What Do You Have to Lose? - Canidium, accessed April 22, 2025, https://www.canidium.com/blog/delayed-opportunity-costs-and-pricing-software?hsLang=en

- Data Migration: Definition, Strategy and Tools | Informatica, accessed April 22, 2025, https://www.informatica.com/resources/articles/data-migration-definition-strategy-and-tools.html

- Big Bang vs Trickle Migration [Key Differences & Considerations], accessed April 22, 2025, https://brainhub.eu/library/big-bang-migration-vs-trickle-migration

- What is Data Migration? Strategy & Best Practices - Qlik, accessed April 22, 2025, https://www.qlik.com/us/data-migration

- Data Migration Challenges: Strategies for a Smooth Transition - Astera Software, accessed April 22, 2025, https://www.astera.com/type/blog/data-migration-challenges/

- Data Migration Challenges: How to Ensure a Smooth Transition - Hopp Tech, accessed April 22, 2025, https://hopp.tech/resources/data-migration-blog/data-migration-challenges/

- 10 Common Data Migration Challenges and How To Overcome Them - Cloudficient, accessed April 22, 2025, https://www.cloudficient.com/blog/10-common-data-migration-challenges-and-how-to-overcome-them

- 9 Common Data Migration Challenges and How to Mitigate Them - Tredence, accessed April 22, 2025, https://www.tredence.com/blog/data-migration-challenges (Note: Cites McKinsey cost increase)

- 12 Challenges in Financial Data Migration and How IT leaders Tackle Them, accessed April 22, 2025, https://dataladder.com/12-challenges-in-financial-data-migration-and-how-it-leaders-tackle-them/

- DELOITTE'S MIGRATION FACTORY FOR THE DATABRICKS DATA INTELLIGENCE PLATFORM, accessed April 22, 2025, https://www2.deloitte.com/content/dam/Deloitte/us/Documents/consulting/databricks-migrationfactory-brick-builder-salessheet_202410.pdf

- Data Platform Migration: proven strategies and cost analysis - Kellton, accessed April 22, 2025, https://www.kellton.com/kellton-tech-blog/data-platform-migration-strategies

- Data Migration: Definition, Strategy and Tools | Informatica (Refers to phased migration downtime, duplicate of 12)

- Data Migration Strategy Guide | Pure Storage, accessed April 22, 2025, https://www.purestorage.com/au/knowledge/data-migration-strategy-guide.html

- A quick guide to data migration: best strategies for post-merger success - Transcenda, accessed April 22, 2025, https://www.transcenda.com/insights/a-quick-guide-to-data-migration-best-strategies-for-post-merger-success

- A six-stage process for data migration - ETL Solutions, accessed April 22, 2025, https://etlsolutions.com/a-six-stage-process-for-data-migration/

- DATA MIGRATION FOR PROJECT LEADERS - A Guidebook - SAS, accessed April 22, 2025, https://www.sas.com/content/dam/SAS/bp_de/doc/whitepaper1/ba-wp-data-migration-for-project-leaders-a-guidebook-2006948.pdf

- Data migration in 4 stages - XTEP, accessed April 22, 2025, https://www.xtep.fr/en/data-migration-in-4-stages-data-migration/

- Understanding Cloud Migration Costs: A Comprehensive Guide, accessed April 22, 2025, https://www.missioncloud.com/blog/understanding-cloud-migration-costs-a-comprehensive-guide

- 12 Challenges in Financial Data Migration and How IT leaders Tackle Them (Refers to security, duplicate of 22)

- Blog: The True Cost of Downtime - Vcinity, accessed April 22, 2025, https://vcinity.io/news/blog-the-true-cost-of-downtime/

- Calculating the cost of downtime | Atlassian, accessed April 22, 2025, https://www.atlassian.com/incident-management/kpis/cost-of-downtime

- What Keeping Your Legacy Systems is Really Costing Your Business - StratusGrid, accessed April 22, 2025, https://stratusgrid.com/blog/keeping-legacy-systems-is-costing-you-money

- Understanding Implementation Fees: What They Are and Why They ..., accessed April 22, 2025, https://itexus.com/understanding-implementation-fees-what-they-are-and-why-they-matter/

- The Cost of Maintaining Legacy Systems for Your Organization - Cloudficient, accessed April 22, 2025, https://www.cloudficient.com/blog/the-cost-of-maintaining-legacy-systems-for-your-organization

- Understanding ERP Project Cost | Blog - Ultra Consultants, accessed April 22, 2025, https://ultraconsultants.com/erp-software-blog/how-much-does-it-cost-to-implement-an-erp/

- How Much Does It Cost to Migrate Legacy System to Modern Platforms - Codzgarage, accessed April 22, 2025, https://www.codzgarage.com/blog/cost-to-migrate-legacy-system/

- What are the hidden costs of maintaining legacy systems? - RecordPoint, accessed April 22, 2025, https://www.recordpoint.com/blog/maintaining-legacy-systems-costs

- A Big Bang Implementation Vs A Phased ERP Implementation - Panorama Consulting, accessed April 22, 2025, https://www.panorama-consulting.com/big-bang-implementation/

- Reducing Costs and Risks for Data Migrations - Hitachi Vantara, accessed April 22, 2025, https://www.hitachivantara.com/go/cost-efficiency/pdf/white-paper-reducing-costs-and-risks-for-data-migrations.pdf

- Cost of Delay: How to Calculate the Cost of Postponing or Missing an Opportunity, accessed April 22, 2025, https://fastercapital.com/content/Cost-of-Delay--How-to-Calculate-the-Cost-of-Postponing-or-Missing-an-Opportunity.html

- Cost of Delay: How to Measure the Opportunity Cost of Postponing a Decision or Action, accessed April 22, 2025, https://fastercapital.com/content/Cost-of-Delay--How-to-Measure-the-Opportunity-Cost-of-Postponing-a-Decision-or-Action.html

- Data Migration Service Packages - Cart2Cart, accessed April 22, 2025, https://www.shopping-cart-migration.com/provided-services/data-migration-service-packages
