ETL vs Importer vs Platform: Which Data Tool Should You Choose?

ETL vs. Importer vs. Advanced Platform: Which Data Migration Tool is Right for You?

Once you understand the different categories of data tools available (as outlined in our Data Transformation Tools Landscape Guide), the next critical step is choosing the right type for your specific implementation needs. Comparing ETL, basic importers, and advanced platforms can be challenging, especially when dealing with complex client files (CSV, Excel, JSON). This post focuses on the decision criteria: a practical data migration tool selection framework to help you determine when to use ETL, when a basic import tool is enough, and when you need an advanced data onboarding platform. We'll help you select the best fit for your file complexity, transformation logic, and validation needs, avoid costly mismatches, and find the essential features detailed in our Capabilities Checklist.

Scenario 1: When is Traditional ETL the Right Choice?

Traditional ETL (Extract, Transform, Load) platforms are the established powerhouses for large-scale, ongoing data integration between stable, internal systems.

Choose ETL if your primary need involves:

- System-to-System Integration: Connecting internal databases (SQL Server, Oracle), data warehouses, CRMs (Salesforce), and ERPs (NetSuite) for regular synchronization.

- Data Warehousing: Building and maintaining large historical data repositories for business intelligence and reporting.

- High-Volume, Structured Data: Processing massive, predictable datasets flowing between well-defined internal schemas.

ETL Use Cases: Nightly sales data aggregation from CRM to a data warehouse; synchronizing product catalogs between ERP and e-commerce platforms.
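To make the extract, transform, and load stages concrete, here is a minimal sketch of the nightly sales aggregation use case. It assumes a hypothetical `orders` table in the CRM and a hypothetical `daily_sales` summary table in the warehouse, and uses Python's built-in `sqlite3` in-memory databases as stand-ins for both systems (`INSERT OR REPLACE` is SQLite syntax). A production pipeline would use your ETL platform's own connectors and scheduler.

```python
import sqlite3


def nightly_sales_etl(crm: sqlite3.Connection, warehouse: sqlite3.Connection) -> int:
    """Aggregate raw CRM orders into a per-day, per-region summary table."""
    # Extract: pull raw rows from the hypothetical CRM "orders" table.
    rows = crm.execute("SELECT order_date, region, amount FROM orders").fetchall()

    # Transform: total the order amounts for each (date, region) pair.
    totals: dict[tuple[str, str], float] = {}
    for order_date, region, amount in rows:
        key = (order_date, region)
        totals[key] = totals.get(key, 0.0) + amount

    # Load: write aggregates to the hypothetical "daily_sales" table; re-running
    # the job replaces rows with the same key instead of duplicating them.
    warehouse.execute(
        "CREATE TABLE IF NOT EXISTS daily_sales ("
        "order_date TEXT, region TEXT, total REAL, "
        "PRIMARY KEY (order_date, region))"
    )
    warehouse.executemany(
        "INSERT OR REPLACE INTO daily_sales VALUES (?, ?, ?)",
        [(d, r, t) for (d, r), t in totals.items()],
    )
    warehouse.commit()
    return len(totals)


if __name__ == "__main__":
    crm = sqlite3.connect(":memory:")
    crm.execute("CREATE TABLE orders (order_date TEXT, region TEXT, amount REAL)")
    crm.executemany(
        "INSERT INTO orders VALUES (?, ?, ?)",
        [("2024-01-01", "EU", 100.0), ("2024-01-01", "EU", 50.0), ("2024-01-01", "US", 75.0)],
    )
    warehouse = sqlite3.connect(":memory:")
    print(nightly_sales_etl(crm, warehouse), "summary rows written")
```

Because both schemas are fixed and known in advance, this kind of job is easy to schedule and hard to break, which is exactly where ETL shines.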

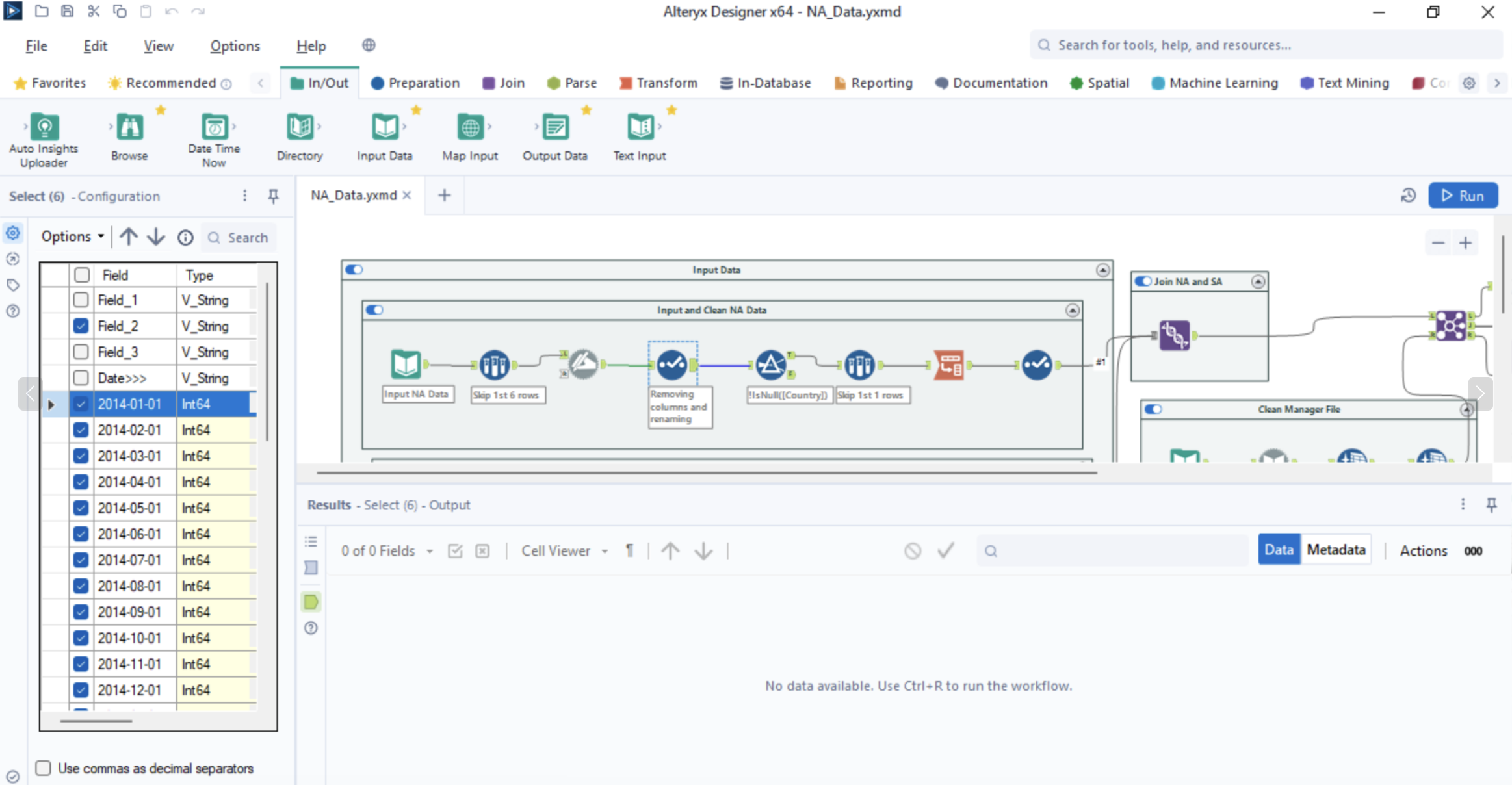

Typical node-based interface of a traditional ETL tool, often requiring specialized skills.

Limitations for File-Based Onboarding/Migration:

- File Handling Rigidity: ETL tools can ingest files, but configuring them for the unique structure and inconsistencies of each client's CSV or Excel file is often cumbersome and slow. They lack agility for diverse, ad-hoc file formats.

- Complexity & Cost: Require significant setup, specialized ETL developers, ongoing maintenance, and often substantial licensing fees – frequently overkill for project-based file transformations. Migration costs can escalate quickly.

- Slow Iteration: The development cycle for handling unique file logic can be lengthy, hindering rapid onboarding.

Bottom Line: ETL is powerful for internal system integration but often too rigid, complex, and costly for the specific demands of varied, file-based client data onboarding and migration projects.

Scenario 2: When Do Basic Data Import Tools Suffice?

Tools like Flatfile or OneSchema focus on simplifying the initial file upload experience, particularly for end-users.

Choose Basic Importers if your primary need involves:

- End-User Uploads: Providing a simple interface for non-technical users to upload basic CSV or Excel files directly into a SaaS application.

- Simple Column Mapping: Matching source columns to target fields directly (e.g., 'Email Address' to 'email').

- Basic Validation: Catching obvious errors like missing required fields, incorrect data types (text vs. number), or basic format checks (e.g., simple email pattern).

Importer Use Cases: Users uploading a list of contacts into a CRM; customers importing simple product lists.
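The contact-upload case shows how little logic a basic importer actually needs. The sketch below uses a hypothetical header-to-field mapping and does what the list above describes: rename columns, flag missing required fields, reject a non-numeric value, and apply a simple email pattern. Nothing in it can express a rule that spans several fields or consults another system.

```python
import re

# Hypothetical mapping from a client's column headers to the app's target fields.
COLUMN_MAP = {"Email Address": "email", "Full Name": "name", "Age": "age"}
REQUIRED_FIELDS = ("email", "name")
# Deliberately simple pattern: catches obvious typos, not every invalid address.
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def import_row(raw: dict[str, str]) -> tuple[dict[str, str], list[str]]:
    """Rename known columns, drop unknown ones, and run basic checks."""
    record = {COLUMN_MAP[col]: val.strip() for col, val in raw.items() if col in COLUMN_MAP}
    errors: list[str] = []

    # Required-field check.
    for field in REQUIRED_FIELDS:
        if not record.get(field):
            errors.append(f"missing required field '{field}'")

    # Format check: simple email pattern.
    if record.get("email") and not EMAIL_PATTERN.match(record["email"]):
        errors.append("email has an invalid format")

    # Type check: age must be a whole number if present.
    if record.get("age") and not record["age"].isdigit():
        errors.append("age must be a whole number")

    return record, errors


if __name__ == "__main__":
    print(import_row({"Email Address": "ana@example.com", "Full Name": "Ana", "Age": "34"}))
    print(import_row({"Email Address": "not-an-email", "Age": "abc"}))
```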

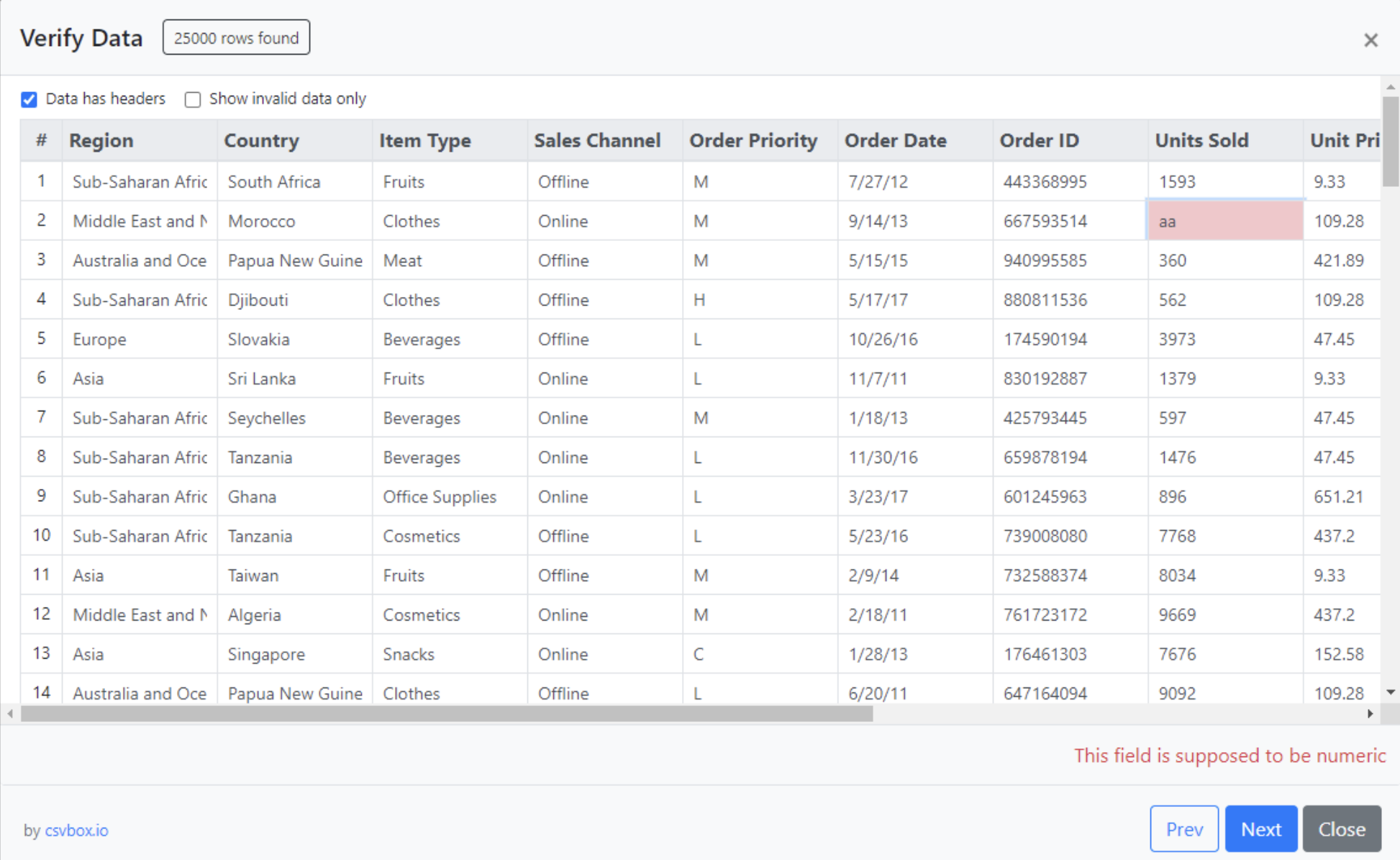

Example of a simple CSV importer interface, focused on basic mapping and validation.

Limitations for Complex Implementation Needs:

- Minimal Transformation Power: This is the critical limitation of basic import tools. They cannot handle complex business logic (e.g., conditional calculations, data restructuring, lookups). If you need more than simple mapping, they fall short.

- Superficial Validation: Validation is typically restricted to basic checks. They cannot enforce complex business rules (e.g., "Ensure sum of splits equals 100%" or "Verify Product SKU exists via API lookup"). See our guide on mastering data validation for what's often required.

- Lack of Reusability for Complex Logic: While basic templates might exist, they aren't designed for managing and reusing the intricate, version-controlled transformation and validation logic that implementation teams need when handling diverse clients. When sophisticated rules are required, teams typically end up looking for a Flatfile or OneSchema alternative rather than stretching these tools further.
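For contrast, here is a minimal sketch of the two business rules quoted above, written as a plain Python validator that reports cell-level errors. It assumes split columns use a hypothetical `split_` prefix and contain numeric text, and it takes the SKU check as a callable so that a real API lookup could replace the in-memory set used here. `Decimal` is used so that decimal percentages are summed exactly rather than with binary floating-point rounding.

```python
from dataclasses import dataclass
from decimal import Decimal
from typing import Callable


@dataclass
class CellError:
    row: int      # 1-based data row number
    column: str   # column (or column group) the error is attached to
    message: str


def validate(rows: list[dict[str, str]], sku_exists: Callable[[str], bool]) -> list[CellError]:
    """Apply two cross-field business rules and collect cell-level errors."""
    errors: list[CellError] = []
    for row_num, row in enumerate(rows, start=1):
        # Rule 1: allocation splits (hypothetical "split_" columns) must total 100%.
        split_cols = [col for col in row if col.startswith("split_")]
        total = sum((Decimal(row[col] or "0") for col in split_cols), Decimal("0"))
        if total != Decimal("100"):
            errors.append(CellError(row_num, "split_*", f"splits sum to {total}%, expected 100%"))

        # Rule 2: the SKU must exist; sku_exists could wrap an API call.
        sku = row.get("sku", "")
        if not sku_exists(sku):
            errors.append(CellError(row_num, "sku", f"unknown SKU '{sku}'"))
    return errors


if __name__ == "__main__":
    catalog = {"A-100", "B-200"}  # stand-in for a product catalog API
    sample = [
        {"sku": "A-100", "split_a": "33.3", "split_b": "33.3", "split_c": "33.4"},
        {"sku": "Z-999", "split_a": "50", "split_b": "40"},
    ]
    for err in validate(sample, lambda s: s in catalog):
        print(err)
```

Each rule needs values from more than one cell or from outside the file, which is precisely what per-column checks in a basic importer cannot see.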

Bottom Line: Basic importers improve the simple upload experience but lack the transformation and validation depth required for most implementation-led data migration and onboarding projects involving business logic. Relying on them for complex tasks often leads back to the spreadsheet trap.

Scenario 3: The Case for Advanced Data Transformation Platforms

This category bridges the gap between rigid ETL and simplistic importers, offering ETL-like power specifically tailored for the complexities of file-based data transformation, migration, and validation by implementation teams. DataFlowMapper is a prime example of this advanced data onboarding platform type.

Choose an Advanced Platform if your primary need involves:

- Complex File-Based Transformations: Handling intricate logic, calculations, conditional flows, and data restructuring (e.g., CSV-to-nested-JSON) within diverse CSV, Excel, and JSON files.

- Implementation/Migration Team Empowerment: Providing tools directly usable by implementation specialists, data analysts, and onboarding managers, not just developers.

- Advanced Business Rule Validation: Enforcing complex validation rules that go beyond basic checks, often involving lookups or custom logic, with clear error feedback.

- Repeatable, Reusable Logic: Creating, saving, versioning, and reusing complex mapping and transformation templates across multiple clients and projects. This is crucial for building a library of reusable data validation and import templates and enabling a proactive onboarding approach.

- Data Enrichment & Connectivity: Performing lookups against external APIs or databases during transformation (e.g., using DataFlowMapper's 'RemoteLookup') and potentially delivering cleaned data to target systems.

- Agility & Speed: Rapidly configuring transformations for unique client files without lengthy development cycles, often accelerated by AI features.
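CSV-to-nested-JSON restructuring is a good example of logic that simple column mapping cannot express. The sketch below groups flat order-line rows into one object per order and applies one conditional rule (dropping zero-quantity lines). The column names (`order_id`, `customer`, `sku`, `qty`) and the output shape are hypothetical, chosen for illustration rather than taken from any particular platform.

```python
import csv
import io
import json


def csv_to_nested_orders(csv_text: str) -> list[dict]:
    """Group flat order-line rows into one nested JSON object per order."""
    orders: dict[str, dict] = {}
    for row in csv.DictReader(io.StringIO(csv_text)):
        # Create the parent object the first time an order_id appears.
        order = orders.setdefault(row["order_id"], {
            "order_id": row["order_id"],
            "customer": row["customer"],
            "items": [],
        })
        # Conditional logic: skip zero-quantity lines instead of importing them.
        qty = int(row["qty"])
        if qty > 0:
            order["items"].append({"sku": row["sku"], "qty": qty})
    return list(orders.values())


SAMPLE = """order_id,customer,sku,qty
1001,Acme,A-100,2
1001,Acme,B-200,0
1002,Globex,A-100,5
"""

if __name__ == "__main__":
    print(json.dumps(csv_to_nested_orders(SAMPLE), indent=2))
```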

Advanced Platform Use Cases: Migrating complex financial transaction data requiring code translation and calculated fields; onboarding healthcare patient records needing validation against external master lists via API; transforming varied client product catalogs into a standardized JSON format for an e-commerce platform.
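The financial-transaction case combines code translation with calculated fields. Here is a minimal sketch under assumed inputs: the transaction codes, the translation table, and the `net_amount = quantity * price - fee` formula are all hypothetical. Unmapped codes raise an error instead of passing through untranslated, so problems surface during validation rather than in the target system.

```python
from decimal import Decimal, ROUND_HALF_UP

# Hypothetical translation table: legacy transaction codes -> target system codes.
TXN_CODE_MAP = {"BUY": "PURCHASE", "SEL": "SALE", "DIV": "DIVIDEND"}


def transform_txn(row: dict[str, str]) -> dict[str, object]:
    """Translate the transaction code and compute a net amount."""
    code = row["txn_code"].strip().upper()
    if code not in TXN_CODE_MAP:
        # Surface unmapped codes instead of silently passing them through.
        raise ValueError(f"no translation for transaction code {code!r}")

    quantity = Decimal(row["quantity"])
    price = Decimal(row["price"])
    fee = Decimal(row.get("fee") or "0")  # empty or missing fee counts as zero

    # Calculated field (hypothetical formula): quantity * price - fee,
    # rounded half-up to cents.
    net = (quantity * price - fee).quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)
    return {"type": TXN_CODE_MAP[code], "net_amount": net}


if __name__ == "__main__":
    print(transform_txn({"txn_code": "buy", "quantity": "10", "price": "12.345", "fee": "1.00"}))
```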

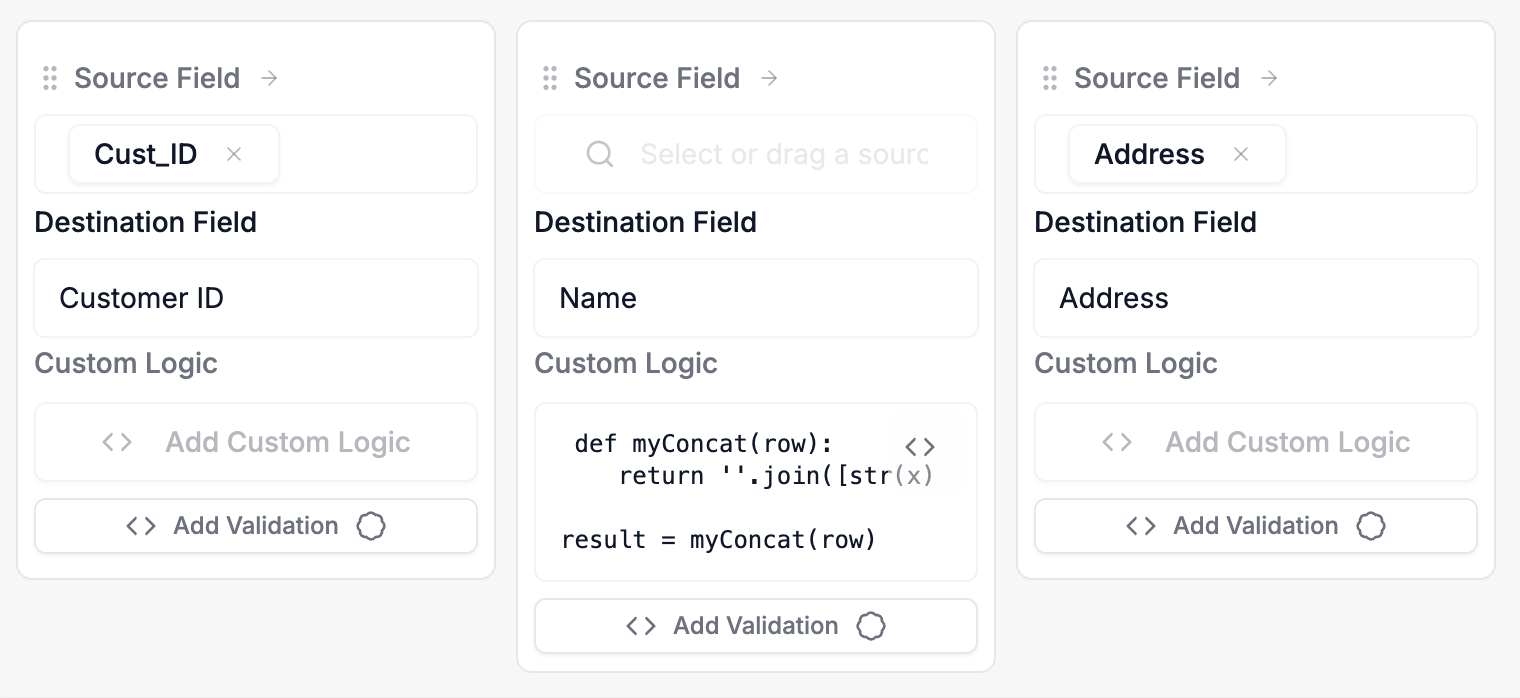

Example of an advanced platform interface (like DataFlowMapper) combining visual mapping with powerful logic builders.

Why Choose This Category? Key Capabilities Driving the Decision:

- Versatile File Handling: Natively parses complex CSVs (various delimiters, headers), multi-sheet Excel, and nested JSON.

- Powerful & Accessible Logic: Combines intuitive visual interfaces (like spreadsheet-style mapping) with no-code/low-code builders and integrated code editors (e.g., Python) for ultimate flexibility.

- Robust Validation Engine: Allows defining custom business rules using the same logic engine, providing actionable, cell-level error feedback.

- AI Assistance: Features like suggested mappings, AI-driven logic generation from plain English, and full mapping orchestration significantly accelerate setup.

- Integrated Workflow: Designed around the implementation lifecycle: Upload -> Map/Logic -> Transform -> Validate -> Review -> Deliver. For a full list of essential features, see our capabilities checklist.
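Handling CSVs with various delimiters and headers largely comes down to not assuming a fixed layout. As a sketch of the idea using only Python's standard library (not a description of how any particular platform parses files), `csv.Sniffer` can guess the delimiter from a sample before parsing, and header names can be normalized so stray whitespace doesn't break column mapping. `sniff` raises `csv.Error` when it cannot decide, which a real tool would need to report back to the user.

```python
import csv
import io


def read_client_csv(text: str) -> list[dict[str, str]]:
    """Parse CSV text whose delimiter is not known in advance."""
    # Guess the dialect from a sample, limited to common delimiters.
    # Raises csv.Error if no consistent delimiter can be found.
    dialect = csv.Sniffer().sniff(text[:4096], delimiters=",;\t|")
    reader = csv.DictReader(io.StringIO(text), dialect=dialect)

    # Reading fieldnames consumes the header row; strip stray whitespace so
    # " name " and "name" map to the same target field.
    reader.fieldnames = [name.strip() for name in reader.fieldnames or []]
    return [dict(row) for row in reader]


if __name__ == "__main__":
    semicolon_file = "id; name ;city\n1;Ana;Lisbon\n2;Bo;Oslo"
    print(read_client_csv(semicolon_file))
```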

Bottom Line: Advanced platforms provide the power and flexibility implementation teams need for complex, file-based data transformations and validations, offering a more efficient and better-suited alternative to traditional ETL or basic importers in these data import scenarios.

The Decision Framework: Which Tool Category Fits Your Needs?

Choosing the right type of data onboarding platform requires evaluating your specific context. Ask these key questions to guide your selection:

- Primary Challenge: Are you integrating stable internal systems (→ ETL) or processing varied external client files (→ Basic Importer for simple cases, Advanced Platform for complexity)?

- Transformation Complexity: Is it just simple column mapping (→ Basic Importer) or does it involve complex calculations, conditional logic, restructuring, and business rules (→ Advanced Platform)?

- File Variability & Complexity: Are incoming files consistent and simple (→ Basic Importer might work) or diverse, complex (nested structures, multiple sheets), and inconsistent (→ Advanced Platform)?

- Need for Reusable Logic: Is it a one-off task, or do you need to save, manage, version, and reuse complex transformation and validation logic across many clients/projects (→ Advanced Platform)?

- Primary User: Are dedicated ETL developers available (→ ETL), or do implementation specialists/analysts need to manage the process (→ Basic Importer for simple tasks, Advanced Platform for complex ones)?

- Validation Depth: Are basic format checks enough (→ Basic Importer), or do you need to enforce complex business rules and perform external lookups (→ Advanced Platform)?

- Data Enrichment Required? Do you need to look up data from APIs or databases during the transformation process (→ Advanced Platform)?

- Agility vs. Overhead: Do you need rapid configuration for diverse files with moderate cost (→ Advanced Platform), or can you afford the time/cost/complexity of enterprise integration (→ ETL)?
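The questions above can be condensed into a rough rule of thumb. The function below is a hypothetical heuristic, not a scoring model defined by this framework: internal system integration points to ETL, any complexity signal for external files points to an Advanced Platform, and everything else to a Basic Importer. It deliberately ignores cost, team skills, and overhead, which still need a human judgment.

```python
from dataclasses import dataclass


@dataclass
class Needs:
    # Hypothetical yes/no answers to the framework questions.
    internal_system_integration: bool  # stable internal systems, not client files
    complex_transformations: bool      # calculations, conditional logic, restructuring
    varied_or_complex_files: bool      # nested JSON, multi-sheet Excel, inconsistent layouts
    reusable_versioned_logic: bool     # templates reused across clients and projects
    business_rule_validation: bool     # rules beyond format checks, external lookups
    enrichment_lookups: bool           # API or database lookups during transformation


def recommend(needs: Needs) -> str:
    """Hypothetical rule of thumb condensing the framework questions."""
    # Primary challenge: internal system-to-system integration points to ETL.
    if needs.internal_system_integration:
        return "Traditional ETL"
    # Any complexity signal for external files points to an Advanced Platform.
    if any([
        needs.complex_transformations,
        needs.varied_or_complex_files,
        needs.reusable_versioned_logic,
        needs.business_rule_validation,
        needs.enrichment_lookups,
    ]):
        return "Advanced Platform"
    # Simple, consistent files with basic checks: a Basic Importer suffices.
    return "Basic Importer"
```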

Comparison Summary: Finding Your Fit

| Factor | Traditional ETL | Basic Importer | Advanced Platform (e.g., DataFlowMapper) |
|---|---|---|---|
| Best For | Internal System Integration, Data Warehousing | Simple End-User File Uploads | Complex File Transformation & Validation by Implementation Teams |
| Handles Complex Logic? | Yes (Code/Config Heavy) | No / Very Limited | Yes (Visual + Code) |
| Handles Complex Validation? | Yes (Often Coded) | No (Basic Checks Only) | Yes (Custom Rules, Lookups) |
| Reusable Complex Templates? | Yes (Complex Management) | No / Limited | Yes (Core Feature) |
| Optimized for Varied Files? | No | Partially (Simple Only) | Yes |
| Primary User | ETL Developers | End-Users / Ops | Implementation / Data Teams |

Conclusion: Making the Right Choice for Your Implementation Needs

Choosing the right data migration platform type isn't just about features; it's about aligning the tool's core strengths with your team's primary challenges. While traditional ETL excels at internal integration and basic importers simplify end-user uploads, Advanced Data Transformation Platforms are specifically designed to handle the complex file transformations and validations common in implementation and migration projects. This category often provides the best ETL alternatives for data import scenarios involving intricate business logic.

Don't get stuck with inefficient scripts or tools that fall short. Use the decision framework questions outlined above to analyze your specific needs:

- What is your primary challenge (internal systems vs. external files)?

- How complex is your transformation logic?

- How varied and complex are your input files?

- Do you need reusable, versioned logic?

- Who are the primary users (developers vs. implementation specialists)?

- What level of validation depth is required?

- Is data enrichment during transformation necessary?

- What is your tolerance for agility versus overhead?

By using this data migration tool selection framework and carefully weighing factors like transformation complexity, file variability, and validation depth, you can confidently determine when to use ETL, when a basic import tool is enough, and when an Advanced Platform like DataFlowMapper is the better fit. Making the right choice at the category level turns complex file-based data onboarding from a bottleneck into a streamlined process, often yielding significant ROI by reducing manual effort and errors. Once you've identified the best category, refer back to our capabilities checklist to evaluate specific tools within it.
