Data Transformation Tools Guide: Understanding Categories for Implementation Teams

Mapping the Terrain: Understanding Data Transformation Tool Categories for Implementation Success

For teams managing software implementations, data migrations, or complex customer onboarding projects, data transformation isn't just a task: it's the critical bridge between raw client data and a successful go-live. You receive diverse client files (often messy CSVs, intricate Excel spreadsheets, or nested JSON) and must clean, validate, and reshape them for your target system. This demands more than simple mapping; it requires tools capable of handling complex business logic, varied formats, and rigorous data validation. Choosing the right approach is paramount.

The challenge? The market offers a vast array of options, making it difficult to know where to start. Selecting an inappropriate tool leads to fragile custom scripts, endless manual data transformation in spreadsheets (Excel data wrangling that drains resources), project delays, and compromised data quality. This data transformation tools guide aims to clarify the landscape, helping implementation and onboarding teams understand the distinct categories of tools available.

Why focus on categories first? Because you need to grasp the core purpose, strengths, and inherent limitations of each tool category before you start comparing specific vendors or diving into detailed feature lists. This guide provides that overview of data migration software categories and the fundamental differences in their approaches. Once you understand the types, you'll be better equipped to decide which category best suits your needs (which we explore further in our ETL vs. Importer vs. Platform comparison guide).

Why Understanding Tool Categories Matters for Implementation Teams

Before exploring the types, let's solidify why this foundational knowledge is crucial:

- Client Onboarding Experience: Efficient transformation minimizes errors during the vital customer data onboarding process.

- Migration Risk & Cost Mitigation: Identifying issues before loading prevents costly rework. Understanding migration costs is key.

- Handling Data Complexity: Implementation data requires tools adept at handling diverse formats (CSV, Excel, JSON) and client-specific rules.

- Scalability & Repeatability: Effective teams need tools supporting reusable logic and templates for consistency, enabling proactive, standardized onboarding.

- Ensuring Data Quality: The goal is accurate, validated data fit for the target system, achieved through robust cleansing and validation processes.

Without tools suited to these needs, teams often default to inefficient methods like spreadsheets or custom scripts, creating bottlenecks and risks. The hidden costs of the spreadsheet trap are significant.

The Landscape of Data Transformation Tools: A Category Overview

Let's survey the major categories, focusing on their suitability for implementation teams dealing primarily with file-based client data (CSV, Excel, JSON). This data transformation tools guide provides the map to navigate the options.

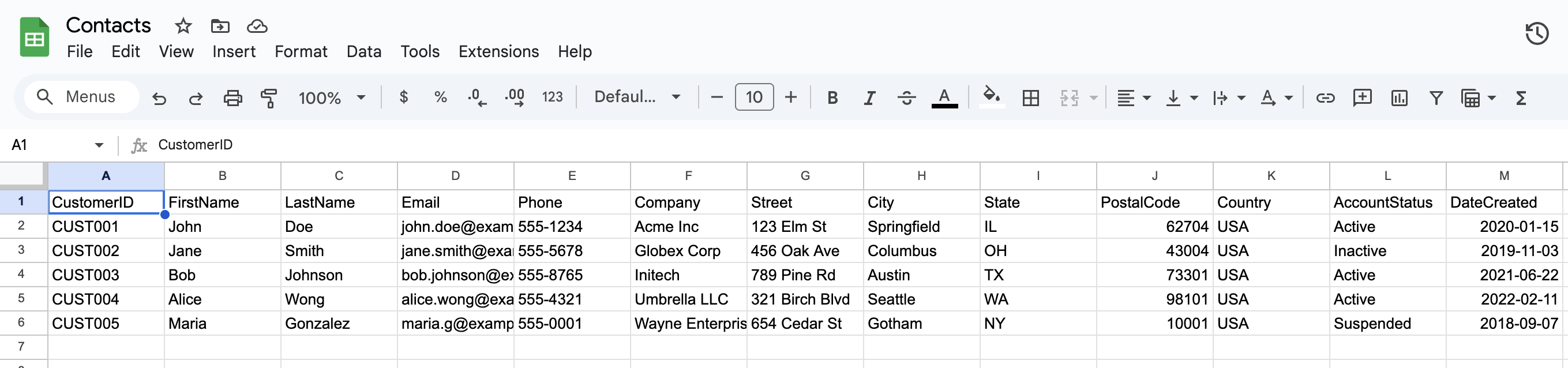

Category 1: Spreadsheets (The Manual Starting Point)

Often the default starting point due to familiarity, but limitations quickly surface.

- Core Function: Manual data viewing and manipulation using built-in formulas (VLOOKUP, INDEX/MATCH), pivot tables, and potentially basic scripting (VBA, Google Apps Script).

- Illustrative Examples: Microsoft Excel, Google Sheets.

- Characteristics for Implementation Teams:

  - Accessibility: Widely available and familiar interface.

  - Limitations: Prone to errors due to manual processes; scalability issues with large files; lacks robust auditability/versioning; limited power for complex logic, restructuring, or validation beyond basic formats. Often inadequate for typical implementation data complexity and volume.

Category 2: Enterprise ETL/ELT Platforms (The Heavy-Duty Integrators)

These are the heavy-duty data integration platforms designed for large-scale, continuous data flows, primarily between major internal enterprise systems and data warehouses. While powerful and often listed among ETL tools for data migration, they come with caveats for typical implementation team workflows focused on external files.

- Core Function: Extract data from diverse sources, apply complex transformations using a dedicated engine (ETL) or within a target data warehouse (ELT), and load into target systems. Built for high-volume data integration, governance, and operational stability.

- Illustrative Examples: Informatica PowerCenter/IDMC, Talend (Qlik), IBM DataStage, Microsoft SSIS/Azure Data Factory, Fivetran (primarily EL), Stitch (Talend).

- Characteristics for Implementation Teams:

  - Capabilities: Possess powerful transformation engines, scheduling, monitoring, error handling, and data governance features.

  - Considerations: Typically involve high complexity and cost (licensing, infrastructure, specialized developers); configuration can be rigid and slow for iterating on unique client file requirements; core design is often optimized for stable internal sources rather than variable external client files. Generally suited for large-scale, continuous internal data flows.
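
The ETL versus ELT distinction described under Core Function can be made concrete with a small sketch. It uses Python's built-in `sqlite3` module as a stand-in for a data warehouse; the table names, sample rows, and `clean` helper are assumptions made up for illustration, not taken from any of the platforms listed.

```python
import sqlite3

# Hypothetical extracted rows, standing in for a source system export.
raw_rows = [("  Alice ", "100.50"), ("BOB", "20"), ("carol", "7.25")]


def clean(name, amount):
    """The transformation itself: trim and lowercase the name, parse the amount."""
    return name.strip().lower(), float(amount)


# ETL: transform in application code first, then load the finished rows.
etl_db = sqlite3.connect(":memory:")
etl_db.execute("CREATE TABLE customers (name TEXT, amount REAL)")
etl_db.executemany(
    "INSERT INTO customers VALUES (?, ?)",
    [clean(name, amount) for name, amount in raw_rows],
)

# ELT: load the raw rows untouched, then transform inside the database with SQL.
elt_db = sqlite3.connect(":memory:")
elt_db.execute("CREATE TABLE raw_customers (name TEXT, amount TEXT)")
elt_db.executemany("INSERT INTO raw_customers VALUES (?, ?)", raw_rows)
elt_db.execute(
    """
    CREATE TABLE customers AS
    SELECT LOWER(TRIM(name)) AS name, CAST(amount AS REAL) AS amount
    FROM raw_customers
    """
)

query = "SELECT name, amount FROM customers ORDER BY name"
etl_result = etl_db.execute(query).fetchall()
elt_result = elt_db.execute(query).fetchall()
print(etl_result == elt_result)
```

Both routes end with the same table; what differs is where the transformation runs and whether the raw data is kept in the target.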

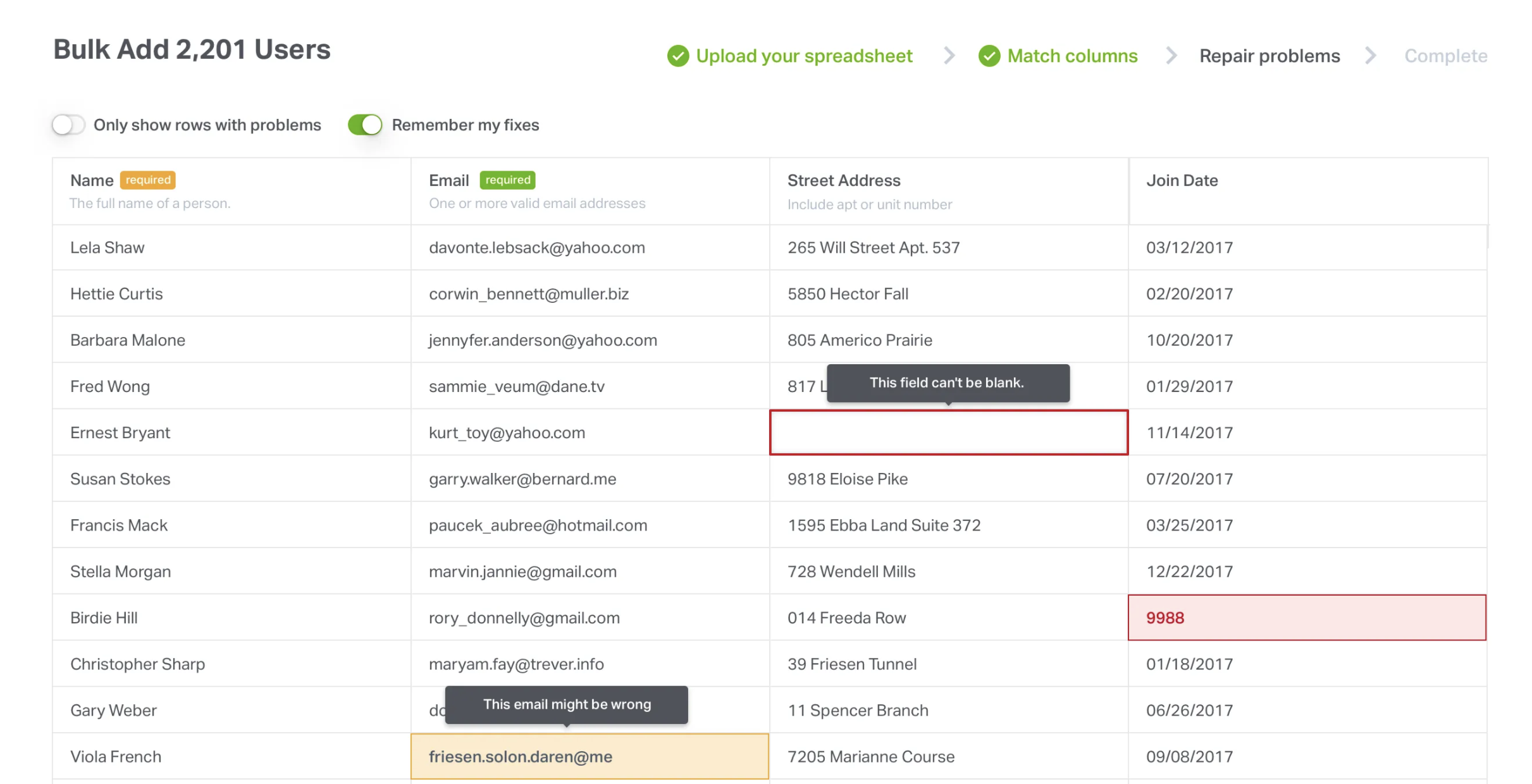

Category 3: Simple Data Cleaners & Importers (The End-User Upload Helpers)

This category focuses on simplifying the end-user's CSV import experience when uploading files into a SaaS application, performing basic checks upfront. Think of them as basic data onboarding tools or simple data cleaning tools focused purely on the initial upload.

- Core Function: Provide user-friendly interfaces (often embeddable widgets) for file uploads, basic column mapping suggestions, and simple validation (e.g., required fields, email format). The primary goal is easy data ingestion by the customer, improving their end-user upload experience.

- Illustrative Examples: Flatfile, OneSchema, CSVBox, UseCSV, Dromo.

- Characteristics for Implementation Teams:

  - Strengths: Offer excellent UI/UX for the end-user uploading data; enable quick setup for basic import scenarios; catch simple format errors effectively during upload.

  - Limitations: Possess severely limited transformation logic capabilities (cannot typically handle complex business rules, restructuring, or lookups required post-upload by implementation teams); validation is usually basic; focus is on the initial ingestion event, not the broader implementation transformation workflow.
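
To show what "simple validation" means in practice, here is a minimal sketch of the two checks named above: required fields and email format. The field names, the sample data, and the deliberately loose email pattern are assumptions for illustration; this is not how any particular importer implements its checks.

```python
import csv
import io
import re

REQUIRED_FIELDS = ["name", "email"]  # hypothetical target-schema requirement
# Deliberately loose pattern: something@something.something, no spaces.
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def validate_upload(csv_text):
    """Return (row_number, field, message) tuples for basic upload problems."""
    errors = []
    reader = csv.DictReader(io.StringIO(csv_text))
    # Data starts on row 2 because row 1 is the header.
    for row_number, row in enumerate(reader, start=2):
        for field in REQUIRED_FIELDS:
            if not (row.get(field) or "").strip():
                errors.append((row_number, field, "required value is missing"))
        email = (row.get("email") or "").strip()
        if email and not EMAIL_PATTERN.match(email):
            errors.append((row_number, "email", "not a valid email format"))
    return errors


sample = "name,email\nAda,ada@example.com\n,bob@example\nCy,\n"
upload_errors = validate_upload(sample)
for error in upload_errors:
    print(error)
```

Checks like these look at one cell at a time. Business rules that span columns, rows, or external lookups are the part this category typically leaves to other tools.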

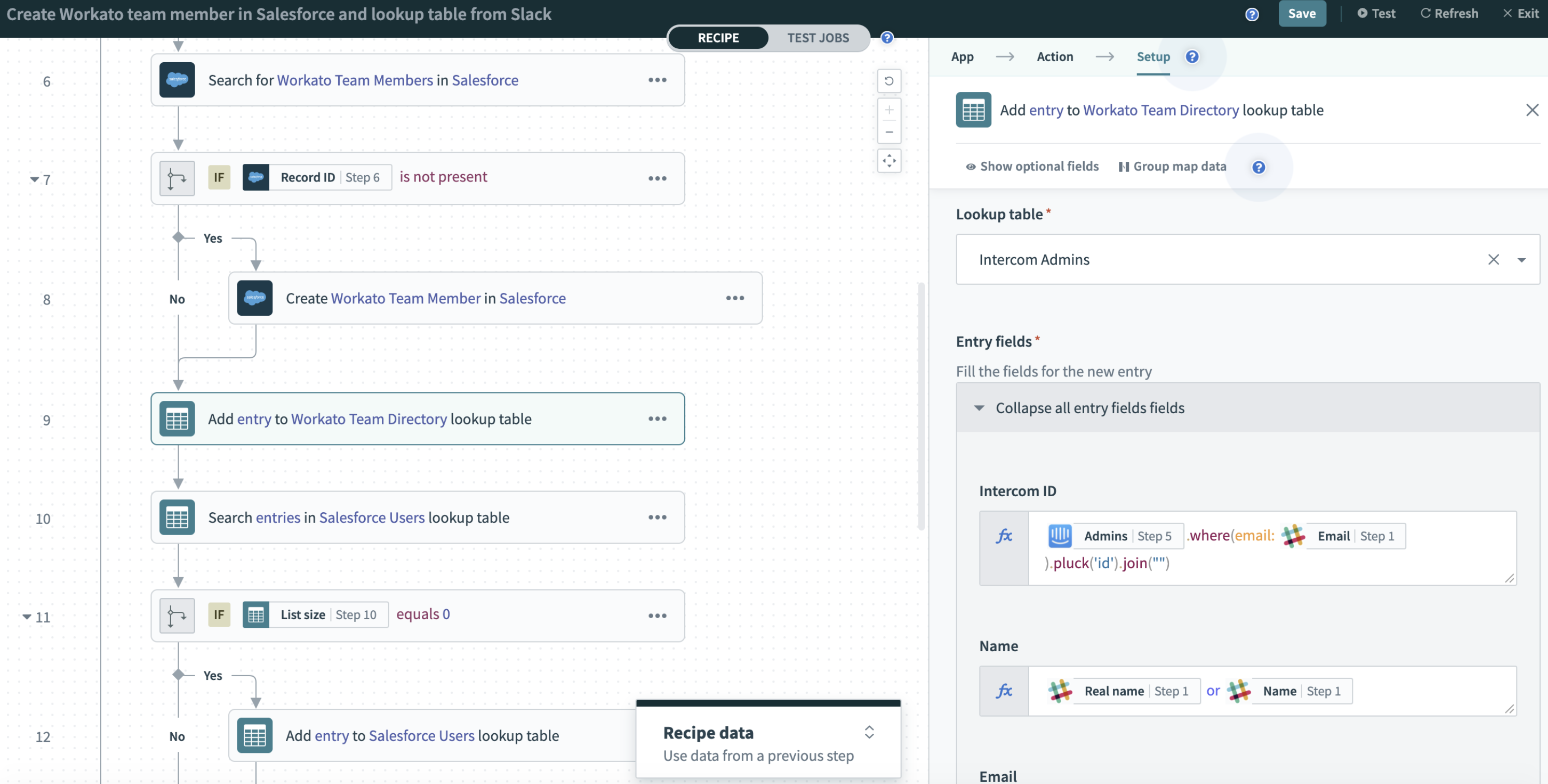

Category 4: Workflow Automation Platforms (iPaaS - The App Connectors)

These platforms, often called iPaaS (Integration Platform as a Service), excel at connecting cloud apps via APIs to automate tasks and pass data points between them. They are powerful API integration tools for workflow automation.

- Core Function: Trigger actions in one application based on events in another (e.g., new CRM lead triggers marketing email). Allow for simple data manipulation on individual data items flowing between connected APIs.

- Illustrative Examples: Zapier, Make (formerly Integromat), Tray.io, Workato, MuleSoft Anypoint Platform (also strong ETL).

- Characteristics for Implementation Teams:

  - Strengths: Excel at API-to-API integration; offer vast libraries of pre-built connectors; relatively easy setup for simple, linear task automation between cloud apps.

  - Limitations: Not designed for processing or transforming entire datasets within files; data handling is typically limited to individual records within an API-driven workflow; file processing is usually not the core focus. Generally unsuitable for the primary task of transforming and validating client data files.
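
The difference between per-record handling and whole-dataset processing is easiest to see side by side. The sketch below is an illustration only; the record layout and function names are hypothetical and do not represent any iPaaS product's API.

```python
from collections import Counter

# Hypothetical records, as they might arrive one at a time from an API trigger.
records = [
    {"id": "A1", "email": "ANN@EXAMPLE.COM"},
    {"id": "B2", "email": "bo@example.com"},
    {"id": "A1", "email": "ann@example.com"},
]


def handle_record(record):
    """Per-record step: sees one item only, which suits event-driven workflows."""
    return {**record, "email": record["email"].lower()}


def find_duplicate_ids(rows):
    """Dataset-level check: needs every row at once to spot repeated IDs."""
    counts = Counter(row["id"] for row in rows)
    return sorted(record_id for record_id, count in counts.items() if count > 1)


normalized = [handle_record(record) for record in records]
duplicate_ids = find_duplicate_ids(normalized)
print(duplicate_ids)
```

`handle_record` never sees more than one item, so it cannot notice that `A1` appears twice. File validation often depends on exactly that kind of cross-row view.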

Category 5: Custom Scripts (The DIY Power & Pitfalls)

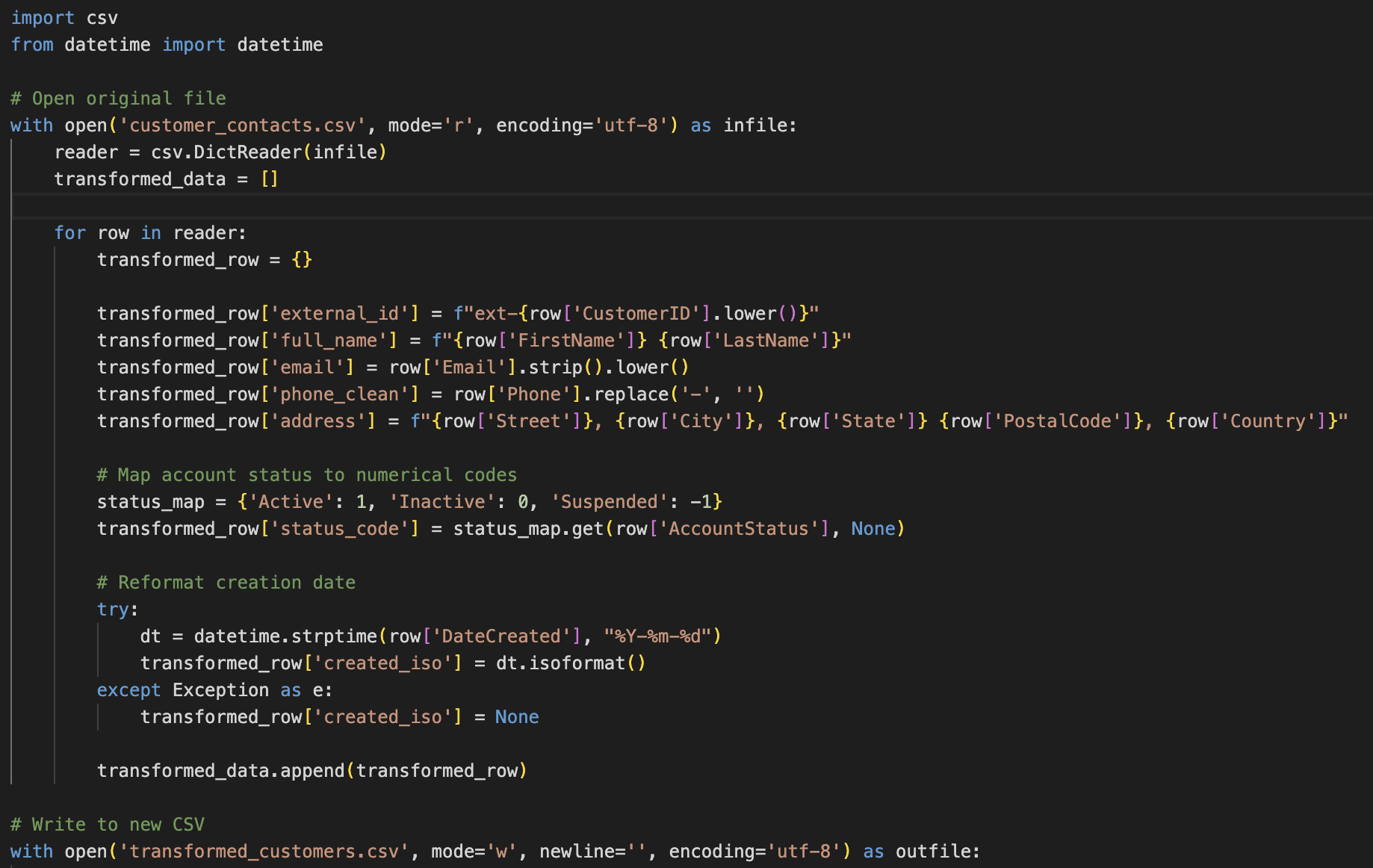

Developing a bespoke solution using programming languages like Python or SQL offers maximum control but comes with significant overhead. While powerful for Python data transformation using libraries like Pandas, or performing SQL data transformation, this approach has major drawbacks for implementation teams. Many teams seek Python alternatives for data migration specifically to avoid these issues.

- Core Function: Writing code (e.g., Python data transformation with Pandas, SQL data transformation) to perform any conceivable data manipulation, transformation, or validation logic, resulting in custom data migration scripts.

- Characteristics for Implementation Teams:

  - Capabilities: Offer ultimate flexibility to handle any edge case; leverage powerful open-source libraries; can integrate into existing development workflows. No direct software license fees.

  - Considerations: Require specialized programming skills (creating potential developer dependency); can incur high maintenance burdens (complexity, documentation, "knowledge silos"); typically lack a UI for non-coders (visual mapping, interactive validation); iteration and collaboration can be slower compared to visual tools. Reliance on scripting often leads to reactive chaos.
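
For a sense of what a custom migration script looks like, here is a minimal sketch that cleans, validates, and reshapes a small client file. It uses only the Python standard library rather than Pandas so that it stays self-contained; the column names, date format, and target field names are assumptions invented for the example.

```python
import csv
import io
import json
from datetime import datetime

# Hypothetical client export; columns and formats are made up for illustration.
CLIENT_CSV = """Cust Name,Signup,Plan
 ada lovelace ,03/15/2024,PRO
grace hopper,2024-13-01,basic
"""


def parse_us_date(value):
    """Convert MM/DD/YYYY to ISO 8601, or return None if the value does not parse."""
    try:
        return datetime.strptime(value.strip(), "%m/%d/%Y").date().isoformat()
    except ValueError:
        return None


def transform(csv_text):
    """Clean and reshape rows for the target system, collecting rejects separately."""
    clean_rows, rejected = [], []
    reader = csv.DictReader(io.StringIO(csv_text))
    for line_no, row in enumerate(reader, start=2):  # line 1 is the header
        signup = parse_us_date(row["Signup"])
        if signup is None:
            rejected.append({"line": line_no, "reason": f"bad date: {row['Signup']!r}"})
            continue
        clean_rows.append({
            "customer_name": row["Cust Name"].strip().title(),
            "signup_date": signup,
            "plan": row["Plan"].strip().lower(),
        })
    return clean_rows, rejected


rows, rejects = transform(CLIENT_CSV)
print(json.dumps(rows, indent=2))
print(json.dumps(rejects, indent=2))
```

Even at this size, every rule lives in code: a new client with a different date format or column name means another edit by someone who can read the script.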

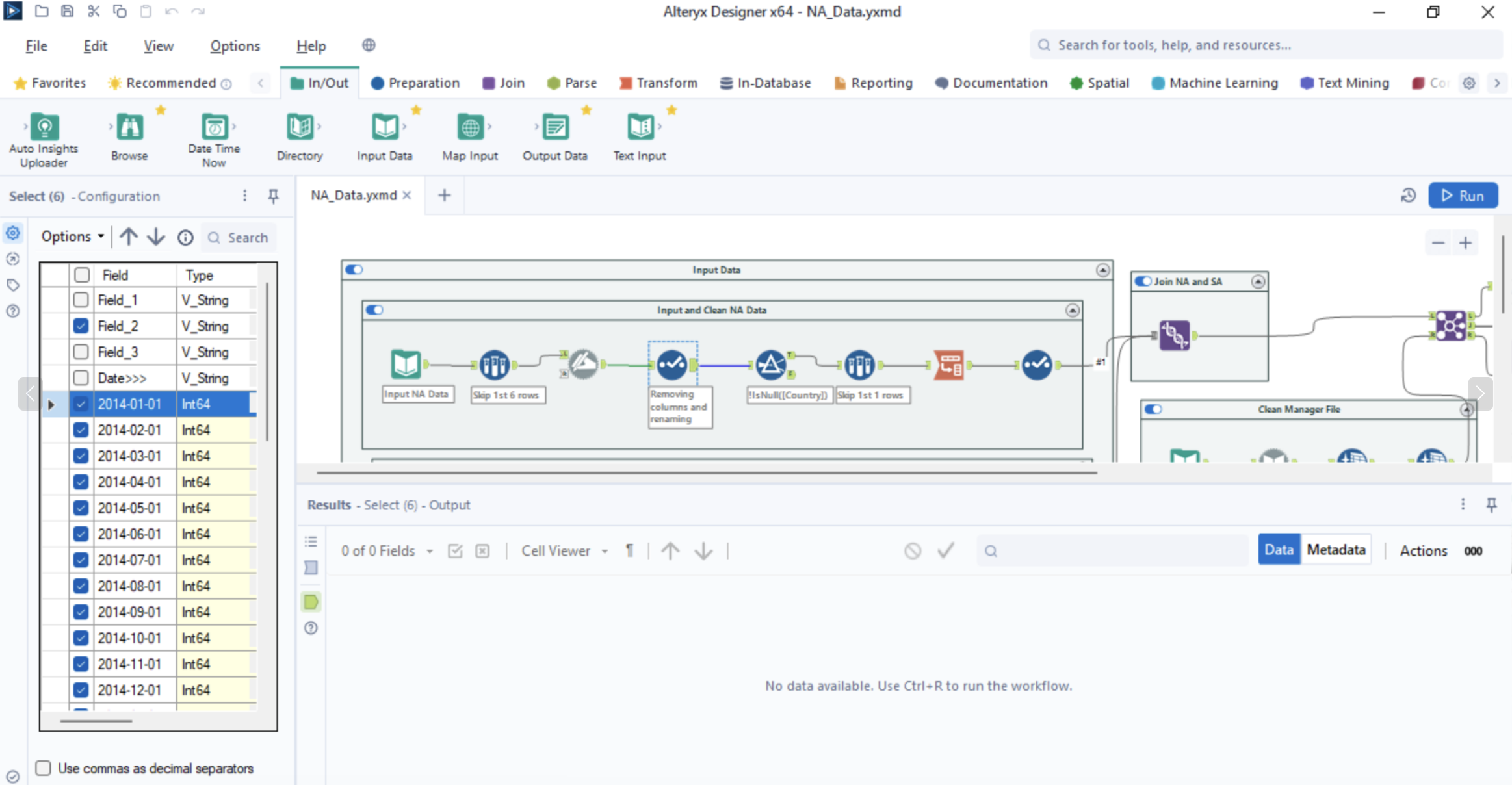

Category 6: Modern Data Transformation & Mapping Platforms (The Implementation Sweet Spot)

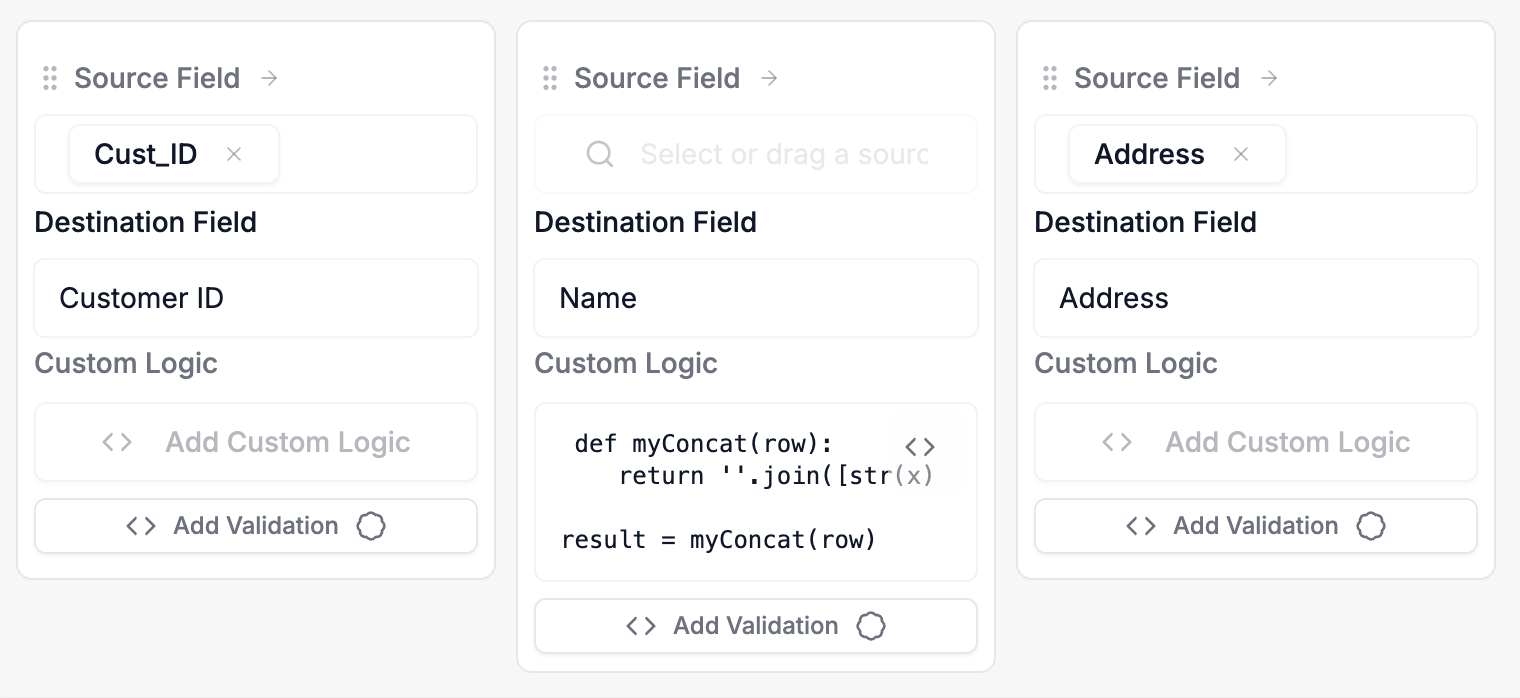

This evolving category represents a new breed of modern data transformation solutions, specifically addressing the gap between simpler tools and enterprise ETL. They focus directly on the needs of implementation, migration, and onboarding teams working with files, often marketed as user-friendly data transformation tools or no-code/low-code data transformation platforms. They serve as powerful Flatfile alternatives for complex logic or OneSchema alternatives for transformations.

- Core Function: Provide a dedicated environment – often combining visual data mapping interfaces with code options – designed for defining, executing, validating, and managing complex, repeatable transformations on file-based data (CSV, Excel, JSON). Key features often include API/DB connectivity for lookups/delivery and integrated AI data mapping tools. Many provide intuitive data transformation tools with visual mapping interfaces.

- Illustrative Examples: DataFlowMapper (purpose-built for implementation teams), Alteryx Designer/Designer Cloud, SnapLogic (also iPaaS), EasyMorph, KNIME (open-source option). Combinations like Airbyte + dbt require more technical setup for file-based workflows outside a warehouse and lack the integrated visual focus.

- Characteristics for Implementation Teams:

  - Capabilities: Offer powerful yet accessible logic (often combining visual and code-based approaches); feature advanced, configurable validation engines; frequently include AI assistance (mapping, logic generation); optimized for handling complex file types (CSV, Excel, JSON); designed with features for reusability and standardization; empower a wider range of team members (specialists, analysts, developers).

  - Considerations: May involve a learning curve (varying by platform); flexibility might not cover every extreme edge case achievable only with pure custom code; typically involve software subscription costs (often evaluated against potential ROI from efficiency gains and error reduction).
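
The idea of reusable mapping logic with lookups and validation can be sketched as a declarative template applied to each client's rows. Everything here is hypothetical: the template structure, the `COUNTRY_CODES` dictionary standing in for an API or database lookup, and the field names. It shows the concept only and is not the configuration format of any platform named above.

```python
# Stand-in for an API/DB lookup; a real lookup would query an external source.
COUNTRY_CODES = {"united states": "US", "germany": "DE"}

# Hypothetical reusable template: target field -> (source column, transform).
TEMPLATE = {
    "full_name": ("Name", str.strip),
    "country_code": ("Country", lambda v: COUNTRY_CODES.get(v.strip().lower())),
    "seats": ("Licenses", int),
}


def apply_template(template, source_row):
    """Map one source row to the target shape, collecting validation problems."""
    target, problems = {}, []
    for target_field, (source_column, transform) in template.items():
        try:
            value = transform(source_row[source_column])
        except (KeyError, ValueError) as exc:
            problems.append(f"{target_field}: {exc!r}")
            continue
        if value is None:
            problems.append(f"{target_field}: no lookup match for {source_row[source_column]!r}")
            continue
        target[target_field] = value
    return target, problems


client_a = {"Name": " Ada ", "Country": "Germany", "Licenses": "12"}
client_b = {"Name": "Bo", "Country": "Atlantis", "Licenses": "many"}

mapped_a, problems_a = apply_template(TEMPLATE, client_a)
mapped_b, problems_b = apply_template(TEMPLATE, client_b)
print(mapped_a, problems_a)
print(mapped_b, problems_b)
```

Because the rules live in data rather than in control flow, the same template can be reused for the next client, and a visual interface could edit it without touching the engine.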

Understanding the Tool Categories: Your Foundational Step

This data transformation tools guide has mapped the essential categories available in the current data transformation tools landscape. Recognizing that each category (from spreadsheets to advanced platforms) serves different core purposes is the vital first step for implementation teams navigating file-based client data onboarding. Understanding these fundamental differences in approach, strengths, and weaknesses is crucial before attempting to select a specific solution.

With this foundational knowledge of data migration software categories and data onboarding tools, you're better equipped to assess which type of tool truly aligns with your team's unique challenges and goals.

Where to Go From Here?

Understanding the landscape is the essential first step. Now that you grasp the categories, the next logical questions are:

- Which category best fits my specific needs and constraints? If you're weighing the pros and cons of the most common choices for implementation work, our ETL vs. Importer vs. Advanced Platform comparison guide provides a direct decision-making framework.

- Once I've identified a likely category, what specific features should I look for in a tool? To ensure you select a capable solution, explore our checklist in the 6 Must-Have Capabilities for Data Migration Software guide.

Frequently Asked Questions (FAQ) - Data Transformation Tool Categories

- Q: Can't I just use Python/Pandas for everything?

- A: You can, but consider the total cost of ownership: developer dependency, maintenance challenges ("knowledge silos"), lack of UI for mapping/validation feedback, slower iteration, and difficulty for non-coders. Modern platforms often offer Python's power within a more manageable, collaborative, visual framework, serving as effective Python alternatives for data migration workflows.

- Q: Are Modern Platforms just lightweight ETL tools?

- A: Their focus differs. They are optimized for processing diverse files (CSV/Excel/JSON) common in client onboarding, featuring accessible visual logic builders, advanced validation engines, AI assistance, and rapid iteration, often without the heavy infrastructure of enterprise ETL tools. See our ETL vs Importer vs Advanced Platform comparison.

- Q: How secure are Modern Platforms with client data?

- A: Reputable platforms prioritize security (encryption, access controls, audits, compliance like SOC 2). Always review vendor documentation. DataFlowMapper prioritizes security (see our Security Page).

- Q: Can these tools handle large files?

- A: Capabilities vary, but modern platforms generally handle typical implementation file sizes (millions of rows, GBs), exceeding spreadsheet limits. Check specific platform specs.

- Q: How does AI actually help in data mapping and transformation?

- A: AI accelerates mapping suggestions, translates natural language to logic, identifies patterns, and orchestrates mapping, reducing manual effort. Learn more about AI data mapping.

- Q: We struggle with transformation rules using simple importers (like Flatfile/OneSchema). How do modern platforms help?

- A: Simple importers limit transformation. If you need complex business logic post-upload, modern platforms act as powerful Flatfile alternatives for complex logic or OneSchema alternatives for transformations, providing dedicated environments for implementation teams.

- Q: How can we speed up client data onboarding and automate transformation rules?

- A: Modern platforms use AI mapping, reusable templates, and visual interfaces to reduce setup time, automate rules, and speed up client data onboarding compared to manual methods or scripting.

Conclusion: Understand the Categories to Conquer Data Complexity

Selecting the appropriate category from this data transformation tools guide is the foundational step towards implementation success. Relying on mismatched tools – whether attempting complex logic in spreadsheets or using cumbersome enterprise ETL for agile file processing – inevitably leads to inefficiency, errors, and project delays.

Modern Data Transformation & Mapping Platforms have emerged specifically to fill the critical gap for implementation teams. They offer the necessary power for complex file transformations and validation, combined with the accessibility, reusability, and AI-driven efficiency that modern onboarding and migration workflows demand. Understanding how this category compares to others empowers you to make strategic technology choices.

By grasping the distinct landscape outlined in this guide, you can confidently identify the tool types that best align with your team's specific operational needs and technical capabilities, paving the way for smoother implementations, higher data quality, and ultimately, greater client satisfaction.

Ready to explore a platform purpose-built for implementation team challenges? Discover DataFlowMapper's capabilities.

Frequently Asked Questions

Can't I just use Python/Pandas for everything?

You can, but consider the total cost of ownership: developer dependency, significant maintenance challenges ("knowledge silos"), lack of UI for mapping/validation feedback, slower iteration cycles, and difficulty for non-coders to participate or troubleshoot. Modern platforms often provide the power of Python within a more manageable, collaborative, visual, and efficient framework, drastically reducing these overheads.

Are Modern Platforms just lightweight ETL tools?

While they share transformation power, their focus and architecture are different. They are optimized for the specific workflow of processing diverse *files* (CSV/Excel/JSON) common in client onboarding, featuring accessible visual logic builders, advanced validation engines tailored for implementation teams, integrated AI assistance, and rapid iteration capabilities, often without the heavy infrastructure and setup complexity of enterprise ETL. For a detailed breakdown, see our [ETL vs Importer vs Advanced Platform comparison](/blog/etl-vs-import-vs-advanced-data-onboarding-tools).

How secure are these Modern Platforms with client data?

Reputable platforms prioritize security with measures like encryption (at rest and in transit), granular access controls, audit logs, and compliance certifications (e.g., SOC 2). Always review a specific vendor's security documentation and practices. DataFlowMapper, for instance, is built with security best practices at its core (see our [Security Page](/security)).

Can these tools handle large files?

Capabilities vary, but modern platforms are generally designed to handle file sizes common in implementation scenarios (often millions of rows, GBs of data), far exceeding spreadsheet limits and often rivaling script performance for typical onboarding tasks. Check specific platform specifications and architecture (e.g., streaming processing).
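
As a rough illustration of what streaming processing means, the sketch below reads a CSV one row at a time and hands rows onward in fixed-size batches, so memory use does not grow with file size. The function names, batch size, and in-memory sample file are assumptions for the example, not a description of any platform's internals.

```python
import csv
import io


def stream_valid_rows(file_obj, required):
    """Yield rows one at a time, skipping rows with a missing required value."""
    for row in csv.DictReader(file_obj):
        if all((row.get(column) or "").strip() for column in required):
            yield row


def count_in_batches(rows, batch_size):
    """Consume the stream in fixed-size batches, as a loader might."""
    batch, batch_sizes = [], []
    for row in rows:
        batch.append(row)
        if len(batch) == batch_size:
            batch_sizes.append(len(batch))
            batch = []  # release the finished batch before reading more
    if batch:
        batch_sizes.append(len(batch))
    return batch_sizes


# An in-memory file stands in for a large upload; a real script would pass
# open(path, newline="") instead.
lines = "".join(f"{i},user{i}@example.com\n" for i in range(10))
big_file = io.StringIO("id,email\n" + lines + "11,\n")

batch_sizes = count_in_batches(stream_valid_rows(big_file, ["id", "email"]), batch_size=4)
print(batch_sizes)
```

Only one batch is held in memory at a time, which is why this pattern scales past the point where a spreadsheet or a load-everything script would struggle.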

How does AI actually help in data mapping and transformation?

AI significantly accelerates the process by: analyzing source/target schemas to **suggest likely mappings**; translating natural language descriptions into ready-to-use **transformation logic (code or visual)**; identifying data patterns for cleaning/validation; and even **orchestrating the entire mapping process** based on high-level requirements. This reduces tedious manual work and speeds up configuration dramatically. [Learn more about AI data mapping](/blog/ai-powered-data-mapping).
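
To make the shape of a mapping suggestion concrete, here is a small sketch that takes source columns and target fields and proposes pairs. It is not AI: it uses plain string similarity from Python's `difflib` as a stand-in, and the column names and cutoff are assumptions chosen for the example.

```python
import difflib


def normalize(name):
    """Lowercase and treat underscores and hyphens as spaces before comparing."""
    return name.lower().replace("_", " ").replace("-", " ").strip()


def suggest_mappings(source_columns, target_fields, cutoff=0.6):
    """Suggest a target field for each source column, or None if nothing is close."""
    normalized_targets = {normalize(field): field for field in target_fields}
    suggestions = {}
    for column in source_columns:
        matches = difflib.get_close_matches(
            normalize(column), list(normalized_targets), n=1, cutoff=cutoff
        )
        suggestions[column] = normalized_targets[matches[0]] if matches else None
    return suggestions


suggestions = suggest_mappings(
    ["First Name", "E-mail", "Zip"],
    ["first_name", "email", "postal_code"],
)
print(suggestions)
```

The heuristic pairs `First Name` and `E-mail` but leaves `Zip` unmatched, because linking it to `postal_code` requires knowing what the words mean rather than how they are spelled. Closing that gap is the kind of work the answer above attributes to AI-assisted mapping.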

We're using a simple importer like Flatfile/OneSchema, but struggle with transformation rules. How do modern platforms help?

Simple importers excel at the end-user upload experience but intentionally limit transformation capabilities. If you need to apply **complex business logic** (e.g., conditional calculations, data restructuring, lookups) after the upload as part of the implementation process, you need a different tool category. Modern platforms act as a powerful **Flatfile alternative for complex logic** or **OneSchema alternative for transformations**. They provide dedicated visual and code-based environments for implementation teams to build, manage, and reuse these intricate transformation rules.

How can we speed up client data onboarding and automate transformation rules?

Modern platforms tackle this directly. **AI data mapping** features suggest mappings and can even generate transformation logic from plain English, drastically reducing setup time. Reusable templates allow you to save complex mapping and validation configurations, making subsequent onboardings much faster. The visual nature allows more team members to participate, reducing developer bottlenecks. This combination helps automate data transformation rules and significantly speed up **client data onboarding** compared to manual methods or scripting.
