What is a CSV File? The Ultimate Guide for Data Transformation

Mastering CSV Files: The Ultimate Guide for Data Transformation, Onboarding & Migration

Introduction

In the vast landscape of data exchange, data migration, and data onboarding, few formats are as ubiquitous yet deceptively complex as the Comma-Separated Values (CSV) file. From simple contact lists to intricate datasets driving critical software data migration projects, the humble '.csv' extension is everywhere. But its apparent simplicity masks significant challenges. While easy to generate, reliably consuming, validating, and transforming CSV files—especially when dealing with diverse client data or legacy systems—can quickly become a major bottleneck for implementation teams and data professionals.

This comprehensive guide aims to be the definitive resource on CSV files, moving far beyond a basic csv file definition. We won't just cover what a CSV is, but delve deep into why handling them effectively is often difficult and how to navigate the complexities. We'll explore the critical nuances of delimiters, quoting, encoding, and line endings, dissect common pitfalls, and critically examine the multi-stage process of importing CSV data, focusing on the often-underestimated hurdles in data mapping, data validation, and data transformation. Whether you're executing a complex data migration process, managing customer data onboarding, or simply trying to make sense of exported reports, mastering CSV intricacies is essential.

- Key Takeaways:

- CSV is a text format for tabular data, but its lack of strict standards leads to variations (delimiters, encoding, quoting) demanding robust parsing.

- Real-world CSV import is a multi-stage process: Parsing, Data Mapping, Data Validation, and Data Transformation are crucial steps beyond simple file opening.

- Significant challenges lie in data validation automation (enforcing complex business rules, ensuring referential integrity via lookups) and flexible data transformation (cleaning, enrichment, restructuring).

- Common pitfalls like encoding errors (Mojibake), delimiter conflicts, quoting mistakes, and spreadsheet auto-formatting issues can corrupt data silently.

- Successfully managing data migration, data conversion, and customer data validation often requires specialized data onboarding solutions, data transformation platforms, or ETL tools for data migration capable of handling these complexities, far exceeding the capabilities of basic csv upload tools.

- Understanding data onboarding best practices for CSV handling is vital for efficiency and data quality.

What is a CSV file? (The Deep Dive)

At its core, a CSV file is a plain text file designed to store tabular data—information organized neatly into rows and columns. Each line in the file typically represents a single row (or record), and within that row, individual values (or fields) are separated by a specific character known as the delimiter.

Structure Breakdown:

- Rows (Records): Each line of text corresponds to one record or row in the table. Line breaks (special invisible characters) separate one row from the next.

- Columns (Fields): Within each row, individual data points (fields) are separated by the delimiter character.

Crucial Details – Where Simplicity Ends and Complexity Begins:

The elegance of the CSV concept lies in its simplicity. However, the absence of a single, rigidly enforced universal standard introduces significant variations. Understanding these nuances is critical for anyone building or using a csv importer with etl capabilities or performing any serious data work:

-

Delimiters (The Separator):

- Comma (','): The namesake and most frequently encountered delimiter.

- Tab ('\t'): Common enough that files using tabs are often called TSV (Tab-Separated Values).

- Semicolon (';'): Often used in European locales where the comma serves as the decimal separator in numbers.

- Pipe ('|'): Sometimes chosen specifically to avoid conflicts when commas are expected within the data fields themselves.

- Custom Delimiters: Less common, but technically possible (e.g., '~', '^').

- The Challenge: The core problem arises when the delimiter character itself needs to appear within a data field (e.g., an address like "123 Main St, Anytown" in a comma-delimited file). This ambiguity necessitates quoting mechanisms and poses a challenge for naive data import tools. Robust parsing requires either explicit delimiter specification or sophisticated detection algorithms.

-

Quoting (Handling Ambiguity):

- Purpose: To enclose fields that contain the delimiter character, line breaks, or leading/trailing whitespace that must be preserved.

- Standard Convention: Double quotes ('"') are the most widely accepted quoting character. A field like '"Doe, John"' clearly indicates the comma is part of the name, not a field separator.

- Escaping Quotes Within Quotes: If a double quote character needs to appear inside a quoted field, the standard practice (defined in RFC 4180) is to double it up. For instance, the text 'He said, "Hello"' would be represented in a CSV field as '"He said, ""Hello"""'.

- Variations & Pitfalls: Not all CSV generators or parsers adhere strictly to this standard. Some might use backslashes ('\') for escaping (non-standard), while others might fail to quote fields correctly, leading to broken rows or data corruption during import. Inconsistent quoting within a single file is a major source of parsing errors.

- Raw Example (Illustrating Quoting):

ID,Name,Address,Notes 1,"Doe, John","123 Main St, Anytown","Standard case" 2,"Smith, Jane","456 ""Oak"" Ave","Address contains escaped quotes" 3,"O'Malley, Pat","789 Elm St","Note with a line break"

-

Headers (Column Names):

- Presence: Most CSV files include a header row as the very first line. This row provides human-readable names for the columns.

- Significance: Headers are crucial context, defining the meaning of the data in each subsequent row. They are fundamental for the data mapping stage of any import process.

- Challenges: Headers aren't guaranteed. Some files lack them entirely, requiring external documentation or prior knowledge of the schema. Headers can be inconsistent across files from the same source (e.g., 'Email' vs. 'email_address' vs. 'E-Mail'). They might contain spaces, special characters, or vary in case ('UserID' vs 'user_id'), complicating automated mapping. Relying on a specific data mapping tool that handles these inconsistencies is often necessary.

-

Encoding (Representing Characters): (Critical Section)

- What it is: Encoding is the system used to translate characters (letters, numbers, symbols like '€' or 'ü') into the bytes stored in the file.

- Common Encodings & Their Issues:

- ASCII: The most basic, covering only English letters, numbers, and common symbols. Cannot represent accented characters (like 'é', 'ñ') or characters from non-Latin alphabets (like Cyrillic, Kanji). Files saved as ASCII will corrupt such characters.

- UTF-8: The dominant standard for the web and modern systems. Highly recommended as it can represent virtually any character from any language. Potential Pitfall: Sometimes includes an invisible Byte Order Mark (BOM) at the very beginning of the file, which can confuse some older parsers or scripts not expecting it. UTF-8 without BOM is often the safest bet for broad compatibility.

- Latin-1 (ISO-8859-1): Covers most Western European languages but lacks symbols like the Euro (€) and characters from Eastern European or non-European languages.

- Windows-1252: A common Microsoft extension of Latin-1, frequently encountered on older Windows systems. Adds some characters like the Euro sign but still has limited language support compared to UTF-8.

- The Consequence of Mismatch: This is one of the most frequent and frustrating CSV problems. If a file is saved using one encoding (e.g., UTF-8 containing 'Jörg') but read using another incompatible encoding (e.g., ASCII or Latin-1), characters outside the reader's supported set become garbled nonsense. This is often called Mojibake (e.g., 'Jörg' might appear as 'Jörg'). This issue plagues data conversion efforts if not handled correctly.

- Detection & Specification: Reliably auto-detecting encoding is notoriously difficult. The best practice is to know the encoding of the source file or, ideally, to explicitly specify the encoding (preferably UTF-8) when both writing and reading CSVs.

-

Line Endings (Marking Row Breaks):

- What they are: Invisible control characters signaling the end of one row and the beginning of the next.

- Variations:

- CRLF ('\r\n'): Carriage Return followed by Line Feed. The standard on Windows.

- LF ('\n'): Line Feed. The standard on Unix, Linux, and modern macOS.

- CR ('\r'): Carriage Return. Used in classic Mac OS (pre-OS X), now rare.

- Potential Issues: While most modern parsers and tools handle different line endings gracefully, older or very strict tools might fail if they encounter unexpected line endings. Inconsistent line endings within the same file (e.g., some rows ending in LF, others in CRLF) can also cause parsing failures.

RFC 4180: The "Standard" That Isn't Always Followed

RFC 4180 is a document that attempts to standardize the CSV format. It recommends comma delimiters, CRLF line endings, and the double-quote ('""') mechanism for escaping quotes within fields. However, it leaves some aspects open (like handling of headers) and, more importantly, real-world CSV files frequently deviate from RFC 4180. Relying solely on strict RFC 4180 compliance when parsing arbitrary CSVs encountered during data migration or data onboarding is often a recipe for failure. Always anticipate and be prepared to handle variations.

Examples Illustrating Variations:

- Simple CSV (Comma, CRLF, UTF-8 - Raw Text):

ID,FirstName,LastName 1,John,Doe 2,Jane,Smith - Quoted CSV (Comma, CRLF, UTF-8 - Raw Text):

ID,Name,Address 1,"Doe, John","123 Main St, Anytown" 2,"Smith, Jane","456 ""Oak"" Ave" - Semicolon Delimited, UTF-8 (Raw Text):

ID;Name;City 1;Jörg;München 2;François;Paris

Mastering these fundamental structural elements and their potential variations is the essential first step toward effectively processing CSV files in any demanding data onboarding process or migration project.

Where are CSV Files Used? (Applications Across Industries)

The inherent simplicity and text-based nature of CSV have cemented its role across an incredibly diverse spectrum of software applications, serving as a common format for both data import and export.

- Spreadsheet Software:

- Examples: Microsoft Excel, Google Sheets, LibreOffice Calc, Apple Numbers.

- Use: The default tools for many users to open, view, manually edit, and create CSVs via a familiar tabular interface.

- Caveats: Prone to data corruption via auto-formatting (see Pitfalls section). Struggle with large file sizes common in enterprise scenarios. Often guess encoding/delimiters incorrectly. Not suitable for automated, complex data transformation or robust data validation automation.

- Databases:

- Examples: PostgreSQL, MySQL, SQL Server, Oracle, SQLite, Data Warehouses.

- Use: Virtually all database systems provide mechanisms to bulk import data from CSV files (e.g., SQL 'COPY FROM', 'LOAD DATA INFILE', GUI wizards) and to export query results into CSV format.

- Context: Fundamental for initial data loading, periodic updates, or extracting data for analysis or reporting – core activities in etl data migration.

- Business Systems (CRM, ERP, Accounting, Marketing):

- Examples: Salesforce, HubSpot, SAP, NetSuite, QuickBooks, Xero, Marketo, Mailchimp.

- Use: CSV is frequently the format of choice for bulk operations: uploading/updating contact lists, product catalogs, sales figures, financial transactions, or exporting custom reports. It often acts as the essential bridge for moving data between disparate business systems, making reliable CSV handling crucial for crm data migration tools.

- Data Analysis & Business Intelligence (BI) Tools:

- Examples: Python (with Pandas, Polars libraries), R, Tableau, Power BI, Qlik Sense, Alteryx.

- Use: CSV remains a primary input format for data analysis and visualization. These tools offer powerful capabilities for parsing, cleaning, transforming, analyzing, and visualizing CSV data, often forming part of a larger data and ai platform.

- Programming Languages & Development:

- Examples: Python ('csv' module, Pandas), JavaScript (Node.js libraries like 'csv-parser'), Java (Apache Commons CSV), C#, Ruby, PHP.

- Use: Standard libraries and extensive third-party packages provide developers with robust tools to programmatically read, write, and manipulate CSV files, offering fine-grained control essential for building custom applications or scripts.

- Data Integration & ETL (Extract, Transform, Load) Tools:

- Examples: Informatica, Talend, Apache NiFi, AWS Glue, Azure Data Factory, and specialized data onboarding platforms like DataFlowMapper.

- Use: These tools are purpose-built for orchestrating data migration and data transformation workflows between various systems. CSV is a cornerstone source and target format. Advanced ETL tools for data migration provide sophisticated features specifically designed to handle CSV complexities like schema detection/evolution, complex validation rules, intricate transformations, and automated data mapping automation. They often represent the best data migration tools for handling challenging, large-scale CSV imports.

- Client Data Onboarding:

- Context: A critical business process where companies receive data from new customers (e.g., user lists, product details, historical transactions) typically in CSV format, needing to load it into their own platform. This process invariably requires significant data cleaning, rigorous validation against the platform's rules (customer data validation), and often complex transformation to match the target schema. This is a primary focus for dedicated data onboarding tools and data onboarding solutions.

- System Migrations & Legacy Conversion:

- Context: Extracting data from outdated (legacy) systems, frequently into CSV format, as an intermediary step before cleaning, transforming, and importing it into modern replacement systems. This often involves specialized legacy conversion tools or requires a well-defined data migration methodology heavily reliant on robust CSV processing.

- Other Diverse Use Cases:

- Data Warehousing: Loading operational data feeds.

- Report Generation: Exporting data from applications lacking sophisticated reporting APIs.

- Configuration Files: For simpler applications or settings.

- Scientific & IoT Data Logging: Storing time-series data or experimental results.

This widespread adoption means professionals involved in data migration or onboarding data will inevitably encounter CSVs generated by countless systems, each potentially introducing unique quirks and interpretations of the format, demanding adaptable and powerful data transformation software.

Benefits and Drawbacks of CSV Files (A Balanced Perspective)

CSV's enduring popularity is understandable, but acknowledging its limitations is crucial, especially when evaluating it for complex tasks like data onboarding or large-scale migrations.

Benefits:

- Simplicity (Superficial): The basic row/column, delimiter-separated structure is easy to grasp conceptually.

- Human-Readability: For small, simple files without complex quoting, the raw content can be easily inspected in a basic text editor.

- High Compatibility: As plain text, CSV files are largely independent of operating systems and applications. Most tools that handle data can import or export CSV in some form.

- Compactness: Compared to more verbose formats like XML or JSON, CSVs generally consume less disk space for the same amount of flat, tabular data.

- Ease of Generation (Simple Cases): Generating basic CSVs programmatically or via spreadsheet "Save As" is straightforward for simple datasets.

Drawbacks:

- Lack of Strict Data Typing: CSV has no built-in mechanism to define whether a column should be treated as text, a number, a date, or boolean. Everything is initially text. The consuming application must infer or be explicitly configured, leading to frequent interpretation errors (e.g., '0123' becoming the number 123, '5/10' becoming a date). This significantly complicates reliable data validation.

- No Standard for Metadata/Schema: There's no standard way to embed metadata (like data types, descriptions, constraints, relationships between columns) within the CSV file itself. The schema is implicit or requires separate documentation, making automated processing fragile.

- Ambiguity & Lack of Standardization: As detailed extensively, variations in delimiters, quoting rules, encoding, and line endings create significant ambiguity. Parsing reliably requires guessing, user configuration, or sophisticated detection logic, a major hurdle for basic csv upload tools.

- Poor Handling of Hierarchical/Nested Data: CSV is inherently flat (two-dimensional). Representing nested objects or parent-child relationships (like customer orders with multiple line items) is awkward, often requiring denormalization or complex, non-standard workarounds. JSON or XML are far better suited for such structures.

- Scalability Challenges: While text-based, parsing very large CSV files (gigabytes or more) can overwhelm the memory and CPU resources of simple tools or naive scripts. Spreadsheet applications frequently crash. Processing large files efficiently requires specialized techniques (streaming, chunking) often found in dedicated etl tools for data migration.

- Inadequate for Complex Validation & Transformation: The format itself cannot enforce business rules (e.g., "discount must be < 20% if customer type is 'Retail'") or perform data manipulations (e.g., "combine FirstName and LastName into FullName"). These critical steps must occur after parsing, demanding external logic often provided by data transformation platforms or data validation automation tools.

- Potential for Inconsistencies: It's easy to generate CSVs with subtle errors like inconsistent quoting, missing fields in some rows, or mixed data types within a column, making robust, automated parsing a significant challenge.

In essence, CSV often serves as the "lowest common denominator" for data exchange—widely supported but lacking the structural richness, data integrity features, and standardization needed for complex, reliable data workflows without the aid of sophisticated external tooling.

How to Open and Edit a CSV File (Practical Guide & Critical Pitfalls)

While seemingly straightforward, opening and editing CSVs requires caution to avoid inadvertently corrupting data, especially when dealing with files destined for data migration or onboarding.

-

Spreadsheet Software (Excel, Google Sheets, LibreOffice Calc):

- Pros: Provides a familiar, user-friendly grid view. Easy for manual sorting, filtering, and simple edits.

- Cons (CRITICAL PITFALLS):

- Silent Data Corruption via Auto-Formatting: Excel, in particular, is notorious for automatically changing data upon opening. It frequently drops leading zeros from identifiers (e.g., '00789' becomes '789'), converts long numeric strings (like IDs or credit card numbers) to imprecise scientific notation (e.g., '1234567890123456' becomes '1.23E+15'), or misinterprets text/numbers as dates ('10-12' might become '12-Oct'). This corruption is often silent and irreversible once saved.

- Incorrect Encoding/Delimiter Guessing: Spreadsheets often try to guess the file's encoding and delimiter. If they guess wrong (a common occurrence), you'll see garbled text (Mojibake) or data incorrectly split across columns.

- Performance Limitations: Opening very large CSV files (common in enterprise data migration scenarios) can cause spreadsheets to become extremely slow, unresponsive, or crash entirely.

- Hiding Problematic Characters: The graphical interface can obscure subtle issues like non-standard whitespace or invisible control characters that might cause failures in downstream systems.

- Mitigation Strategy (Essential): NEVER just double-click a CSV file to open it in Excel if data integrity matters. Instead, use the dedicated data import feature:

- Excel: Go to 'Data' > 'Get Data' > 'From File' > 'From Text/CSV'.

- Google Sheets: Go to 'File' > 'Import' > 'Upload'.

- These import wizards allow you to explicitly specify the file origin (encoding), delimiter, and—most importantly—to preview the data and set the data type for each column. Set columns containing IDs, ZIP codes, phone numbers, or any numeric-looking text that must not be treated as a number to "Text" during import to prevent auto-formatting corruption.

-

Text Editors (Notepad++, VS Code, Sublime Text, BBEdit, Vim, etc.):

- Pros: Show the raw, unadulterated content of the file, exactly as it is stored. Essential for diagnosing parsing problems caused by incorrect delimiters, faulty quoting, invisible characters, or encoding issues. Offer complete control over edits without any risk of auto-formatting. Many support syntax highlighting for CSV/TSV and allow explicit setting/conversion of file encoding.

- Cons: Not designed for viewing or manipulating large amounts of data in a tabular format. Editing requires understanding the raw CSV structure (commas, quotes, etc.).

-

Command-Line Tools (Linux/macOS/Windows Subsystem for Linux/Git Bash):

- Pros: Extremely efficient for quickly inspecting parts of large files without loading the whole thing (e.g., 'head' to see the first few lines, 'tail' for the last few). Powerful for searching ('grep'), counting lines/words/characters ('wc'), or performing text manipulations using tools like 'awk', 'sed', or 'csvkit'. Excellent for scripting basic checks or transformations.

- Cons: Requires familiarity with the command line interface. Not suitable for complex, interactive visual editing.

-

Programmatic Access (Python with Pandas/Polars, R, etc.):

- Pros: Offers the ultimate control and flexibility for reading, writing, validating, and transforming CSV data, especially within automated workflows, complex analyses, or custom application development. Libraries handle many parsing nuances automatically.

- Cons: Requires programming skills.

Recommendations:

- For quick viewing or very simple edits where data integrity is paramount: Always use the "Import Text/CSV" wizard in spreadsheet software and explicitly set column types.

- For diagnosing structural problems, encoding issues, or making precise edits: Use a good text editor.

- For automated processing, complex validation/transformation, or handling large files: Use programming languages/libraries or dedicated data onboarding/ETL tools.

How to Create a CSV File (Best Practices for Reliability)

Generating clean, predictable, and reliable CSV files is just as important as parsing them correctly, especially when providing data for external systems or data onboarding processes.

-

From Spreadsheets ("Save As" / "Download As"):

- Method: Use the built-in export functions ('File > Save As > CSV' or 'File > Download > Comma-separated values (.csv)').

- Limitations & Risks: You often have very limited control over crucial output parameters.

- Delimiter: Usually fixed to comma, cannot easily specify tab, pipe, etc.

- Encoding: Excel, particularly on Windows, often defaults to older encodings like Windows-1252 or adds an unnecessary UTF-8 BOM, causing downstream compatibility issues. Google Sheets generally offers better encoding control (UTF-8 is usually the default).

- Quoting: Minimal control over quoting rules (e.g., cannot easily force quoting of all fields).

- This method is often insufficient for generating CSVs that meet specific requirements for reliable data exchange.

-

Programmatically (Recommended for Control & Reliability):

- Method: Utilize dedicated CSV writing libraries available in your chosen programming language (e.g., Python's built-in 'csv' module, or the 'to_csv' method in libraries like Pandas or Polars).

- Advantages: Provides explicit, fine-grained control over all critical aspects of the output format:

- Delimiter: Specify comma, tab, pipe, semicolon, or any custom character.

- Quoting: Define precisely when fields should be quoted (e.g., 'QUOTE_MINIMAL' - only when necessary due to delimiters/quotes/line breaks; 'QUOTE_NONNUMERIC' - quote all text fields; 'QUOTE_ALL' - quote every field). Specify the quote character ('"' is standard) and the escape mechanism ('""' is standard).

- Encoding: Explicitly set the encoding to 'utf-8' (without BOM) for maximum compatibility with modern systems and correct handling of international characters.

- Line Endings: Specify '\n' (LF) or '\r\n' (CRLF) if a specific format is required (LF is generally more cross-platform compatible). Libraries often handle this automatically based on the OS or allow specification.

- Header: Easily include or exclude the header row.

- Example (Python using built-in 'csv' module):

import csv data_to_write = [ {'ID': 1, 'Name': 'Jörg Müller', 'Notes': 'Contains comma, and non-ASCII chars'}, {'ID': 2, 'Name': 'Jane "Quick" Smith', 'Notes': 'Includes "quotes"'} ] headers = ['ID', 'Name', 'Notes'] # Best practice: Use 'utf-8' encoding, standard quoting, specify newline='' with open('output_reliable.csv', 'w', newline='', encoding='utf-8') as csvfile: # QUOTE_MINIMAL is usually efficient and standard writer = csv.DictWriter(csvfile, fieldnames=headers, delimiter=',', quoting=csv.QUOTE_MINIMAL) writer.writeheader() writer.writerows(data_to_write) -

Exporting from Databases or Business Systems:

- Method: Use the native export functionalities provided by the database or application.

- Considerations: Carefully examine the available export options. Can you configure the delimiter, encoding (look for UTF-8!), and quoting rules? Always test the output file rigorously to ensure it meets the requirements of the intended consuming system or process. Don't assume the defaults are optimal.

Best Practices Checklist for Creating Reliable CSVs:

- Use UTF-8 Encoding: The gold standard for compatibility. Avoid the BOM unless explicitly required by a specific (likely older) target system.

- Be Consistent: Use the same delimiter, quoting strategy, and line endings throughout the entire file.

- Quote Appropriately & Correctly: Use standard double quotes ('"') to enclose fields containing the delimiter, internal quotes, or line breaks. Escape internal double quotes by doubling them ('""'). 'QUOTE_MINIMAL' is often the best balance between safety and file size.

- Include a Clear & Consistent Header Row: Use descriptive, unambiguous column names. Avoid special characters, excessive spaces, or leading/trailing spaces in headers. Keep header names consistent across different exports if possible.

- Document the Format: When sharing CSVs, provide clear documentation specifying the exact delimiter, encoding (confirm UTF-8!), quoting rules used, and definitions/expected data types for each column. Don't force consumers to guess – this is a key part of data onboarding best practices.

- Handle Null/Empty Values Consistently: Represent empty or null values simply as two consecutive delimiters (e.g., 'field1,,field3') unless the target system specifically requires quoted empty strings ('""') or special markers like 'NULL' or 'N/A'. Avoid mixing representations.

Adhering to these practices dramatically increases the probability that your CSV files will be parsed correctly and reliably by downstream systems and data import tools.

The Real Challenge: Importing, Validating, and Transforming CSV Data

Successfully integrating CSV data into business systems—especially during critical processes like customer data onboarding, software data migration, or legacy conversion—is far more complex than just parsing delimiters and quotes. It's a multi-stage workflow where the real difficulties often lie in ensuring data quality and conforming data to target system requirements. This is where basic tools falter and the need for robust data onboarding platforms, data transformation platforms, or capable ETL tools for data migration becomes undeniable.

The Typical Multi-Stage Import Workflow:

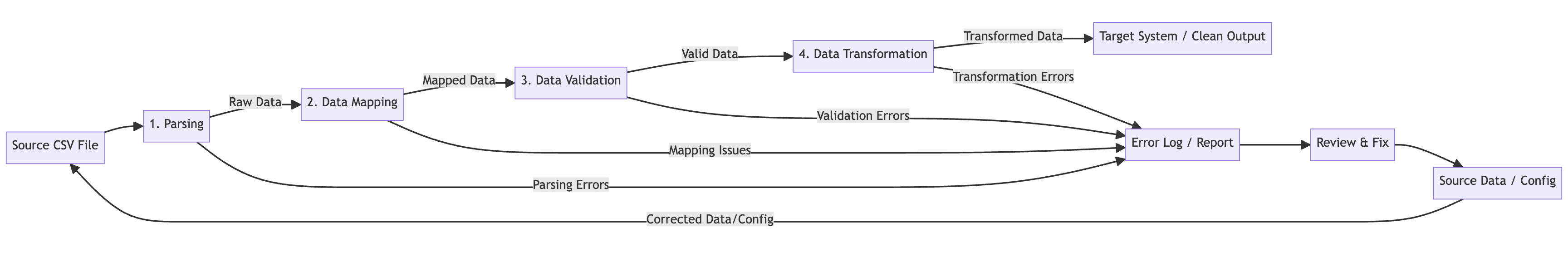

Diagram: The iterative process of importing CSV data, highlighting mapping, validation, transformation, and error handling loops.

-

Parsing:

- Goal: Correctly interpret the raw byte stream according to the file's specific structure (Encoding, Delimiter, Quoting, Line Endings, Headers).

- Challenges: Incorrect guessing of these parameters leads to immediate failure or silently corrupted data from the outset. Requires robust, configurable parsers or sophisticated detection heuristics often missing in simple scripts or basic tools.

-

Data Mapping:

- Goal: Associate columns from the source CSV file with their corresponding fields or columns in the target system (database table, application schema, API endpoint).

- Challenges:

- Header Ambiguity: Source headers ('Cust_FName', 'E-Mail') rarely match target fields ('FirstName', 'ContactEmail') exactly. Case sensitivity, spaces, and special characters add complexity.

- Missing/Extra Columns: Source files might lack data required by the target or contain irrelevant columns that need to be ignored.

- Manual Effort: For files with tens or hundreds of columns (common in enterprise data), manually creating and maintaining these mappings is incredibly tedious, time-consuming, and error-prone.

- Structural Mismatches: Mapping flat CSV data to potentially nested target structures (like JSON) requires specific logic.

- Modern Solutions: Effective data mapping tools are essential. Look for:

- Visual Interfaces: Drag-and-drop mapping simplifies the process.

- Fuzzy Matching/Suggestions: Tools that automatically suggest mappings based on header names and data patterns, even with slight variations.

- AI Data Mapping Tools: Leveraging AI to analyze source/target schemas and data can significantly accelerate mapping, providing high-confidence suggestions that users only need to review and approve/adjust. This drives data mapping automation.

- Handling Nested Structures: Support for defining mappings from flat sources to nested targets such as the JSON formats often required by modern APIs. This requires transformation logic and mapping capable of constructing these hierarchies, going beyond simple, flat column-to-column links.

-

Data Validation: (Crucial Expansion - The Data Quality Gatekeeper)

- Goal: Ensure the data not only parses correctly but also adheres to business rules, quality standards, and integrity constraints before it pollutes the target system. This goes far beyond basic format checks and is central to effective customer data validation.

- Essential Validation Types Often Missing in Basic Importers:

- Basic Checks: Data Type (Is 'Price' numeric? Is 'StartDate' a valid date?), Format (Is 'PostalCode' 'NNNNN' or 'A1A 1A1'? Is 'Email' syntactically valid?), Range ('Quantity' > 0?), Length ('ProductCode' = 8 chars?).

- List Lookups/Enumerations: Does the 'CountryCode' exist in an approved list ('US', 'CA', 'MX')? Does 'Status' belong to ['Pending', 'Shipped', 'Cancelled']?

- Cross-Field Consistency: Is the 'InvoiceDueDate' after the 'InvoiceDate'? Does the sum of 'ItemPrice' * 'Quantity' match the 'LineTotal'?

- Uniqueness Checks: Is this 'OrderNumber' already present in the target database? Is this 'EmailAddress' duplicated earlier within the same file?

- Referential Integrity (Critical & Complex): Does the 'CustomerID' listed in an orders CSV actually exist in the main customers table/API? Does the 'ProductID' exist in the product catalog? This often requires performing lookups against external data sources (databases, APIs, other files) during the validation step.

- Custom Business Logic: Implementing arbitrary rules specific to the business domain (e.g., "Discount percentage cannot exceed 15% for non-preferred customers located in California").

- The Problem: Basic csv importers or spreadsheet functions offer only the most rudimentary validation capabilities. They typically cannot perform external lookups, enforce complex cross-field rules, or handle custom business logic effectively.

- The Need: True data validation automation requires a powerful validation engine. Look for tools that allow defining validation rules through:

- Configuration: Setting types, ranges, patterns (regex).

- Visual Logic Builders: Creating conditional rules without code.

- Scripting: Allowing custom code (e.g., Python) for maximum flexibility.

- Lookup Capabilities: The ability to query external databases or APIs during validation is paramount for checking referential integrity and performing complex checks.

-

Data Transformation: (Crucial Expansion - Shaping Data for Its Destination)

- Goal: Modify, clean, enrich, reformat, and restructure the incoming CSV data to precisely match the requirements and schema of the target system. This is often tightly coupled with validation.

- Common Transformation Needs in Data Migration/Onboarding:

- Cleaning: Trimming leading/trailing whitespace, converting text case (UPPER, lower, Proper), replacing special characters, standardizing inconsistent values (e.g., 'USA', 'U.S.A.', 'United States' -> 'US'). Often features of data cleaning software.

- Type Conversion: Explicitly converting text representations ('$1,234.56', '10/05/2024') into target data types (numeric, date/datetime).

- Calculations & Derived Fields: Creating new fields based on existing ones (e.g., 'FullName = FirstName + ' ' + LastName', 'Margin = (SalePrice - Cost) / SalePrice', 'Age = CurrentDate - BirthDate').

- Restructuring: Splitting a single column ('City, State Zip' -> 'City', 'State', 'Zip'), combining multiple columns, basic pivoting (rows to columns) or unpivoting (columns to rows).

- Conditional Logic: Applying different transformation rules based on data values (e.g., 'IF Country == 'US' THEN FormatPhoneNumber(Phone) ELSE IF Country == 'UK' THEN FormatUKPhone(Phone) ELSE Phone').

- Data Enrichment (Requires Lookups): Augmenting the data by fetching additional information from external sources based on a key in the CSV. Examples: Looking up 'Sales Region' based on 'Zip Code' via an API; fetching 'Product Category' from a database based on 'SKU'; adding 'Account Manager Email' based on 'CustomerID'.

- The Problem: Simple import tools or spreadsheets offer minimal transformation capabilities. Performing these tasks manually or with basic scripts is extremely time-consuming, error-prone, and difficult to maintain or reuse across different files or projects.

- The Need: Requires flexible and powerful data transformation software. Key features to look for:

- Rich Function Library: Built-in functions for common cleaning, math, date, and text operations.

- No Code Data Transformation Interfaces: Visual builders (drag-and-drop, flowcharts) make defining transformations accessible without programming, often making them the easiest data transformation software for common tasks.

- Scripting Support: The ability to inject custom code (e.g., Python) for highly complex or unique logic provides ultimate flexibility.

- Lookup Capabilities: The ability to query external databases or APIs during the transformation process is critical for data enrichment.

-

Error Handling & Reporting:

- Goal: Define a clear strategy for handling rows that fail parsing, validation, or transformation, and provide actionable feedback to the user.

- Strategies: Reject the entire file on first error; skip invalid rows and process valid ones; log errors comprehensively; quarantine failed rows for later review and correction.

- Importance: Vague error messages ("Error on line 50") are useless. Effective error handling provides specific details: row number, column name/index, the problematic value, and a clear description of why it failed (e.g., "Validation failed: Email format invalid", "Transformation failed: Lookup for ProductID 'XYZ' returned no results"). This allows users to efficiently diagnose and fix issues in the source data or the import configuration.

Connecting the Dots: Why Specialized Tools Are Needed

Manually performing these intricate mapping, validation, transformation, and error handling steps using spreadsheets or basic scripts is incredibly inefficient, prone to errors, and lacks repeatability. This is a major source of frustration and delay for implementation teams handling regular client data onboarding or executing large-scale data migration projects. The inherent limitations of the CSV format itself, combined with the complexity of real-world business rules and data quality issues, clearly demonstrate the value proposition of dedicated data onboarding solutions, comprehensive data transformation platforms, or robust ETL tools for data migration. These tools are specifically designed to automate and streamline these challenging stages, providing the necessary power and flexibility that basic tools lack.

Common CSV Pitfalls In-Depth (Avoiding Costly Mistakes)

Being aware of these frequent issues during data conversion or import can save countless hours of debugging and prevent data corruption:

- Encoding Errors (Mojibake):

- Problem: Text appears garbled (e.g., 'Stück' becomes 'Stück', '€' becomes '€') because the file was saved in one encoding (like UTF-8) but read using an incompatible one (like Latin-1 or Windows-1252).

- Solution: Know or explicitly specify the encoding! When creating CSVs, always save as UTF-8 (preferably without BOM). When reading, configure your tool or script to interpret the file as UTF-8 first. If errors persist, try common alternatives (Latin-1, Windows-1252) based on the suspected source, but aim for UTF-8 as the standard. Use tools (text editors, import wizards, libraries) that allow explicit encoding control.

- Delimiter Conflicts:

- Problem: The character used as the delimiter (e.g., comma) also appears naturally within data fields that haven't been properly quoted (e.g., '123 Main Street, Anytown' in a comma-delimited file causes the row structure to break).

- Solution: Ensure data providers correctly quote fields containing the delimiter using standard methods ('"'). When parsing, use robust libraries or tools that correctly handle quoted fields. If you control generation and commas are frequent in your data, consider using a less common delimiter like pipe ('|') or tab ('\t'), but ensure the consuming system can handle it. Always specify the delimiter explicitly during import.

- Quoting/Escaping Mistakes:

- Problem: Unbalanced double quotes (an odd number in a row), incorrect escaping of internal quotes (e.g., using '\"' instead of the standard '""'), or failure to quote fields that contain line breaks. These errors often lead to catastrophic parsing failures where rows get merged or truncated.

- Solution: Adhere strictly to standard quoting ('"') and escaping ('""') when generating CSVs. Use linters or validators if possible. When parsing, rely on mature libraries or dedicated tools designed to handle standard CSV quoting rules robustly, including multi-line fields.

- Header Inconsistencies:

- Problem: Variations in header names across files ('UserID' vs 'User ID' vs 'user_id'), unexpected characters or leading/trailing spaces in headers, or completely missing headers. This breaks automated data mapping.

- Solution: Pre-process headers if necessary (trim whitespace, standardize case, remove special characters). Use mapping tools that offer flexible matching options (case-insensitive, alias mapping, fuzzy matching). If headers might be absent, ensure your process can work with column indices based on a well-defined, documented schema.

- Data Type Interpretation Errors (Especially in Spreadsheets):

- Problem: Software (especially Excel) silently auto-formats data, corrupting it: leading zeros vanish ('00123' -> '123'), long numbers become scientific notation ('1.23E+15'), text gets misinterpreted as dates ('May-10' -> '10-May-YYYY').

- Solution: Crucially, use "Import Text/CSV" wizards in spreadsheets and explicitly define columns containing such sensitive data as "Text". In programmatic parsing, read all data as strings initially, then perform explicit, careful type conversions with robust error handling after initial parsing.

- Large File Performance Bottlenecks:

- Problem: Attempting to load massive CSV files entirely into memory causes applications (especially spreadsheets or naive scripts) to crash or become unusably slow.

- Solution: Process files in chunks or stream them row-by-row. Use tools and libraries specifically designed for handling large datasets (e.g., Pandas with 'chunksize', Polars, command-line tools like 'awk'/'sed', database bulk loading utilities, dedicated ETL tools). Avoid loading the entire file into memory at once.

- Inconsistent Line Endings:

- Problem: Mixing Windows-style CRLF ('\r\n') and Unix-style LF ('\n') line endings within the same file can confuse some simpler parsers, leading to errors or incorrectly merged rows.

- Solution: Use modern parsers/tools that typically handle mixed line endings gracefully (often by normalizing them internally). If necessary, pre-process the file to standardize line endings (e.g., using tools like 'dos2unix'/'unix2dos' or features in good text editors).

- Security Risks (CSV Injection):

- Problem: If CSV data is intended to be opened directly in spreadsheet software, fields starting with characters like '=', '+', '-', or '@' can be interpreted by the spreadsheet as formulas or commands. Maliciously crafted fields could potentially execute arbitrary code, leak data, or perform other harmful actions when the file is opened.

- Solution: Sanitize data before generating CSVs destined for direct spreadsheet use. A common technique is to prepend a single quote (''') to any field starting with these problematic characters; spreadsheets typically treat such fields as literal text while hiding the leading quote. Always be cautious when opening CSV files from untrusted sources directly in spreadsheet applications.

Anticipating and systematically addressing these pitfalls is essential for building robust, reliable software data migration and data onboarding workflows.

CSV vs. Other Formats: Choosing the Right Tool for the Job

While CSV is a workhorse, it's not always the optimal choice. Understanding the strengths and weaknesses of alternatives helps in selecting the most appropriate format based on the specific requirements of your data migration methodology or integration task.

| Feature | CSV (Comma-Separated) | Excel (.xlsx) | JSON (JavaScript Object Notation) | XML (Extensible Markup Language) | TSV (Tab-Separated) | Fixed-Width | Parquet / Avro |

|---|---|---|---|---|---|---|---|

| Structure | Flat Tabular | Rich (Formatting, Formulas, Multi-sheet) | Hierarchical (Key-Value Pairs, Arrays) | Hierarchical (Tags, Attributes) | Flat Tabular | Flat Tabular | Columnar / Row-based (Schema enforced) |

| Data Types | Implicit (Text Only) | Explicit (Number, Date, Text, etc.) | Explicit (String, Number, Boolean, Array, Object, Null) | Implicit (Text, defined by Schema) | Implicit (Text Only) | Implicit (Text, defined by position) | Explicit (Defined in Schema) |

| Schema | None (Implicit/External Doc) | Embedded | None (Implicit/External Doc/Schema) | Optional/Required (DTD, XSD) | None (Implicit/External Doc) | External Definition Required | Required & Embedded |

| Human Readable | Yes (Simple data) | No (Binary) | Yes | Yes (Verbose) | Yes | No (Requires definition) | No (Binary) |

| Standardization | Loose (RFC 4180 often ignored/varied) | Proprietary (Open Spec exists) | Well-defined (RFC 8259) | Well-defined (W3C Standards) | Loose | None (Application specific) | Well-defined (Apache Projects) |

| Complexity | Low (Superficially) / High (Robust Parsing) | High | Medium | High | Low (Superficially) | Medium (Fragile) | Medium (Requires specific libraries) |

| File Size | Compact | Larger (due to formatting etc.) | Medium (Less verbose than XML) | Very Verbose | Compact | Potentially Large (padding) | Very Compact (Compression, Encoding) |

| Use Cases | Simple data exchange, Logs, Basic Data Onboarding (w/ external tools) | Spreadsheets, Reports, User-facing data | Web APIs, Config files, NoSQL DBs, Complex Configs, Nested Data | Config files, SOAP APIs, Doc formats, Enterprise Integration | Data exchange (avoids comma issues) | Legacy systems, Mainframes (Legacy Conversion) | Big Data Analytics, Data Lakes, Efficient Storage |

| Pros | Simple Concept, Compatible, Compact | Rich features, Widely used by end-users | Flexible, Web standard, Good for nesting, Readable | Standardized, Self-describing (w/ schema), Mature | Avoids comma delimiter issues | Efficient for fixed-format parsing | High Performance, Compression, Schema Evolution |

| Cons | Ambiguous (Delimiter/Encoding/Quote), No Types/Schema, Bad for Nesting, Poor for Complex Validation/Transformation | Binary, Proprietary, Large files, Prone to user error | Less compact than CSV, Schema optional | Verbose, Complex parsing | Tabs can be inconsistent, Less standard | Rigid, Fragile to changes, Not readable | Not human-readable, Requires libraries |

Guidance on When to Choose:

- Choose CSV when:

- You need a simple format for flat, tabular data.

- Broad compatibility across different systems is the highest priority.

- File size needs to be relatively small (compared to XML/JSON).

- Crucially: You have a separate, capable tool or process (csv importer with etl, data onboarding platform, custom script) to handle the inevitable complexities of parsing variations, validation, and transformation. Do not choose CSV if you need the format itself to enforce data types or complex rules.

- Choose Excel (.xlsx) when:

- The primary consumers are end-users who need rich formatting, formulas, charts, or multiple sheets within one file.

- The file is mainly for reporting or manual analysis, not for automated system-to-system data exchange.

- (Consider tools to convert xls to csv or convert json to xlsx if interoperability is needed later).

- Choose JSON when:

- Dealing with web APIs (it's the de facto standard).

- Storing configuration files.

- Representing data with nested or hierarchical structures.

- Working extensively within JavaScript ecosystems.

- Choose XML when:

- Integration with legacy enterprise systems (especially SOAP-based) is required.

- Strict standardization and validation against complex, predefined schemas (DTD/XSD) are paramount.

- Working with document-centric data (e.g., XHTML, SVG).

- Choose TSV when:

- Your data frequently contains commas, making comma delimiting problematic.

- You want a simple text-based tabular format and the target system explicitly supports TSV. (Be mindful that tabs within data fields still require proper handling, often quoting).

- Choose Fixed-Width when:

- You absolutely must interface with legacy systems (e.g., mainframes) that demand this specific, rigid, position-based format. Requires precise external documentation of field lengths and positions.

- Choose Parquet / Avro when:

- Dealing with large-scale data analytics (Big Data) in ecosystems like Hadoop or Spark.

- High performance for reads/writes, efficient data compression, and robust schema evolution support are critical requirements.

Selecting the right format upfront can save significant effort and prevent issues during development and integration. CSV's simplicity is appealing, but its limitations must be acknowledged and addressed with appropriate tooling for complex tasks.

Frequently Asked Questions (FAQ)

- Q: How do I handle CSV files with different delimiters (comma, semicolon, tab, pipe)?

- A: Don't rely on tools that assume commas. Use a robust data import tool, library (like Python's 'csv' or Pandas 'read_csv'), or data onboarding platform that allows you to explicitly specify the delimiter character during the parsing stage. Some advanced tools offer auto-detection, but explicit specification is always more reliable when the delimiter is known.

- Q: My CSV data looks garbled (Mojibake)! How do I fix encoding issues?

- A: This is almost certainly an encoding mismatch. The file was likely saved in one encoding (e.g., UTF-8, Windows-1252) but is being read using a different, incompatible one (e.g., ASCII, Latin-1). Solution: Try opening the file in a capable text editor (like VS Code, Notepad++, Sublime Text) that allows you to specify the encoding when opening or reopen with different encodings. Try UTF-8 first, then common regional encodings like Windows-1252 or Latin-1 until the characters display correctly. Best Practice: Standardize on UTF-8 (without BOM) for both generating and reading CSVs whenever possible.

- Q: What's the best way to perform complex data validation on CSV files? Spreadsheets aren't enough.

- A: You're right, spreadsheets fall short. For real business rules, you need a dedicated data validation automation tool, ETL tool, or data onboarding platform. Key features to look for are the ability to: define custom validation logic (via configuration, visual builders, or scripting), check for uniqueness, validate formats (regex), check ranges, enforce list lookups (enumerations), and critically, perform lookups against external databases or APIs to check referential integrity (e.g., does this Customer ID exist?). Clear, row/cell-level error reporting is also essential.

- Q: Can I transform CSV data (clean, calculate, restructure) without writing code?

- A: Yes. Many modern data transformation software solutions and data onboarding platforms offer no code data transformation capabilities. These typically provide visual interfaces (like drag-and-drop workflows or function palettes) allowing users to define cleaning steps (trimming, case changes), perform calculations, apply conditional logic, split/join columns, etc., without programming. This makes them some of the easiest data transformation software for common tasks. However, the best platforms often also allow injecting custom code (like Python) for maximum flexibility when needed for highly complex scenarios.

- Q: How can AI help with the tedious task of CSV data mapping?

- A: AI data mapping tools significantly accelerate the process. They analyze column headers and sample data in your source CSV and compare them to your target schema (database table, API definition). Based on name similarity, data patterns, and learned associations, the AI suggests likely mappings (e.g., automatically proposing that 'Cust_FName' maps to 'FirstName' and 'PurchaseDt' maps to 'OrderDate'). This turns data mapping automation from a fully manual task into one where users primarily review, approve, or slightly adjust AI-generated suggestions, saving immense time, especially for files with many columns.

- Q: What exactly is data onboarding and why is CSV so central to it?

- A: Data onboarding is the critical process of importing external data—often from new customers, partners, or acquired systems—into your primary business platform or application. CSV is frequently the format provided by the external party because it's a common, simple export option for many systems. However, this incoming CSV data rarely matches the target system's schema or quality standards perfectly. Therefore, the onboarding process must involve robust parsing, data mapping, extensive customer data validation against the target system's rules, and often significant data transformation before the data can be successfully loaded and used. This complexity makes effective data onboarding tools or comprehensive data onboarding solutions essential for achieving efficient, accurate, and repeatable onboarding workflows.

Conclusion: Moving Beyond Basic CSV Handling

The Comma-Separated Values format, while simple in concept, presents a landscape rich with nuance and potential pitfalls in practice. Its ubiquity in data migration, legacy conversion, and everyday data exchange guarantees its continued relevance, but its lack of standardization and inherent limitations demand sophisticated handling, especially for business-critical processes.

Mastering CSV truly means acknowledging its shortcomings: the ambiguities in parsing, the absence of embedded schema or data types, and its inadequacy for complex validation or transformation logic. Successfully navigating data onboarding or executing large-scale data migrations requires moving beyond basic parsing and embracing data onboarding best practices. This involves implementing robust, multi-stage workflows that explicitly address data mapping (leveraging AI data mapping where possible), rigorous data validation automation (including custom logic and external lookups), and flexible data transformation (using easy data transform tools or powerful scripting).

Manually wrestling with these challenges using spreadsheets or simple scripts is inefficient, error-prone, and unsustainable. Modern data onboarding platforms, dedicated data transformation platforms, and capable ETL tools for data migration exist precisely because they provide the necessary automation and power to overcome CSV's inherent difficulties. They offer configurable parsing, intelligent mapping assistance, powerful validation engines, flexible transformation builders, and clear error handling—capabilities essential for ensuring data quality and streamlining workflows.

By understanding the deep complexities hidden beneath CSV's simple surface and leveraging the right tools designed to manage that complexity, data professionals, implementation specialists, and migration analysts can avoid the common traps, ensure data integrity, and unlock the value within their CSV data far more effectively and efficiently.

Frequently Asked Questions

How do I handle CSV files with different delimiters (comma, semicolon, tab, pipe)?▼

Don't rely on tools that assume commas. Use a robust data import tool, library (like Python's 'csv' or Pandas 'read_csv'), or data onboarding platform that allows you to explicitly specify the delimiter character during the parsing stage. Some advanced tools offer auto-detection, but explicit specification is always more reliable when the delimiter is known.

My CSV data looks garbled (Mojibake)! How do I fix encoding issues?▼

This is almost certainly an encoding mismatch. The file was likely saved in one encoding (e.g., UTF-8, Windows-1252) but is being read using a different, incompatible one (e.g., ASCII, Latin-1). Solution: Try opening the file in a capable text editor (like VS Code, Notepad++, Sublime Text) that allows you to specify the encoding when opening or reopen with different encodings. Try UTF-8 first, then common regional encodings like Windows-1252 or Latin-1 until the characters display correctly. Best Practice: Standardize on UTF-8 (without BOM) for both generating and reading CSVs whenever possible.

What's the best way to perform complex data validation on CSV files? Spreadsheets aren't enough.▼

You're right, spreadsheets fall short. For real business rules, you need a dedicated data validation automation tool, ETL tool, or data onboarding platform. Key features to look for are the ability to: define custom validation logic (via configuration, visual builders, or scripting), check for uniqueness, validate formats (regex), check ranges, enforce list lookups (enumerations), and critically, perform lookups against external databases or APIs to check referential integrity (e.g., does this Customer ID exist?). Clear, row/cell-level error reporting is also essential.

Can I transform CSV data (clean, calculate, restructure) without writing code?▼

Yes. Many modern data transformation software solutions and data onboarding platforms offer no code data transformation capabilities. These typically provide visual interfaces (like drag-and-drop workflows or function palettes) allowing users to define cleaning steps (trimming, case changes), perform calculations, apply conditional logic, split/join columns, etc., without programming. This makes them some of the easiest data transformation software for common tasks. However, the best platforms often also allow injecting custom code (like Python) for maximum flexibility when needed for highly complex scenarios.

How can AI help with the tedious task of CSV data mapping?▼

AI data mapping tools significantly accelerate the process. They analyze column headers and sample data in your source CSV and compare them to your target schema (database table, API definition). Based on name similarity, data patterns, and learned associations, the AI suggests likely mappings (e.g., automatically proposing that 'Cust_FName' maps to 'FirstName' and 'PurchaseDt' maps to 'OrderDate'). This turns data mapping automation from a fully manual task into one where users primarily review, approve, or slightly adjust AI-generated suggestions, saving immense time, especially for files with many columns.

What exactly is data onboarding and why is CSV so central to it?▼

Data onboarding is the critical process of importing external data—often from new customers, partners, or acquired systems—into your primary business platform or application. CSV is frequently the format provided by the external party because it's a common, simple export option for many systems. However, this incoming CSV data rarely matches the target system's schema or quality standards perfectly. Therefore, the onboarding process must involve robust parsing, data mapping, extensive customer data validation against the target system's rules, and often significant data transformation before the data can be successfully loaded and used. This complexity makes effective data onboarding tools or comprehensive data onboarding solutions essential for achieving efficient, accurate, and repeatable onboarding workflows.

The visual data transformation platform that lets implementation teams deliver faster, without writing code.

Start mappingNewsletter

Get the latest updates on product features and implementation best practices.