CSV Data Transformation for Implementation Teams

A Practitioner's Guide to Standardizing Client Data for Import

Executive Summary for Implementation Leads

- The Problem: Client CSV data is rarely import-ready. Manual cleaning in Excel risks data corruption (e.g., dropped leading zeros), while custom Python scripts create technical debt and maintenance overhead.

- The Solution: Moving from ad-hoc scripts to Data Onboarding Infrastructure. Modern platforms combine visual mapping for speed with code for complex edge cases.

- Key Capabilities Required: Implementation teams need tools that support external lookups (enrichment), conditional logic, and robust validation before the data touches the target system.

Implementation teams, data migration specialists, and data onboarding professionals face a constant challenge: client data is never system-ready. Tasked with loading external data into systems like Salesforce, NetSuite, Workday, or custom applications, the bottleneck isn't parsing the file structure. It's standardizing the chaos within it.

Failed or inaccurate data onboarding directly impacts project timelines and client satisfaction. Relying on basic csv upload tools or manual Excel work is insufficient for enterprise requirements.

This guide provides a technical deep dive into CSV data transformation. We illustrate common csv data cleaning steps and csv data validation techniques using a concrete scenario: transforming a messy contact list CSV into a standardized format ready for CRM import.

We compare three approaches: traditional spreadsheets (Excel), scripting (Python/SQL), and specialized Data Onboarding Infrastructure like DataFlowMapper.

The Scenario: 'source_contacts.csv'

Imagine receiving 'source_contacts.csv' from a new client. It contains typical inconsistencies: mixed date formats, combined names, and missing region data.

Try DataFlowMapper free in your browser and follow along. Click here to signup

Download the example files: source_contacts.csv | destination_template.csv

Source Data ('source_contacts.csv'):

Full Name,email address,company,"Registered Date",Notes,ZIP

"Alice Wonderland","alice@example.com ","Wonderland Inc.","10/05/2024","Met at conference, interested in Product A",01234

Bob The Builder,bob@construct.co,,"5-May-24","Needs follow-up",98765

"Charlie Chaplin","charlie@silentfilms.net","Chaplin Studios","20240515","VIP",NULL

Diana Prince,diana@themyscira.org,Amazon Corp,"May 20th, 2024",, 54321

"Edward Scissorhands","edward@cuttingedge.com ","","2024-05-22","Referral",N/A

Fiona Shrek,"fiona@swamp.org","Far Far Away LLC","2024-05-23","Existing Customer",12345

"George Jetson","george@spacely.com","Spacely Space Sprockets, Inc.","6/1/2024","Inquired via website",90210

"Harry Potter","harry@hogwarts.edu","Ministry of Magic","20240602","Needs demo",00001

"Indiana Jones","indy@archaeology.org","Marshall College","June 5th, 2024","Sent artifacts",67890

"James Bond","007@mi6.gov.uk ","MI6","2024-06-07","Top Secret",SW1A 2AH

Target Requirements:

- FirstName/LastName: Split from 'Full Name'.

- RegistrationDate: Standardized to 'YYYY-MM-DD'.

- LeadSource: Derived from 'Notes' (If 'conference' in notes, set to 'Conference').

- Region: Enriched via external lookup based on 'ZIP'.

Challenge 1: Standardizing Inconsistent Data Types (Dates & ZIPs)

Client data often treats ZIP codes as numbers (dropping leading zeros) and dates as free text.

- 'ZIP': Values like '01234' become '1234' if opened in Excel.

- 'Registered Date': A mix of '10/05/2024', '5-May-24', '20240515'.

Comparing Approaches: Handling Data Types

-

Excel:

- ZIP: Requires the 'Data > Get Data > From Text/CSV' wizard. If a user double-clicks the file, the leading zero is lost immediately.

- Dates: Parsing mixed formats requires brittle nested formulas.

=TEXT( IF(ISNUMBER(D2), DATE(LEFT(D2,4),MID(D2,5,2),RIGHT(D2,2)), /* Handles YYYYMMDD */ IF(ISNUMBER(DATEVALUE(D2)), DATEVALUE(D2), /* Handles recognizable formats */ IFERROR(DATEVALUE(SUBSTITUTE(LEFT(D2,FIND(" ",D2)-1),"th","")), /* Handles 'Dayth' */ "INVALID_DATE"))), "YYYY-MM-DD")- Risk: High probability of irreversible data corruption. Formulas are hard to maintain.

-

Python (Pandas):

- Method: Explicit control. Read 'ZIP' as a string to preserve zeros. Use 'pd.to_datetime' for flexible date parsing.

import pandas as pd # Explicitly read ZIP as string to preserve leading zeros df = pd.read_csv('source_contacts.csv', dtype={'ZIP': str}) # Attempt flexible date parsing, coercing errors to NaT df['RegistrationDate_dt'] = pd.to_datetime(df['Registered Date'], errors='coerce', infer_datetime_format=True) df['RegistrationDate'] = df['RegistrationDate_dt'].dt.strftime('%Y-%m-%d')- Risk: Requires setting up environments and maintaining scripts for every client.

-

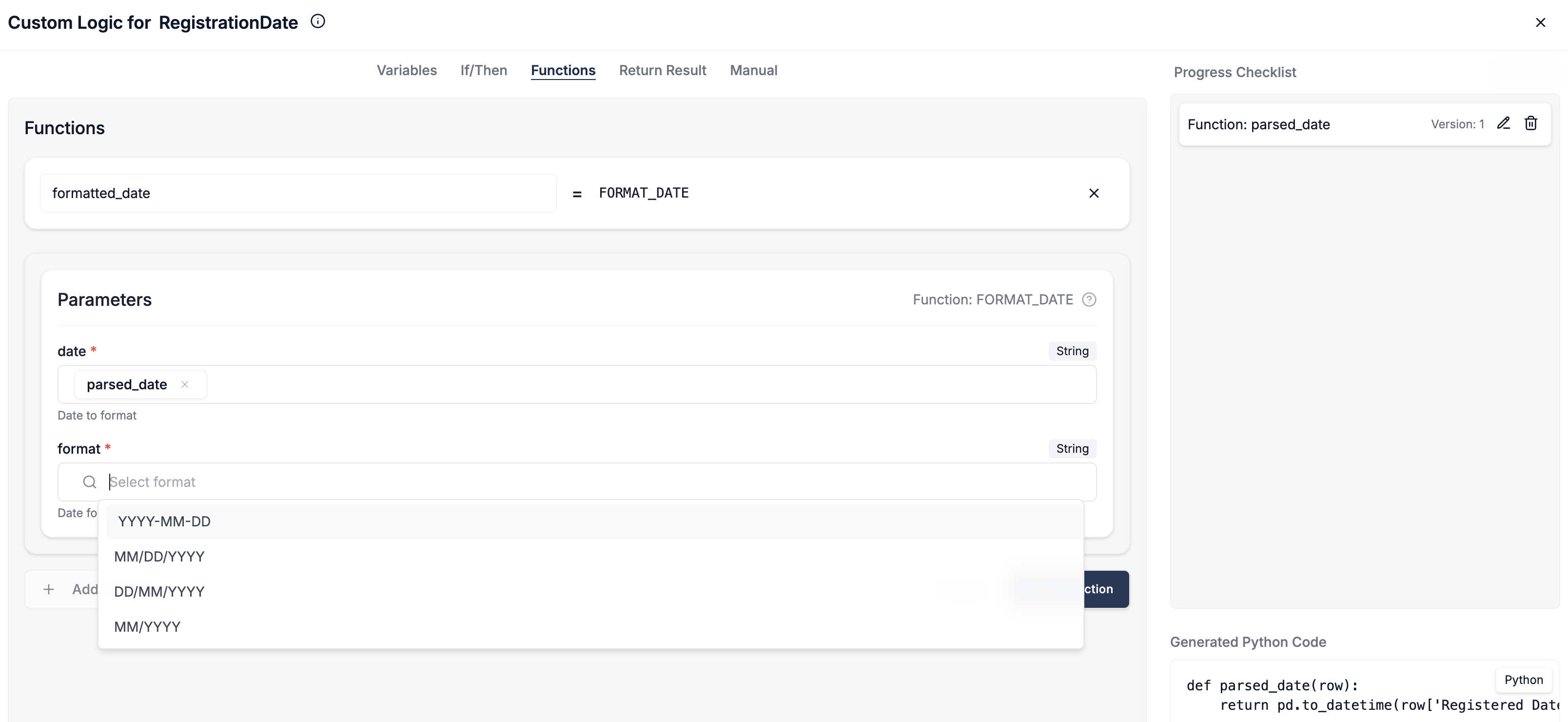

DataFlowMapper Advantage:

- Strict Typing: The platform separates parsing from transformation. Mapping 'ZIP' to a Text field preserves the exact string.

- Native Functions:

TO_DATEintelligently parses common date formats without complex logic.- Add Function:

TO_DATE(Registered Date) - Add Function:

FORMAT_DATE(result, "YYYY-MM-DD")

- Add Function:

Challenge 2: Applying Conditional Logic (Deriving LeadSource)

The CRM requires a 'LeadSource'. If 'Notes' contains "conference", set to 'Conference'; otherwise 'Event'.

Comparing Approaches: Handling Conditional Logic

-

Excel:

- Relies on

IF+ISNUMBER(SEARCH(...)). Nesting multiple conditions makes formulas unreadable.

=IF(ISNUMBER(SEARCH("conference", E2)), "Conference", "Event") - Relies on

-

SQL:

- Uses

CASE WHENstatements. Readable for simple cases, but logic is buried in queries.

SELECT CASE WHEN LOWER(Notes) LIKE '%conference%' THEN 'Conference' ELSE 'Event' END AS LeadSource - Uses

-

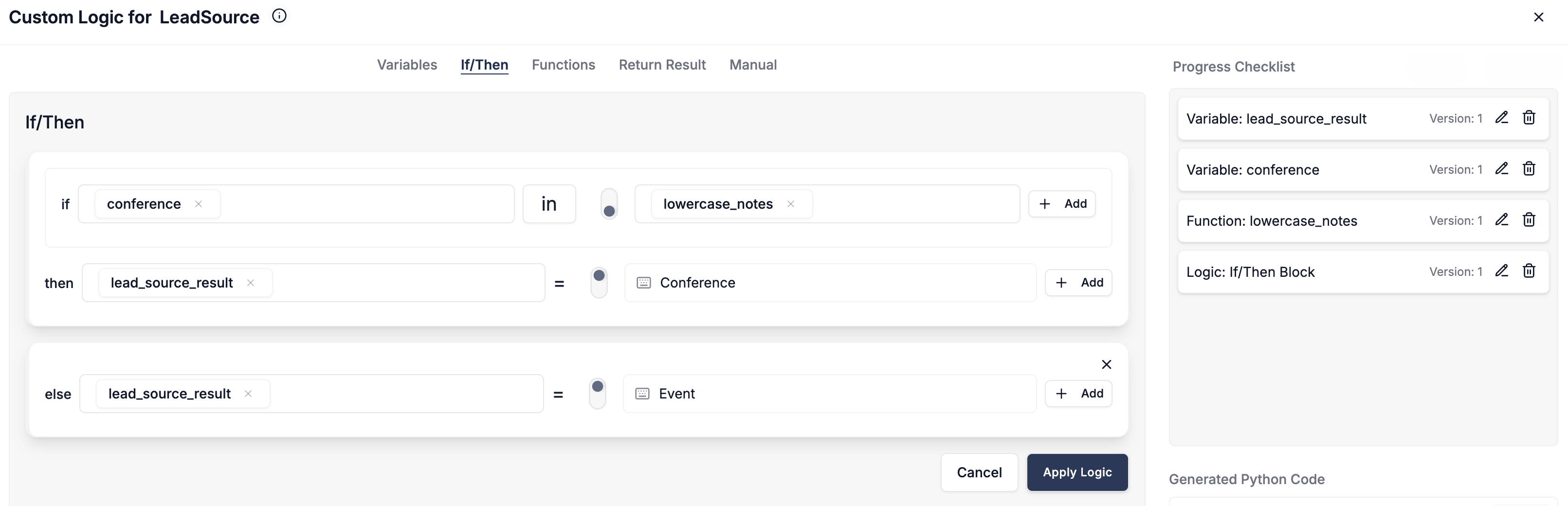

DataFlowMapper Advantage:

- Visual Logic Builder: Provides a structured interface for business rules.

- IF 'Notes' contains 'conference' (case-insensitive)

- THEN 'LeadSource' = 'Conference'

- ELSE 'LeadSource' = 'Event'

- AI Logic Assist: For complex rules, describe the requirement in plain English. The AI generates the corresponding visual logic blocks or Python code, accelerating development.

- Visual Logic Builder: Provides a structured interface for business rules.

Challenge 3: Enriching Data with External Lookups (Region from ZIP)

The target CRM needs a 'Region' derived from 'ZIP' using an external database or API.

Comparing Approaches: Handling External Lookups

-

Excel:

- Limited to

VLOOKUPwithin the workbook. Cannot query external APIs or databases directly. Requires manual data staging.

- Limited to

-

Python:

- Requires writing HTTP requests or SQL queries. Developers must handle authentication, rate limiting, and error handling.

def get_region_from_api(zip_code): try: response = requests.get(f"https://api.example.com/zip/{zip_code}", headers=auth) return response.json().get('region') except requests.exceptions.RequestException: return 'API Error' -

DataFlowMapper Advantage:

- 'remotelookup' Function: Purpose-built for enrichment. Connections are configured securely once.

- Implementation:

region = remotelookup(source="ZipInfoAPISource", parameters={"zipParam": row["CleanedZIP"]}, return_field="RegionName") - Benefit: Eliminates "VLOOKUP hell" and complex scripting. Lookups are a native part of the transformation flow.

Challenge 4: Restructuring Data (Splitting Full Name)

Splitting 'Full Name' into 'FirstName' and 'LastName' requires handling variations like titles and suffixes.

Comparing Approaches: Handling Restructuring

-

Excel:

- "Text to Columns" fails on middle names. Formulas to find the last space are notoriously complex.

-

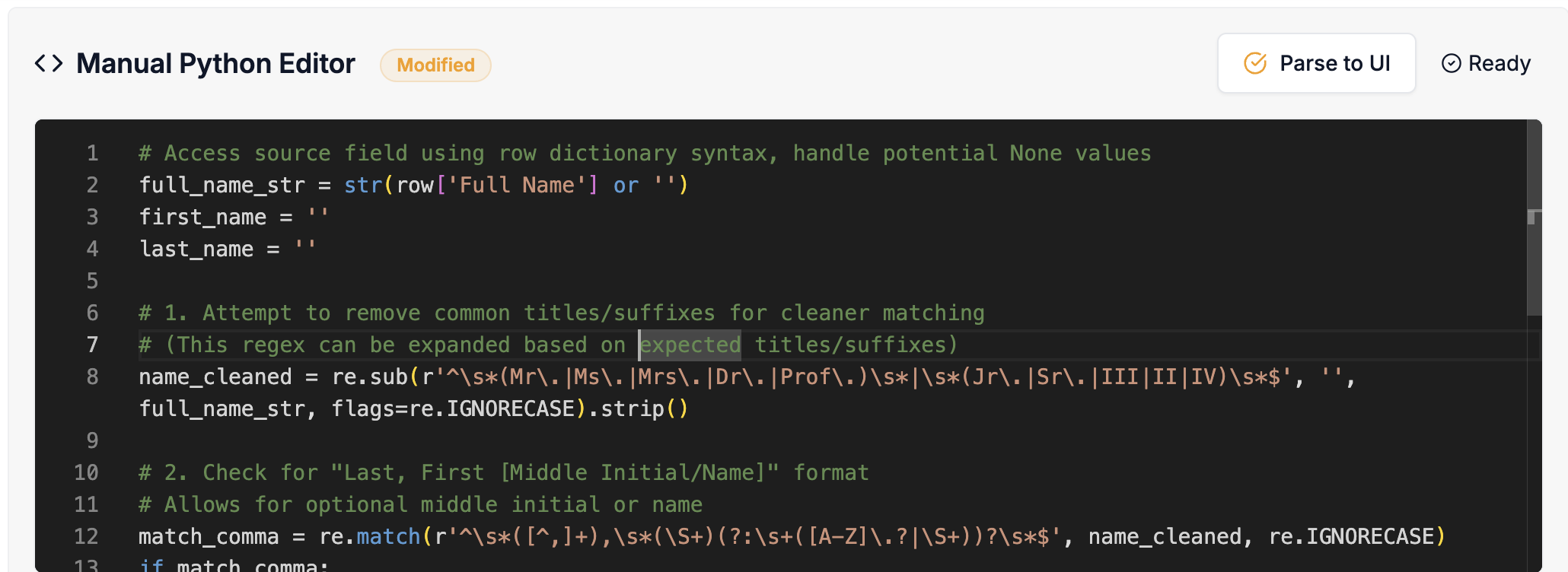

DataFlowMapper Advantage (Hybrid):

- Python IDE: For robust parsing, DataFlowMapper integrates a full Python IDE. You can use standard libraries like

re(regex) directly in the mapping.# Python code within DataFlowMapper's Logic Builder import re name = str(row['Full Name'] or '') # Remove common titles clean_name = re.sub(r'^\s*(Mr\.|Ms\.|Dr\.)\s+', '', name, flags=re.IGNORECASE).strip() parts = clean_name.split() result = parts[0] if parts else '' - Reusability: This logic can be saved as a template and reused across projects, ensuring consistent handling of edge cases.

- Python IDE: For robust parsing, DataFlowMapper integrates a full Python IDE. You can use standard libraries like

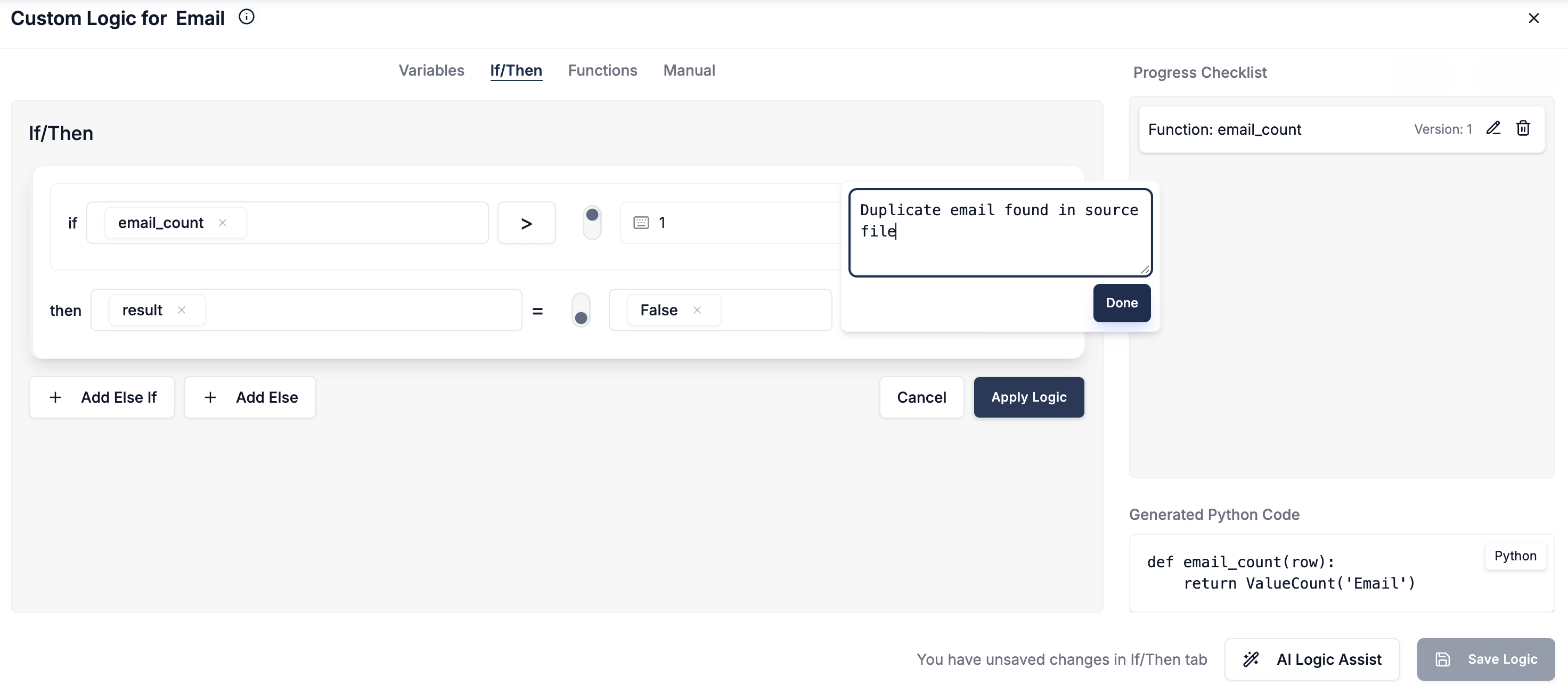

Challenge 5: Robust Data Validation (Email Format & Duplicates)

Validating data before import is the only way to prevent database pollution.

Comparing Approaches: Handling Validation

-

Excel:

- Conditional Formatting only flags errors visually. It does not stop bad data from proceeding.

-

Python:

- Requires writing custom validation functions and building reporting mechanisms to output errors to a readable format.

-

DataFlowMapper Advantage:

- Dedicated Validation Engine: Validation is a distinct stage applied after transformation.

- Capabilities:

- Regex Checks: Strict email validation.

- In-File Duplicates:

VALUECOUNT(row['Email']) > 1flags duplicates in the current file. - External Validation:

remotelookupchecks if the email already exists in the target CRM.

- Feedback Loop: Rows failing validation are flagged with specific error messages, allowing teams to correct data at the source before final import.

Choosing Your Weapon: A Comparative Summary for Implementation Teams

| Approach | Pros | Why it fails for Implementation Teams |

|---|---|---|

| Excel | Familiar interface | High corruption risk. Silent errors (dropped zeros), no audit trail, manual & non-repeatable. |

| SQL | Powerful set-based ops | Infrastructure heavy. Requires staging tables; difficult to handle API lookups and file I/O. |

| Python | Unlimited flexibility | Technical Debt. Creates maintenance overhead; requires developer resources for every new client template. |

| DataFlowMapper | Purpose-Built Infrastructure | Combines visual speed with code power (Python/Regex). Native validation and enrichment. Repeatable. |

Conclusion: Stop Building Custom Scripts

For implementation teams, data transformation is not a one-off task; it is a repeatable process that demands reliability. While Python offers power and Excel offers accessibility, neither is designed as collaborative infrastructure for client data onboarding.

DataFlowMapper bridges this gap. It provides the no-code speed for standard mappings and the low-code power for complex business rules. By standardizing on a platform, implementation teams can stop debugging scripts and start delivering faster migrations.

Standardize your implementation workflow. Build your first reusable mapping in the Logic Builder today.

Frequently Asked Questions

Why is data onboarding infrastructure better than Python scripts?▼

While Python scripts are powerful, they create technical debt. Infrastructure provides a standardized, visual environment that allows non-engineers to build and validate logic, reducing the dependency on individual developers for every migration project.

How can I automate CSV data cleaning without code?▼

Specialized data onboarding platforms like DataFlowMapper use visual logic builders. These allow implementation teams to define cleaning rules (like date standardization or email validation) once and apply them automatically to every new client file.

What is the best way to validate client data before import?▼

The most robust method is using a dedicated validation engine that runs post-transformation but pre-import. This ensures data is checked against business rules (e.g., regex for emails, unique constraints) and provides specific error reports before it ever touches your destination system.

The visual data transformation platform that lets implementation teams deliver faster, without writing code.

Start mappingNewsletter

Get the latest updates on product features and implementation best practices.